Background and Objectives: Antibiotic misuse contributes to antibiotic resistance and is a growing public health threat in the United States and globally. Professional medical societies promote antibiotic stewardship education for medical students, ideally before inappropriate practice habits form. To our knowledge, no tools exist to assess medical student competency in antibiotic stewardship and the communication skills necessary to engage patients in this endeavor. The aim of this study was to develop a novel instrument to measure medical students’ communication skills and competency in antibiotic stewardship and patient counseling.

Methods: We created and pilot tested a novel instrument to assess student competencies in contextual knowledge and communication skills about antibiotic stewardship with standardized patients (SPs). Students from two institutions (N=178; Albert Einstein College of Medicine and Warren Alpert Medical School of Brown University) participated in an observed, structured clinical encounter during which SPs trained in the use of the instrument assessed student performance.

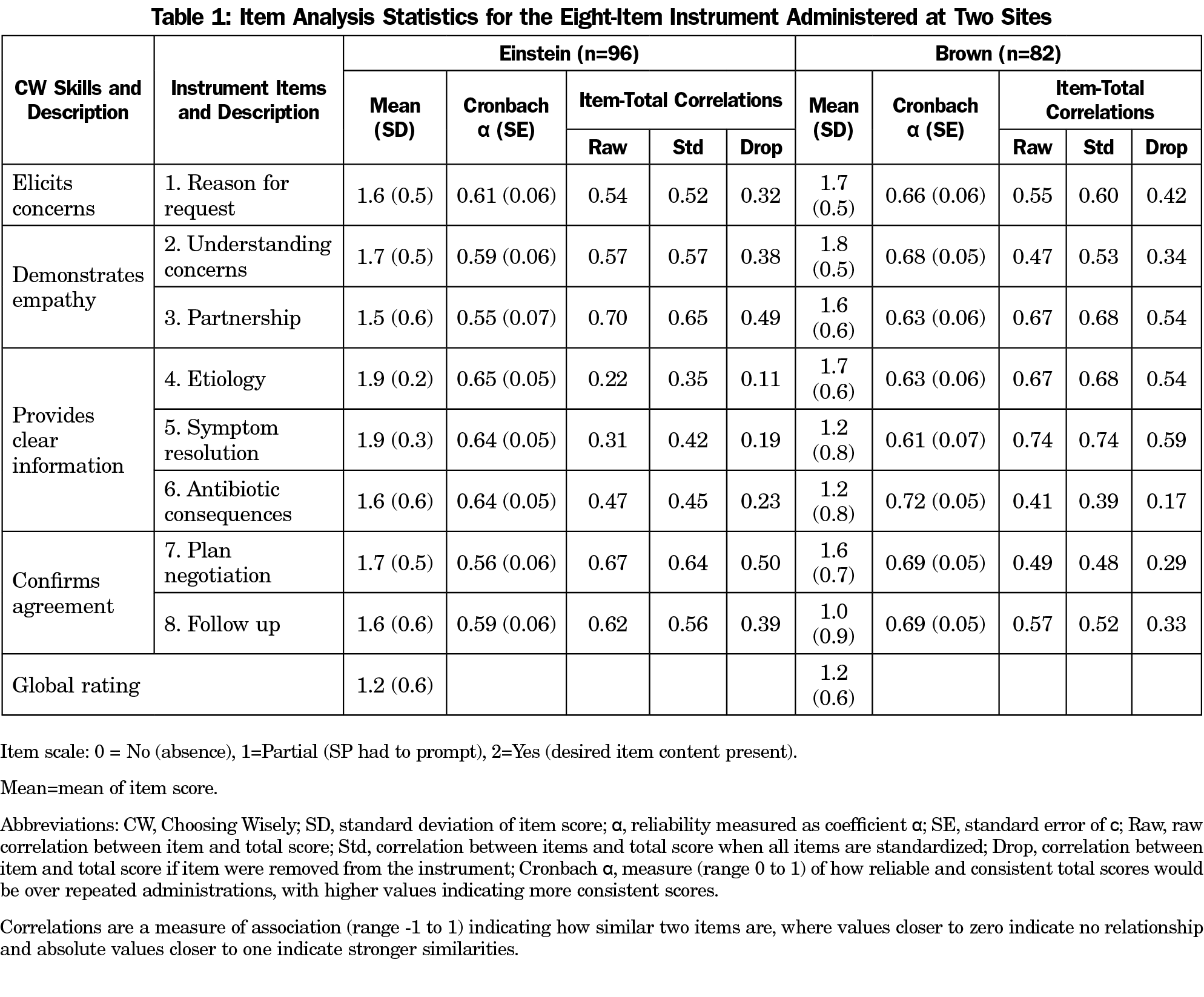

Results: In ranking examinee instrument scores, Cronbach α was 0.64 (95% CI: 0.53 to 0.74) at Einstein and 0.71 (95% CI: 0.60 to 0.79) at Brown, both within a commonly accepted range for estimating reliability. Global ratings and instrument scores were positively correlated (r=0.52, F[3, 174]=30.71, P<.001), providing evidence of concurrent validity.

Conclusions: Similar results at both schools supported external validity. The instrument performed reliably at both institutions under different examination conditions, providing evidence for the validity and utility of this instrument in assessing medical students’ skills related to antibiotic stewardship.

Antibiotic misuse contributes to antibiotic resistance and is a global public health threat.1,2 Annually, 2 million people acquire antibiotic-resistant infections in the United States, and 23,000 die.2 In 2012, the American Board of Internal Medicine (ABIM) Foundation, Consumer Reports, and nine medical specialty societies established the Choosing Wisely (CW) campaign to promote resource stewardship and encourage conversations with patients about unnecessary care.3-6 Through CW, various specialty societies recommend against the inappropriate prescribing of antibiotics for upper respiratory infections (URI).4,6,7

Several professional medical societies promote antibiotic stewardship education for medical students.3 Medical students want more education on antibiotic stewardship.7,8 The literature on antibiotic stewardship training mainly reports on feedback to learners in postgraduate training programs.9 Few programs for medical students report behavior change outcomes.9,10 Although several instruments assess medical students’ general communication skills, none assess students’ ability to communicate about stewardship of antibiotics.11-13 To address this gap, we developed a novel instrument to assess students’ antibiotic stewardship competency and determined its psychometric properties.

Setting and Participants

Third-year students at the Albert Einstein College of Medicine (Einstein, n=96) and the Warren Alpert Medical School of Brown University (Brown, n=82) participated in this study during their family medicine clerkship (2017-2018). Both institutional review boards deemed this study exempt.

Intervention

At both institutions, antibiotic stewardship instruction is integrated into the preclinical Microbiology/Infectious Disease course. Students reviewed the CW website and its related videos12 during the family medicine clerkship. At the clerkship’s conclusion, student competency attainment is assessed via an observed structured clinical encounter (OSCE).

We completed a multistage, iterative development and implementation of a CW-OSCE with trained standardized patients (SPs) as evaluators.14 At both institutions, the CW-OSCE was one of five end-of-clerkship OSCE stations. Each student interviewed and counseled an SP requesting antibiotics for URI symptoms using the framework of CW concepts.13 The SPs and simulated cases were different at each school: viral pharyngitis (Brown) and viral rhinosinusitis (Einstein).

Measures

Using the CW framework,13 we designed eight items to measure students’ ability to engage patients meaningfully in appropriate prescribing of antibiotics.12 For example, these items included whether the student explored the patient’s reason for requesting antibiotics, explained the side effects of antibiotics, and planned a follow-up. Each antibiotic stewardship concept was measured by one to three items, and each item had a yes (2 points), partial (1 point), or no (0 points) rating scale.15,16 All items measured antibiotic stewardship concepts rather than general communication skills.17 Table 1 gives the main-item concepts for assessing antibiotic stewardship. SPs also assigned a single-item global rating of the student performance (range 0-2, with higher ratings indicating better overall performance for the station).
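The scoring rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the rating labels, the `POINTS` mapping, the `total_score` helper, and the example ratings are hypothetical and are not taken from the instrument itself, which is available from the authors.

```python
# Hypothetical labels for the yes / partial / no scale (2, 1, 0 points).
POINTS = {"yes": 2, "partial": 1, "no": 0}


def total_score(ratings):
    """Sum the points for one student's item ratings (one label per item)."""
    return sum(POINTS[r] for r in ratings)


# Made-up ratings for one student on the eight items (not study data).
example = ["yes", "yes", "partial", "no", "yes", "partial", "yes", "yes"]
print(total_score(example))  # 2+2+1+0+2+1+2+2 = 12
```

With eight items scored 0 to 2, the total under this sketch ranges from 0 to 16.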

SPs at both institutions received training in the use of the instrument. The instrument is currently in use, and is available from the authors upon request.

Analyses

Preliminary analysis of the initial Einstein data showed minimal variability in the student scores (SPs gave perfect scores to almost all students). This prompted SP retraining and exclusion of the first 6 months’ data from the final analyses. We used the R statistical software program (R Foundation for Statistical Computing, Vienna, Austria) and the psych package to calculate a total instrument score for each student, as well as item difficulties, score distributions, scale structure, reliability (Cronbach α), and concurrent validity via item-total correlations.20 We analyzed results separately from each school to generate initial evidence for external validity. Taken together, these analyses were intended to determine the psychometric properties of the instrument.18,19,21-24
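The authors computed these statistics in R with the psych package. Purely as an illustration, the same quantities can be sketched in Python with the standard library. The function names and the small score matrix below are hypothetical, and the sketch takes item difficulty to be the mean item score, which is an assumption consistent with the 0-2 scale rather than a definition stated in the text.

```python
from statistics import mean, variance


def cronbach_alpha(scores):
    """Cronbach alpha for a list of rows (one row of item scores per student)."""
    k = len(scores[0])                      # number of items
    items = list(zip(*scores))              # transpose: one tuple per item
    item_vars = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - item_vars / total_var)


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def corrected_item_total(scores, j):
    """Correlate item j with the total of the remaining items."""
    item = [row[j] for row in scores]
    rest = [sum(row) - row[j] for row in scores]
    return pearson(item, rest)


def item_difficulty(scores, j):
    """Mean score on item j (assumed definition of item difficulty)."""
    return mean(row[j] for row in scores)


# Made-up scores for five students on three items (not study data).
demo = [[2, 2, 1], [2, 1, 1], [1, 1, 0], [2, 2, 2], [0, 1, 0]]
print(cronbach_alpha(demo))
print([corrected_item_total(demo, j) for j in range(3)])
print([item_difficulty(demo, j) for j in range(3)])
```

The sketch does not reproduce the confidence intervals for α reported in the study; those would need an additional standard error estimate such as the one described in reference 21.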

Analysis of the instrument scores of all students during the study period (Einstein: n=96, Brown: n=82) showed that item difficulties averaged 1.6 (range 1-1.9), which is ideal for discriminating student ability. Cronbach α was 0.64 (Einstein, 95% CI: 0.53 to 0.74) and 0.71 (Brown, 95% CI: 0.60 to 0.79), both within a commonly accepted range (ie, 0.60-0.85) for estimating reliability (Table 1).

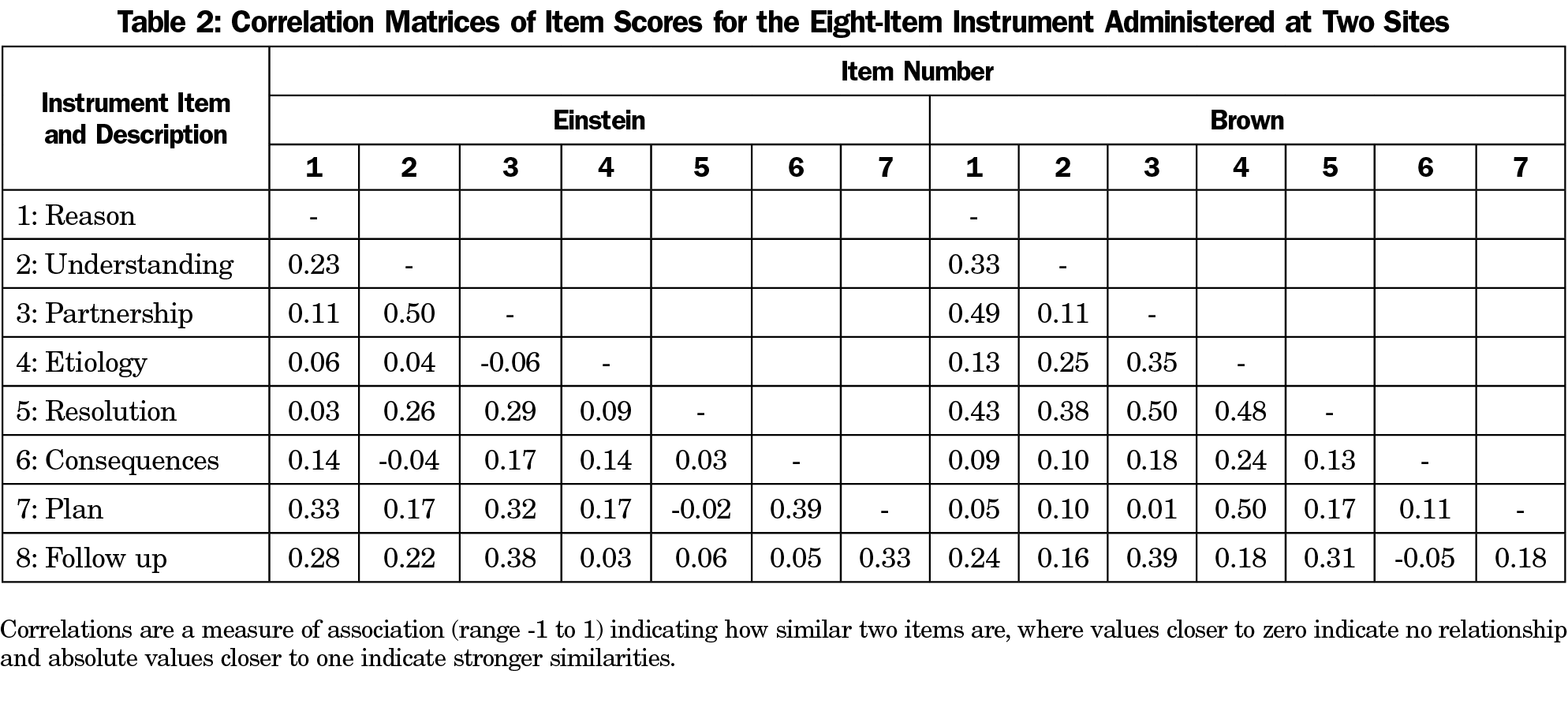

Table 2 shows that simple correlations (r) between items were in an acceptable range (ie, 0.15-0.50), considering that correlations deflate when item score ranges are limited to discrete values of 0 to 2. Except for items Q4 and Q5, with smaller Drop correlations due to the ceiling effect, item correlations were similar in magnitude for the two locations (eg, Q3 had relatively high, and Q6 relatively low correlations at both schools).

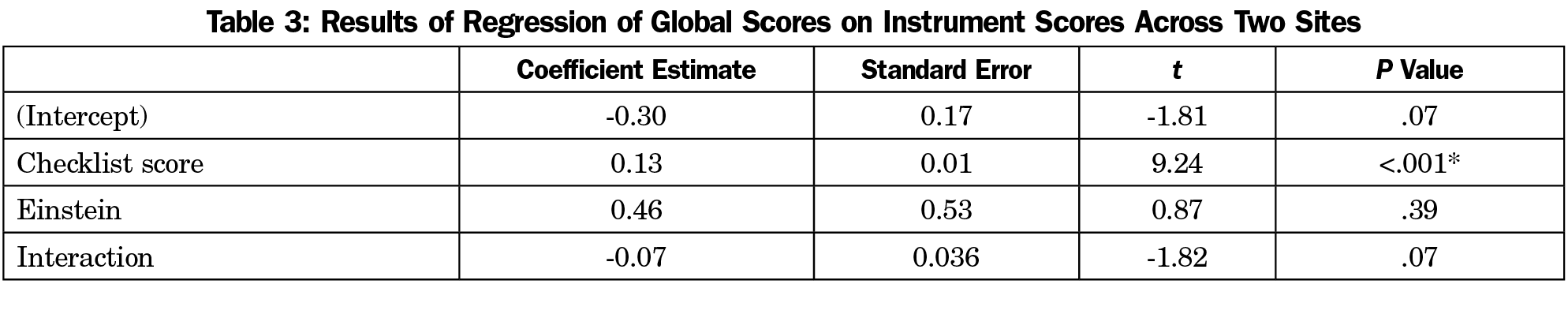

Global ratings and instrument scores were positively correlated (r=0.52, F[3, 174]=30.71, P<.001): higher instrument scores were associated with higher global ratings. In a regression analysis predicting global scores from total score and test site (Table 3), there was no significant score-by-site interaction, suggesting that the relationship between total score and global score did not differ by institution; only total scores predicted global scores (coefficient estimate=0.13, t[174]=9.24, P<.001).
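A regression of this form (global rating on total score, site, and their interaction) can be sketched as an ordinary least squares fit. The version below is a minimal standard-library Python illustration, not the authors' R analysis: the helper names, the 0/1 coding of site, and the data are all assumptions made for the example, and it returns only coefficient estimates, not the t or F statistics reported above.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):                      # back substitution
        s = sum(M[i][c] * x[c] for c in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x


def fit_interaction_model(total, site, global_rating):
    """OLS fit of global = b0 + b1*total + b2*site + b3*total*site.

    `site` is assumed to be coded 0/1. Returns [b0, b1, b2, b3].
    """
    X = [[1.0, t, s, t * s] for t, s in zip(total, site)]
    p = 4
    XtX = [[sum(row[i] * row[j] for row in X) for j in range(p)]
           for i in range(p)]
    Xty = [sum(row[i] * y for row, y in zip(X, global_rating))
           for i in range(p)]
    return solve(XtX, Xty)                            # normal equations


# Made-up data (not study data): nine students across two sites.
total = [8, 10, 12, 14, 16, 9, 11, 13, 15]
site = [0, 0, 0, 0, 0, 1, 1, 1, 1]
global_rating = [1, 1, 2, 2, 2, 1, 1, 2, 2]
print(fit_interaction_model(total, site, global_rating))
```

An interaction coefficient (`b3`) near zero relative to its standard error is what "no significant interaction of scores by site" refers to; testing that formally requires the standard errors, which this sketch omits.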

The literature reports that objectively designed instruments can assess students’ contextual knowledge and communication skills in identifying issues impacting clinical outcomes.11 We have developed a novel instrument measuring students’ demonstration of antibiotic stewardship concepts.12 The reliability and item statistics demonstrate that the instrument measured scores reliably and support its use for similar purposes at other institutions. Higher instrument scores predict higher global ratings, providing evidence of concurrent validity.

Our study has limitations. Students were assessed on a single case during their third-year family medicine clerkship, limiting generalizability to other educational settings and stages of training. Ideally, there should be coordinated preclerkship and clerkship curricula and assessments reinforcing antibiotic stewardship. Additionally, we cannot provide evidence of behavior beyond the clerkship or in the clinical setting. Assessing these competencies in practice requires novel interventions such as unannounced SPs in patient care settings.25

We developed and tested a novel instrument to measure medical student antibiotic stewardship competencies. Implementation at other institutions with different cases and learners would provide additional validity evidence for the instrument and elucidate item performance under various conditions.

Acknowledgments

The authors thank Dr Conair Guilliames, Dr Oladimeji Oki, Ms Heather Archer-Dyer, and Ms Adriana Nieto for their assistance in training the SPs, piloting the checklist, and ensuring the smooth operation of the OSCEs. They also thank the standardized patients for their commitment to helping educate our students.

Financial Support: This project was supported by funding from a National Institutes of Health Behavioral and Social Science Consortium for Medical Education Award, and from an American Board of Internal Medicine Foundation Putting Stewardship Into Medical Education and Training Award. The authors have no conflicts of interest to declare.

References

- World Health Organization. Antibiotic Resistance: Key Facts. https://www.who.int/news-room/fact-sheets/detail/antibiotic-resistance. Accessed January 29, 2019.

- Barlam TF, Cosgrove SE, Abbo LM, et al. Executive Summary: Implementing an Antibiotic Stewardship Program: Guidelines by the Infectious Diseases Society of America and the Society for Healthcare Epidemiology of America. Clin Infect Dis. 2016;62(10):1197-1202. https://doi.org/10.1093/cid/ciw217

- Levinson W, Born K, Wolfson D. Choosing Wisely campaigns: a work in progress. JAMA. 2018;319(19):1975-1976. https://doi.org/10.1001/jama.2018.2202

- Schwartz BS, Armstrong WS, Ohl CA, Luther VP. Create Allies, IDSA stewardship commitments should prioritize health professions learners. Clin Infect Dis. 2015;61(10):1626-1627. https://doi.org/10.1093/cid/civ640

- Choosing Wisely. Infectious Diseases Society of America. http://www.choosingwisely.org/clinician-lists/infectious-diseases-society-antbiotics-for-upper-respiratory-infections/. Accessed January 29, 2019.

- Fishman N; Society for Healthcare Epidemiology of America; Infectious Diseases Society of America; Pediatric Infectious Diseases Society. Policy statement on antimicrobial stewardship by the Society for Healthcare Epidemiology of America (SHEA), the Infectious Diseases Society of America (IDSA), and the Pediatric Infectious Diseases Society (PIDS). Infect Control Hosp Epidemiol. 2012;33(4):322-327. https://doi.org/10.1086/665010

- Abbo LM, Cosgrove SE, Pottinger PS, et al. Medical students’ perceptions and knowledge about antimicrobial stewardship: how are we educating our future prescribers? Clin Infect Dis. 2013;57(5):631-638. https://doi.org/10.1093/cid/cit370

- Minen MT, Duquaine D, Marx MA, Weiss D. A survey of knowledge, attitudes, and beliefs of medical students concerning antimicrobial use and resistance. Microb Drug Resist. 2010;16(4):285-289. https://doi.org/10.1089/mdr.2010.0009

- Silverberg SL, Zannella VE, Countryman D, et al. A review of antimicrobial stewardship training in medical education. Int J Med Educ. 2017;8:353-374. https://doi.org/10.5116/ijme.59ba.2d47

- Beck AP, Baubie K, Knobloch MJ, Safdar N. Promoting antimicrobial stewardship by incorporating it in undergraduate medical education curricula. WMJ. 2018;117(5):224-228.

- Thompson BM, Teal CR, Scott SM, et al. Following the clues: teaching medical students to explore patients’ contexts. Patient Educ Couns. 2010;80(3):345-350. https://doi.org/10.1016/j.pec.2010.06.035

- Duke P, et al. The ABIM Foundation's Choosing Wisely Communication Module. http://modules.choosingwisely.org/modules/m_00/default_FrameSet.htm. ABIM Foundation; 2013. Accessed October 15, 2019.

- Mukerji G, Weinerman A, Schwartz S, Atkinson A, Stroud L, Wong BM. Communicating wisely: teaching residents to communicate effectively with patients and caregivers about unnecessary tests. BMC Med Educ. 2017;17(1):248. https://doi.org/10.1186/s12909-017-1086-x

- Shumway JM, Harden RM; Association for Medical Education in Europe. AMEE Guide No. 25: the assessment of learning outcomes for the competent and reflective physician. Med Teach. 2003;25(6):569-584. https://doi.org/10.1080/0142159032000151907

- Sennekamp M, Gilbert K, Gerlach FM, Guethlin C. Development and validation of the “FrOCK”: Frankfurt Observer Communication Checklist. Z Evid Fortbild Qual Gesundhwes. 2012;106(8):595-601. https://doi.org/10.1016/j.zefq.2012.07.018

- Berger AJ, Gillespie CC, Tewksbury LR, et al. Assessment of medical student clinical reasoning by “lay” vs physician raters: inter-rater reliability using a scoring guide in a multidisciplinary objective structured clinical examination. Am J Surg. 2012;203(1):81-86. https://doi.org/10.1016/j.amjsurg.2011.08.003

- Downing SM. Validity: on meaningful interpretation of assessment data. Med Educ. 2003;37(9):830-837. https://doi.org/10.1046/j.1365-2923.2003.01594.x

- Bergus GR, Kreiter CD. The reliability of summative judgements based on objective structured clinical examination cases distributed across the clinical year. Med Educ. 2007;41(7):661-666. https://doi.org/10.1111/j.1365-2923.2007.02786.x

- Al-Naami MY. Reliability, validity, and feasibility of the Objective Structured Clinical Examination in assessing clinical skills of final year surgical clerkship. Saudi Med J. 2008;29(12):1802-1807.

- Revelle W. psych: Procedures for Personality and Psychological Research 2017. Evanston, IL: Northwestern University/Northwestern Scholars.

- Duhachek A, Iacobucci D. Alpha’s standard error (ASE): an accurate and precise confidence interval estimate. J Appl Psychol. 2004;89(5):792-808. https://doi.org/10.1037/0021-9010.89.5.792

- Cureton E. Corrected item-test correlations. Psychometrika. 1966;31(1):93-96. https://doi.org/10.1007/BF02289461

- Cronbach LJ. Coefficient alpha and the internal structure of tests. Psychometrika. 1951;16(3):297-334. https://doi.org/10.1007/BF02310555

- Lord FM, Novick MR, Birnbaum A. Statistical Theories of Mental Test Scores. Oxford, England: Addison-Wesley; 1968.

- Siminoff LA, Rogers HL, Waller AC, et al. The advantages and challenges of unannounced standardized patient methodology to assess healthcare communication. Patient Educ Couns. 2011;82(3):318-324. https://doi.org/10.1016/j.pec.2011.01.021
