Background and Objectives: Pretests have been shown to contribute to improved performance on standardized tests by facilitating the development of individualized study plans. The fmCASES National Examination is an existing, validated examination used widely in family medicine clerkships throughout the country. Our study aimed to determine whether implementing the fmCASES National Examination as a pretest decreased overall failure rates on the end-of-clerkship National Board of Medical Examiners (NBME) subject examination, and to assess whether fmCASES pretest scores correlate with student NBME scores.

Methods: One hundred seventy-one and 160 medical students from two consecutive class years at a single institution completed the family medicine clerkship and served as the control and intervention groups, respectively. The intervention group took the fmCASES National Examination as a pretest at the beginning of the clerkship and received educational prescriptions based on the results. Chi-square analysis, Pearson correlation, and receiver operating characteristic (ROC) curve analysis were used to evaluate the effectiveness of the intervention and the correlation between pretest and NBME scores.

Results: Students who completed the fmCASES National Examination as a pretest failed the end-of-clerkship NBME examination at a significantly lower rate than students who did not take the pretest. The overall failure rate was 8.1% for the intervention group and 17.5% for the control group (P=0.01). Higher pretest scores correlated with higher NBME examination scores (r=0.55, P<0.001).

Conclusions: The fmCASES National Examination is a helpful formative assessment tool for students beginning their family medicine clerkship. It introduces students to course learning objectives, helps them identify the content areas most in need of study, and can be used to help students design individualized study plans.

Standardized knowledge-based examinations are the means by which medical professionals demonstrate minimum competence to earn a medical license and achieve specialty-based credentials. Previous studies suggest a strong correlation between National Board of Medical Examiners (NBME) subject examination scores and subsequent United States Medical Licensing Examination (USMLE) scores.1-3 Thus, student preparation for and performance on gateway NBME subject examinations may provide a foundation for successful performance on future licensing examinations.

Previous studies demonstrate that clerkship practice tests can predict performance on NBME subject examinations, but show mixed results regarding their effect on improving student performance.4-5 Research in general education shows that practice tests can contribute to improved performance on standardized tests through comprehension calibration, study plan development, and the application of metacognitive strategies.6-13

fmCASES are online, case-based modules originally created to meet the Society of Teachers of Family Medicine’s National Clerkship Curriculum Objectives,14 and were found to “foster self-directed and independent study” and “emphasize and model clinical problem-solving.”15 At the time of this initiative, 146 medical schools in the United States used fmCASES to teach or assess student learners.16 Greater student engagement with the online fmCASES is associated with improved performance on end-of-clerkship examinations, and learning with the virtual cases is comparable to traditional textbook learning.17-20 Evidence also suggests that the fmCASES National Examination provides accurate and valid assessments of student clinical knowledge in family medicine and is comparable to NBME examinations.17

Our study extended the use of the fmCASES National Examination beyond its role as an end-of-clerkship evaluation. The specific aims were (1) to assess whether implementing the fmCASES National Examination as a formative assessment tool decreased the overall group failure rate on the end-of-clerkship NBME examination, and (2) to assess whether fmCASES pretest scores correlate with student NBME scores.

This was a retrospective cohort study comparing outcomes of students from two different medical school classes. Participants included students from the classes of 2016 (Co16) and 2017 (Co17) who completed the 6-week family medicine clerkship at the Uniformed Services University (USU). Co16 students served as the control group, and Co17 students served as the intervention group. The local Institutional Review Board approved this project as an exempt protocol.

As part of a clerkship curriculum change in January 2015, we implemented the fmCASES National Examination as a 100-question pretest and formative assessment tool. Our goals were (1) to give students an initial perspective on, and an opportunity to reflect on, topic areas likely to be covered on the NBME examination; (2) to use this perspective to identify study focus areas for the remaining weeks of the rotation; (3) to give students an objective measurement of their knowledge before they took the NBME examination; (4) to identify students at risk of failing the NBME examination; and (5) to highlight individual knowledge areas in need of improvement.

Students in the intervention group took one of two versions of the fmCASES National Examination, a nationally standardized and validated test on Med-U.org. The examination was administered online in proctored classrooms, and a results report was provided to all students 2 days after the exam. Intervention group students attended an hour-long study skills lecture that directed them to focus their study time during the clinical weeks on the fmCASES on which they received the lowest scores; other study management strategies were also discussed. All students completed the NBME examination at the end of the clerkship.

Intervention group students were risk stratified by pretest score. Students who scored below the group 20th percentile were designated as at risk of failing the NBME examination and received academic counseling from one of two faculty members at the rank of clinical associate professor (the current and previous family medicine clerkship directors). Faculty helped at-risk students develop individualized study plans tailored to each student's learning style, with focused study in weaker knowledge areas. In addition to completing the online fmCASES, study plan options included question banks, case-based learning textbooks, directed topic reading, and online video review. The clerkship director offered optional assistance to all clerkship students.
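The 20th-percentile rule above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the procedure the clerkship actually ran: the function name `flag_at_risk`, the dictionary of student IDs, and the use of the `"inclusive"` method of Python's `statistics.quantiles` are all assumptions, because the text does not say how the percentile was computed. The scores in the example are hypothetical, not study data.

```python
from statistics import quantiles


def flag_at_risk(scores: dict[str, float]) -> list[str]:
    """Return the IDs of students whose pretest score is below the group's
    20th percentile, sorted alphabetically (hypothetical helper)."""
    # quantiles(..., n=5) returns the 20th, 40th, 60th, and 80th percentiles.
    # method="inclusive" is an assumption; the source does not specify one.
    p20 = quantiles(scores.values(), n=5, method="inclusive")[0]
    return sorted(sid for sid, score in scores.items() if score < p20)


# Hypothetical pretest percentages for 11 students (not study data).
example = {f"s{i:02d}": 40 + 5 * i for i in range(11)}
print(flag_at_risk(example))  # s00 (40%) and s01 (45%) fall below 50.0
```

In small groups, different percentile conventions (inclusive or exclusive interpolation, or a given statistics package's default) can shift the cutoff slightly, so the convention is worth stating whenever this rule is reused.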

We calculated the overall NBME failure rate for the control and intervention groups. In the intervention group, we also examined the correlation between individual pretest and NBME scores. Chi-square analysis, multiple linear regression, Pearson correlation, and receiver operating characteristic (ROC) curve analysis were used to evaluate the effectiveness of the intervention.

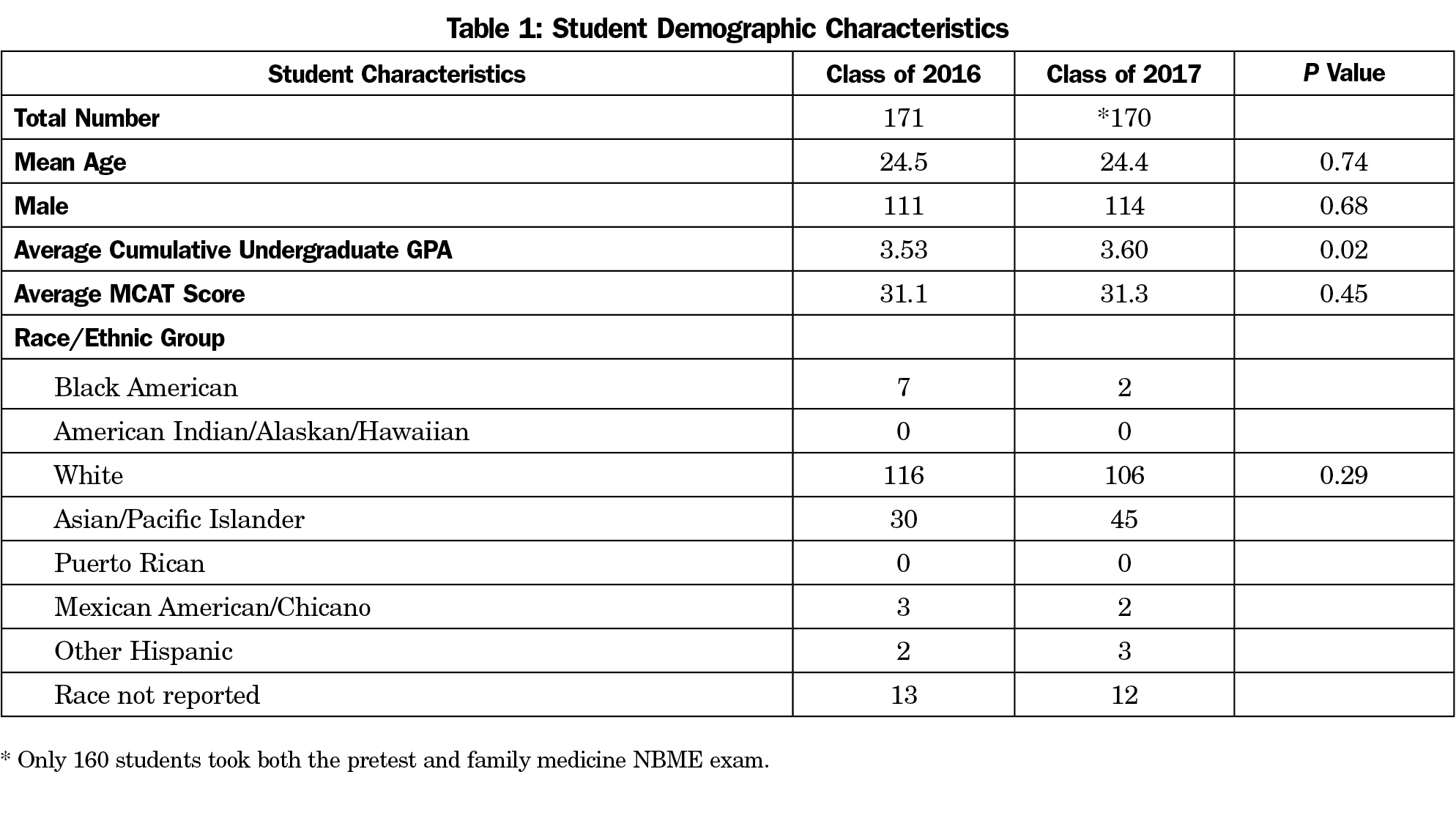

Demographic data for the control and intervention groups are shown in Table 1. The family medicine clerkship was completed by 171 students in the class of 2016; this control group did not take the pretest. The intervention group (Co17) had a significantly higher undergraduate GPA, but age and MCAT scores did not differ significantly between the groups.

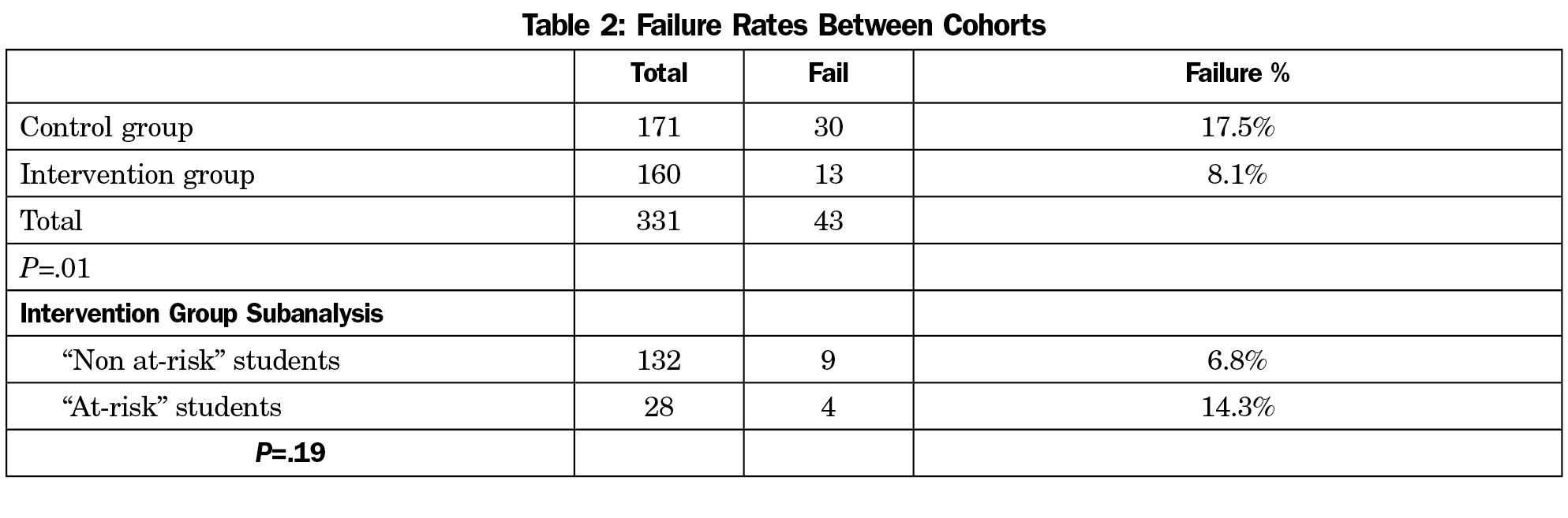

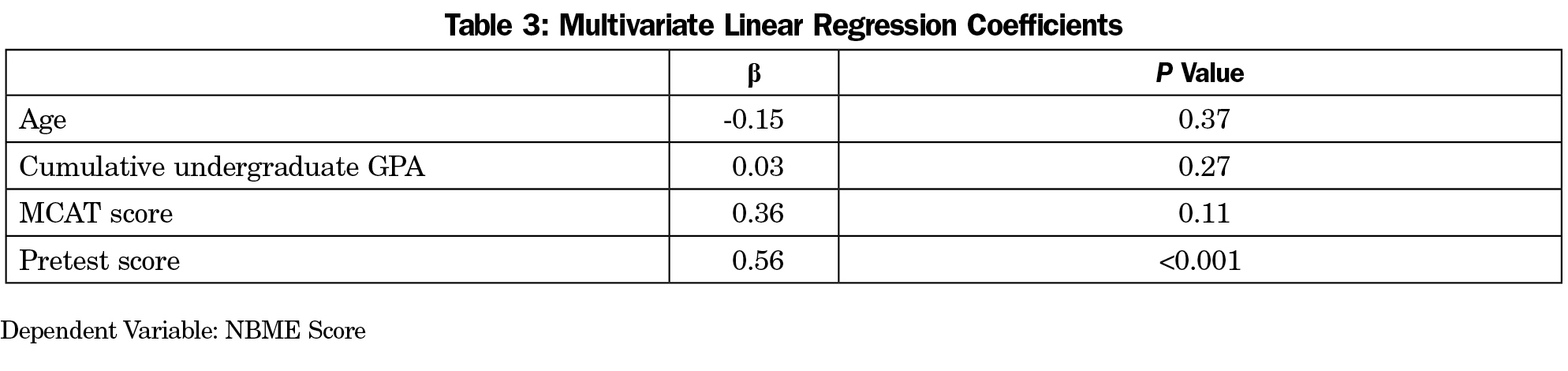

In the control group, 30 of 171 students (17.5%) failed the family medicine NBME examination on the first attempt. In the intervention group, 160 students from the class of 2017 took the fmCASES pretest and completed the entire family medicine clerkship; 13 of these students (8.1%) failed the family medicine NBME examination on the first attempt. Chi-square analysis indicated a significant difference in pass rates between the two groups (P=0.01; Table 2). Multiple regression analysis was used to test whether the pretest score predicted the NBME score when controlling for demographic factors (Table 3). The pretest score significantly predicted NBME score (β=.56, P<0.001), whereas age, undergraduate GPA, and MCAT score did not.
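As a check on the arithmetic, the first-attempt failure counts reported above (30 of 171 in the control group, 13 of 160 in the intervention group) can be arranged as a 2×2 table and tested with a Pearson chi-square test. The sketch assumes the test was run without Yates' continuity correction, which the text does not specify; under that assumption the statistic is about 6.49 with 1 degree of freedom and P ≈ 0.011, consistent with the reported P=0.01. The helper name `chi_square_2x2` is hypothetical.

```python
import math


def chi_square_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Pearson chi-square test (no continuity correction) for the 2x2 table
    [[a, b], [c, d]]; returns (statistic, p-value) with 1 degree of freedom."""
    n = a + b + c + d
    stat = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # For 1 degree of freedom, P(X > stat) = erfc(sqrt(stat / 2)).
    p = math.erfc(math.sqrt(stat / 2))
    return stat, p


# Rows: control (Co16) and intervention (Co17); columns: failed, passed.
control_fail, control_n = 30, 171
interv_fail, interv_n = 13, 160
stat, p = chi_square_2x2(control_fail, control_n - control_fail,
                         interv_fail, interv_n - interv_fail)
print(f"failure rates: {control_fail / control_n:.1%} vs {interv_fail / interv_n:.1%}")
print(f"chi-square = {stat:.2f}, P = {p:.3f}")
```

The closed-form tail probability for 1 degree of freedom avoids a SciPy dependency; where SciPy is available, `scipy.stats.chi2_contingency(table, correction=False)` should give the same statistic and P value.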

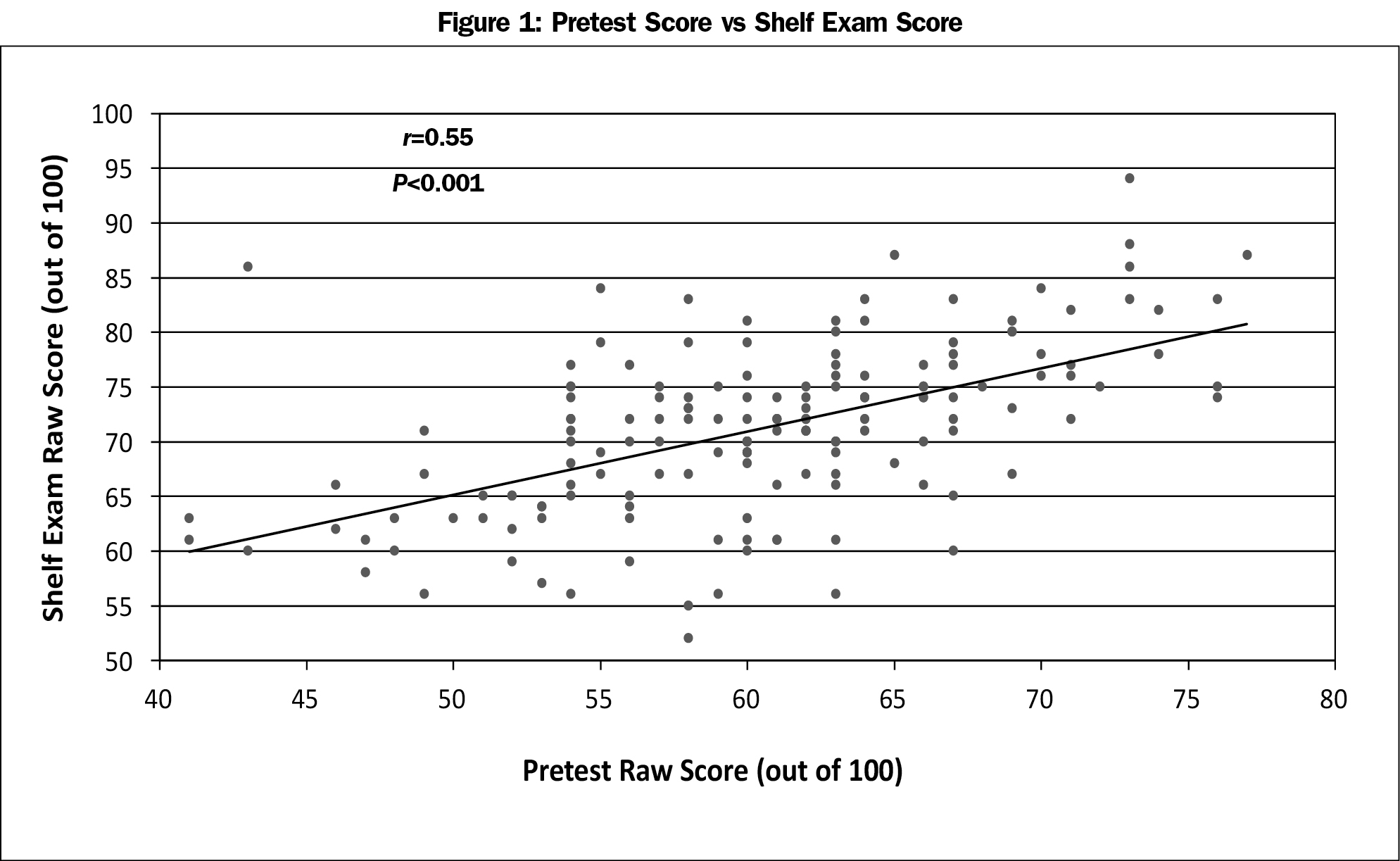

Bivariate Pearson correlation analysis of pretest and NBME scores yielded a correlation coefficient of 0.55 (P<0.001; Figure 1), indicating a statistically significant, moderate positive correlation between pretest scores and NBME examination scores.
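The correlation reported above can be illustrated with the standard sample Pearson formula and its significance test, t = r·sqrt(n - 2)/sqrt(1 - r²) with n - 2 degrees of freedom. The sketch assumes that all 160 intervention students contributed a pretest/NBME score pair, which the text implies but does not state; because the raw scores are not available here, only the test statistic for the reported r = 0.55 is computed. With those inputs t ≈ 8.28 on 158 degrees of freedom, well beyond the critical value for two-sided P<0.001. The function names are illustrative.

```python
import math


def pearson_r(x: list[float], y: list[float]) -> float:
    """Sample Pearson correlation coefficient between paired lists x and y."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    return sxy / math.sqrt(sxx * syy)


def t_for_r(r: float, n: int) -> float:
    """t statistic (n - 2 degrees of freedom) for testing H0: rho = 0."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2)


# Assumption: all 160 intervention students contribute a (pretest, NBME) pair.
t = t_for_r(0.55, 160)
print(f"t = {t:.2f} on {160 - 2} df")
```

On Python 3.10 or later, `statistics.correlation(x, y)` computes the same Pearson coefficient from raw paired scores.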

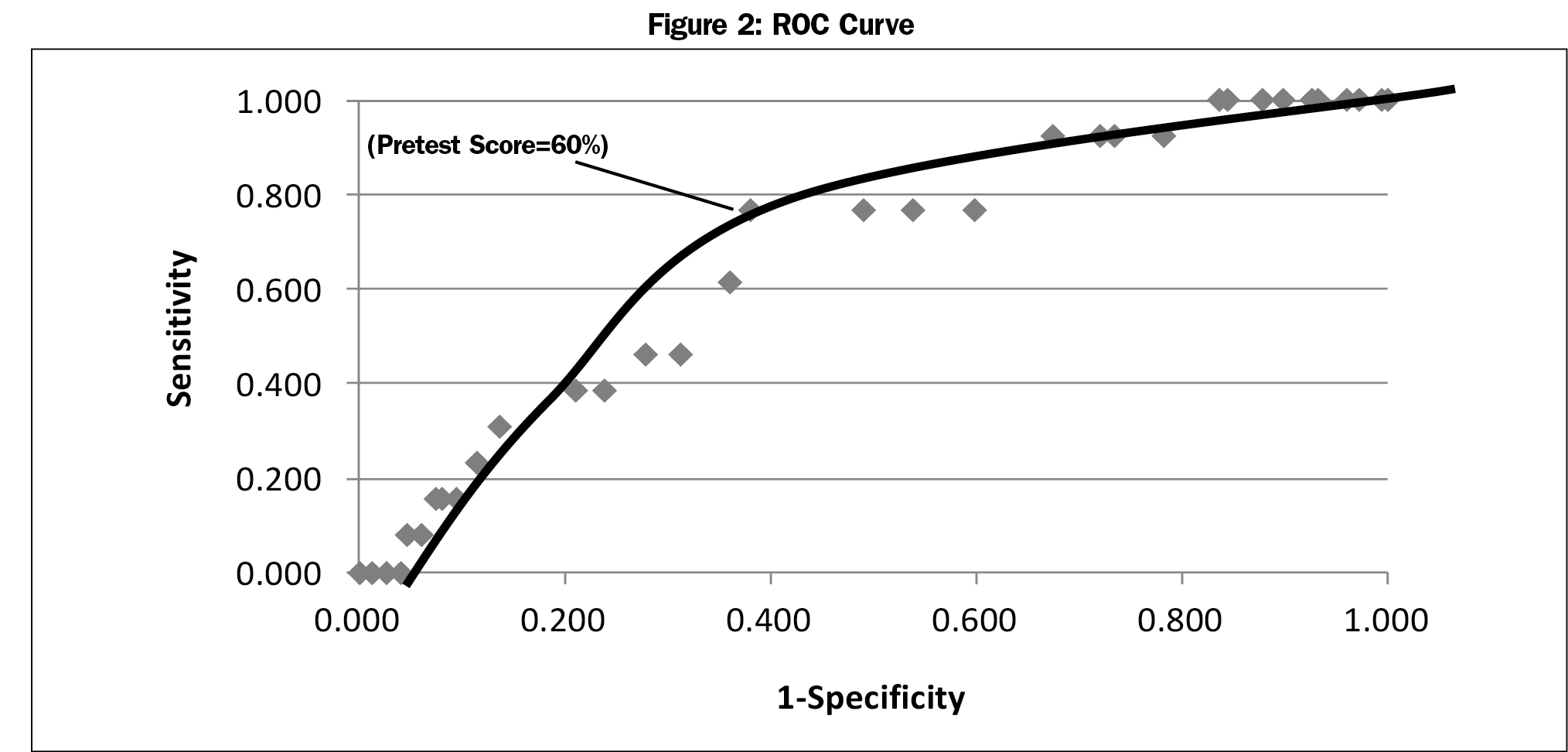

Receiver operating characteristic (ROC) curve analysis was used to identify the pretest score cutoff that best balanced sensitivity and specificity for predicting failure of the NBME examination. Pretest scores from 40% to 78% were selected as cutoff values, based on the range of scores achieved by the pretest group. Sensitivity and specificity were calculated for each cutoff value, and the results were plotted as an ROC curve. Figure 2 displays the ROC curve, with the optimal cutoff marked with an arrow. In our data, a pretest score cutoff of 60% optimized sensitivity and specificity for predicting NBME failure.
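The cutoff sweep described above can be sketched as follows. Two details are assumptions because the text does not state them: a student is flagged as likely to fail when the pretest score is strictly below the cutoff, and the "optimal" cutoff is taken as the one maximizing Youden's J (sensitivity + specificity - 1), one common way to balance the two measures. The function names and the ten pretest/outcome pairs are hypothetical, not study data.

```python
def sens_spec(pretest, failed, cutoff):
    """Sensitivity and specificity of the rule 'pretest < cutoff predicts
    NBME failure'. Assumes at least one student failed and one passed."""
    tp = fn = tn = fp = 0
    for score, did_fail in zip(pretest, failed):
        flagged = score < cutoff
        if did_fail:
            tp, fn = (tp + 1, fn) if flagged else (tp, fn + 1)
        else:
            fp, tn = (fp + 1, tn) if flagged else (fp, tn + 1)
    return tp / (tp + fn), tn / (tn + fp)


def best_cutoff(pretest, failed, cutoffs):
    """Return (cutoff, sensitivity, specificity) maximizing Youden's J.
    Ties are broken in favor of the first cutoff examined."""
    best = None
    for cutoff in cutoffs:
        sens, spec = sens_spec(pretest, failed, cutoff)
        j = sens + spec - 1
        if best is None or j > best[0]:
            best = (j, cutoff, sens, spec)
    return best[1], best[2], best[3]


# Hypothetical pretest percentages and first-attempt outcomes (True = failed).
pretest = [48, 52, 55, 58, 61, 63, 66, 70, 74, 77]
failed = [True, True, False, True, False, False, True, False, False, False]
# Sweep integer cutoffs from 40% to 78%, mirroring the range described above.
cutoff, sens, spec = best_cutoff(pretest, failed, range(40, 79))
print(cutoff, sens, round(spec, 2))
```

On this toy data the sweep selects a cutoff of 59 (sensitivity 0.75, specificity about 0.83). Plotting the pairs (1 - specificity, sensitivity) for every cutoff traces the ROC curve itself; with real data, the flag direction and the tie-breaking rule are worth reporting explicitly.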

The fmCASES National Examination, in conjunction with the case breakdown results report, served as a helpful formative assessment tool and guided individual NBME examination preparation in our student population. Our findings demonstrate that using this examination as a pretest, combined with study plan counseling, can decrease overall failure rates on the end-of-clerkship NBME examination for family medicine clerkship students. Additionally, we confirmed a moderate positive correlation between fmCASES pretest scores and end-of-clerkship NBME examination scores: in general, lower pretest scores were associated with lower NBME scores. Although the groups differed in undergraduate GPA, the pretest score was a significant predictor of NBME score after adjusting for age, MCAT score, and undergraduate GPA. Other institutions could use this correlation and our ROC analysis to help identify students most likely to fail the end-of-clerkship examination.

We theorize that the benefits of our pretest intervention are not solely attributable to the identification of knowledge gaps. General education theory suggests that testing produces better organization of knowledge and improves the transfer of knowledge to new contexts.13 We also theorize that completing the pretest served as a deliberate introduction to the clerkship course objectives, and that detailed awareness of these objectives helped students tailor their clinical encounters and study time to the subject areas most likely to appear on the final examination.

Implementing a formative assessment at the beginning of a clerkship can give students an explicit roadmap of the learning objectives and help them identify the content areas most in need of study. The fmCASES National Examination is helpful in this context and can be used to help students design individualized study plans.

Acknowledgments

The authors thank Cara Olsen, PhD, USU Biostatistician, for assistance with the statistical analysis on this project, and Christian Ledford, PhD, USU Department of Family Medicine, for assistance in preparing the manuscript.

Presentations: Presented at the Society of Teachers of Family Medicine Conference on Medical Student Education, February 9-12, 2017, Anaheim, CA.

Disclaimer: The views expressed herein are those of the authors and do not reflect the official policy of the Department of the Army, Department of Defense, or the US Government.

References

- Zahn CM, Saguil A, Artino AR Jr, et al. Correlation of National Board of Medical Examiners scores with United States Medical Licensing Examination Step 1 and Step 2 scores. Acad Med. 2012;87(10):1348-1354. https://doi.org/10.1097/ACM.0b013e31826a13bd.
- Dong T, Swygert KA, Durning SJ, et al. Is poor performance on NBME clinical subject examinations associated with a failing score on the USMLE step 3 examination? Acad Med. 2014;89(5):762-766. https://doi.org/10.1097/ACM.0000000000000222.
- Hiller K, Franzen D, Heitz C, Emery M, Poznanski S. Correlation of the National Board of Medical Examiners Emergency Medicine Advanced Clinical Examination Given in July to Intern American Board of Emergency Medicine in-training Examination Scores: A Predictor of Performance? West J Emerg Med. 2015;16(6):957-960. https://doi.org/10.5811/westjem.2015.9.27303.
- Hemmer PA, Markert RJ, Wood V. Using in-clerkship tests to identify students with insufficient knowledge and assessing the effect of counseling on final examination performance. Acad Med. 1999;74(1):73-75. https://doi.org/10.1097/00001888-199901001-00022.
- Constance E, Dawson B, Steward D, Schrage J, Schermerhorn G. Coaching students who fail and identifying students at risk for failing the National Board of Medical Examiners medicine subject test. Acad Med. 1994;69(10)(suppl):S69-S71. https://doi.org/10.1097/00001888-199410000-00046.
- Balch WR. Practice versus review exams and final exam performance. Teach Psychol. 1998;25(3):181-185. https://doi.org/10.1207/s15328023top2503_3.
- Zabrucky KM. Knowing what we know and do not know: educational and real world implications. Procedia Soc Behav Sci. 2010;2(2):1266-1269. https://doi.org/10.1016/j.sbspro.2010.03.185.
- Glenberg AM, Wilkinson AC, Epstein W. The illusion of knowing: failure in the self-assessment of comprehension. Mem Cognit. 1982;10(6):597-602. https://doi.org/10.3758/BF03202442.
- Glenberg AM, Sanocki T, Epstein W, Morris C. Enhancing calibration of comprehension. J Exp Psychol. 1987;116(2):119-136. https://doi.org/10.1037/0096-3445.116.2.119.
- Bjork EL, Soderstrom NC, Little JL. Can multiple-choice testing induce desirable difficulties? Evidence from the laboratory and the classroom. Am J Psychol. 2015;128(2):229-239. https://doi.org/10.5406/amerjpsyc.128.2.0229.
- Little JL, Bjork EL, Bjork RA, Angello G. Multiple-choice tests exonerated, at least of some charges: fostering test-induced learning and avoiding test-induced forgetting. Psychol Sci. 2012;23(11):1337-1344. https://doi.org/10.1177/0956797612443370.
- Grühn D, Cheng Y. A self-correcting approach to multiple-choice exams improves students’ learning. Teach Psychol. 2014;41(4):335-339. https://doi.org/10.1177/0098628314549706.
- Roediger HL III, Putnam AL, Smith MA. The benefits of testing and their applications to educational practice. Psychol Learn Motiv. 2011;55:1-36.
- Society of Teachers of Family Medicine. About the Family Medicine Clerkship Curriculum. http://www.stfm.org/Resources/ResourcesforMedicalSchools/STFMNationalClerkshipCurriculum/AbouttheFamilyMedicineClerkshipCurriculum. Accessed July 24, 2017.
- Leong SL. fmCASES: collaborative development of online cases to address educational needs. Ann Fam Med. 2009;7(4):374-375. https://doi.org/10.1370/afm.1028.
- MedU. Our Subscribers. http://www.med-u.org/about/our-subscribers. Accessed July 24, 2017.
- Sussman H. Does clerkship student performance on the NBME and fmCASES exams correlate? Poster presented at the Society of Teachers of Family Medicine Conference on Medical Student Education; February 2-5, 2012; Long Beach, CA.
- Chessman A, Svetlana C, Mainous A, et al. fmCASES National Exam: Correlations with Student Performance Across Eight Family Medicine Clerkships. Presented at the Society of Teachers of Family Medicine Conference on Medical Student Education; January 24-27, 2013; San Antonio, TX.
- Shokar GS, Burdine RL, Callaway M, Bulik RJ. Relating student performance on a family medicine clerkship with completion of Web cases. Fam Med. 2005;37(9):620-622.
- Demarco MP, Bream KD, Klusaritz HA, Margo K. Comparison of textbook to fmCases on family medicine clerkship exam performance. Fam Med. 2014;46(3):174-179.
