Background and Objectives: Dermatology is often overlooked and underemphasized in postgraduate primary care medical education. Dermatological educational resources are abundant, but there are no clear guidelines on how best to take advantage of them. The objective of this study was to develop a dermatology digital tool kit designed to help residents describe, evaluate, recognize, and manage (DERM) common dermatological conditions in primary care residency education and to evaluate potential improvement in clinical confidence.

Methods: A total of 14 family medicine (FM) and 33 internal medicine (IM) residents were given the DERM tool kit to complete over 7 weeks. Effects on residents’ self-reported comfort with dermatology and resources used were measured by voluntary anonymous surveys distributed before and after DERM completion.

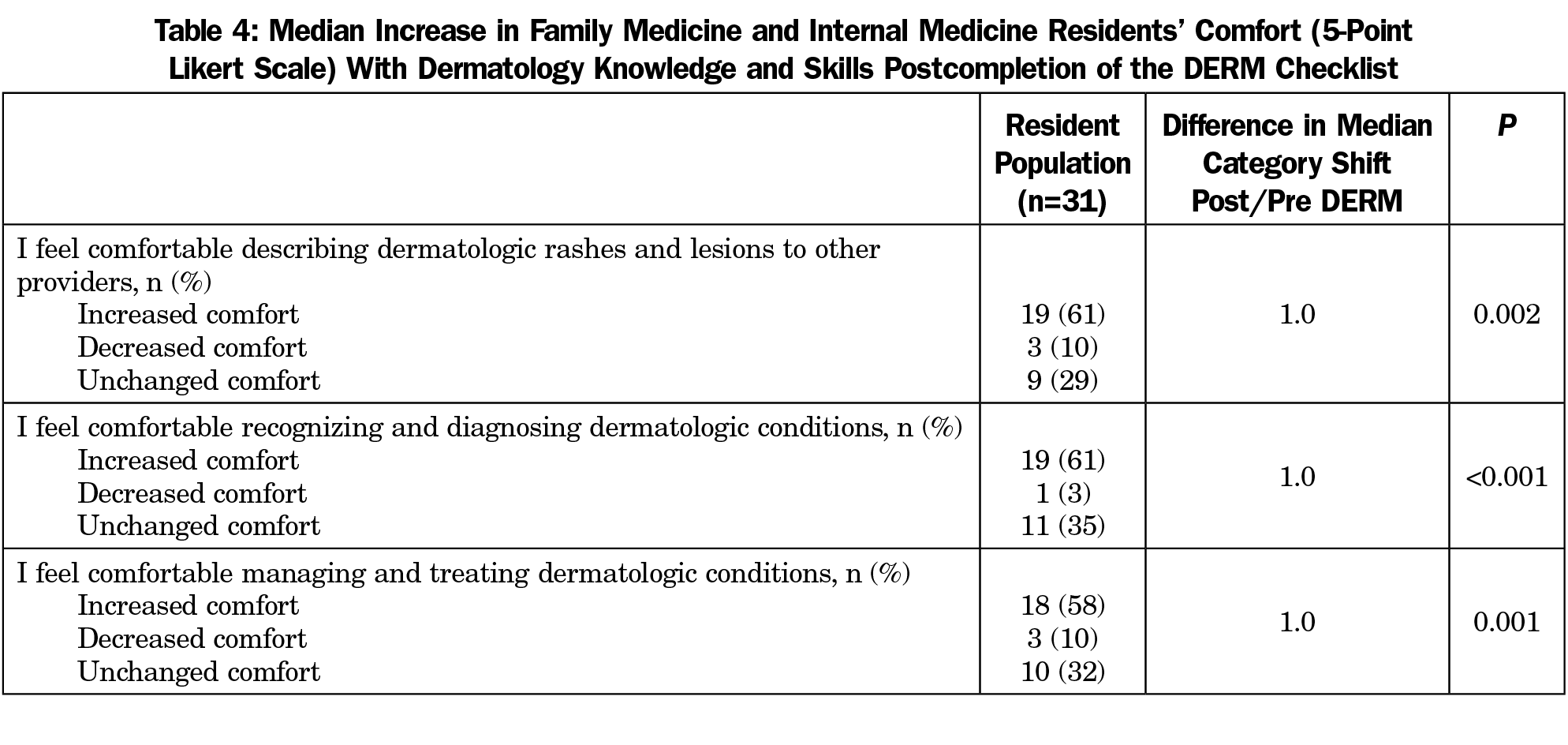

Results: A response rate of 100% (14/14) for FM residents and 52% (17/33) for IM residents was achieved. The majority of residents (61%) recalled minimal dermatology education—less than 2 weeks—in medical school and 71% agreed that there is not enough dermatology in their residency curriculum. A statistically significant increase in resident comfort with describing (P=0.002), recognizing and diagnosing (P<0.001), and managing (P=0.001) dermatologic conditions was observed postcompletion. Residents reported they would recommend this tool to other primary care residents.

Conclusions: Implementing the DERM digital tool kit is feasible with primary care residents and appears to improve comfort with describing, recognizing and diagnosing, and managing dermatologic conditions.

With the high demand for efficiently managing skin conditions and the limited supply of dermatologists, it is advantageous to maximize efforts in training primary care residents. During a 2-year period, 36.5% of patients will present to their primary care physician with at least one skin complaint, and 58.7% of these patients will list a skin concern as their chief complaint.1 Despite this high prevalence of skin-related problems, nondermatologists correctly diagnose only 52% of conditions, compared with 93% for dermatologists.2 Approximately one-half of medical schools require 10 or fewer hours of dermatology instruction, and 8% do not require any at all.3 Currently, the Accreditation Council for Graduate Medical Education (ACGME) sets no dermatology requirement for internal medicine (IM) training, and the requirement for family medicine (FM) states, “Residents must have experience in diagnosing and managing common dermatologic conditions.”4 This amount of discretion can lead to significant variability in requirements across individual programs.

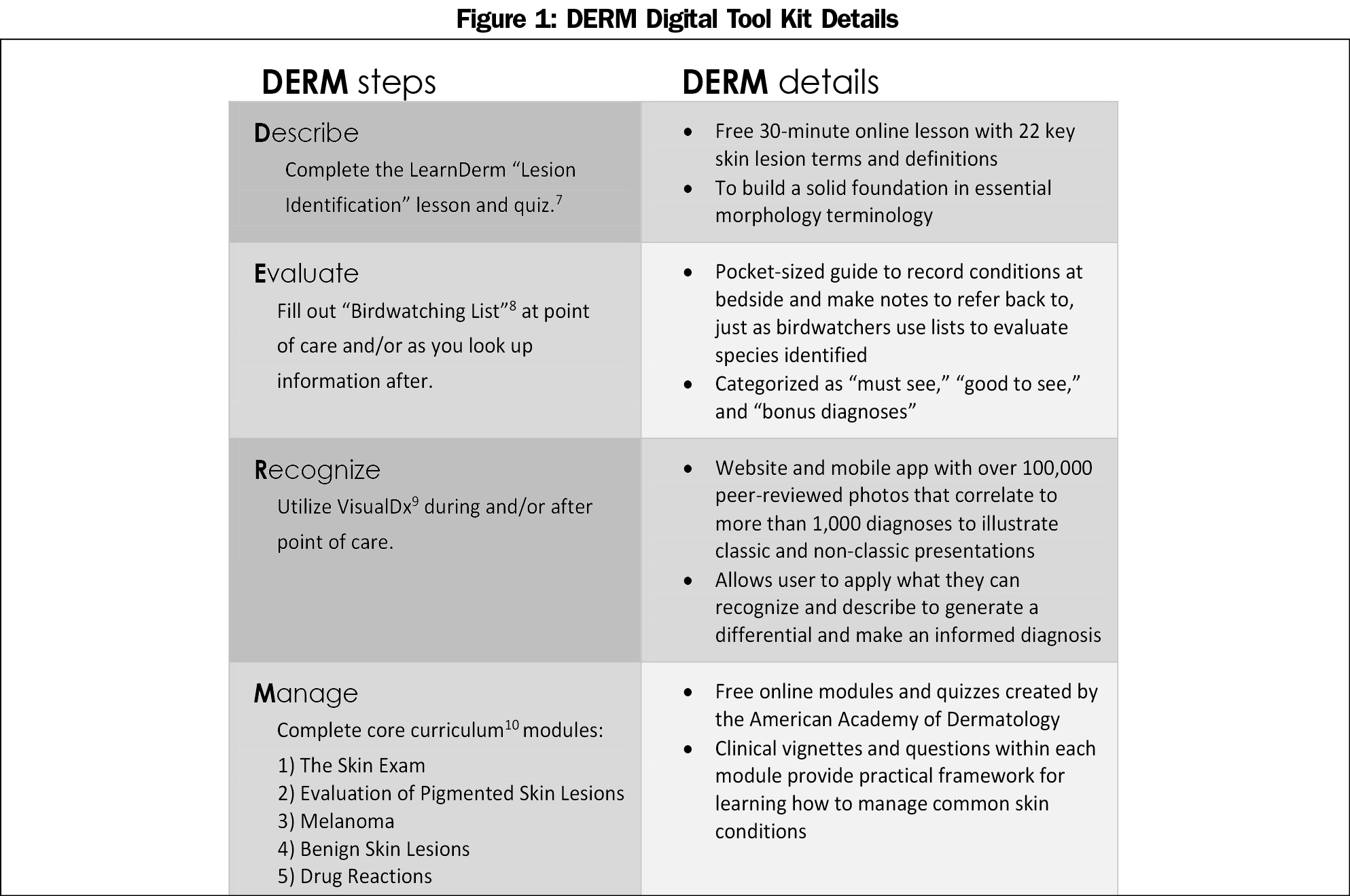

Supplemental resources to learn dermatology inside and outside of the clinic are plentiful.3,5,6 What is lacking, however, is a clear and succinct set of recommendations for nondermatology residents on how to maximize these resources. For these purposes, the authors have consolidated a list of recommendations for primary care residents to encompass four dermatological competencies: describe, evaluate, recognize, and manage (DERM).

A prospective observational survey study of FM and IM residency programs was conducted with approval from the institutional review board, and a waiver of consent was granted. Residents were recruited by presenting the details of the study to the residency programs during morning report.

A pre/postsurvey design was employed. Residents completed a presurvey, were given 7 weeks to use the DERM digital tool kit (Figure 1), and then completed a postsurvey. Nonrespondents and individuals who completed only one of the two surveys were excluded. DERM was developed from reputable self-study resources that were engaging, interactive, and targeted to the four competencies.7-9, 12

Perceived dermatology experience, comfort level, and resources used were measured by the presurvey. Utilization and perceived usefulness of the tool kit were assessed in the postsurvey. Survey responses relating to comfort levels with dermatology were rated on a 5-point Likert scale (strongly disagree, disagree, neither agree nor disagree, agree, strongly agree) and frequency of VisualDx use on a 4-point Likert scale (almost never, sometimes, often, almost always); these responses were summarized with descriptive statistics and reported as mean and standard deviation. Differences between residency groups were analyzed by t-test. Categorical responses were reported as frequency (%), with differences between residency groups analyzed by chi-square or Fisher’s exact test as appropriate. Average pre/postsurvey results were compared within residency groups and within the total population by paired t-test. The Wilcoxon signed rank test was used to analyze pre- versus post-Likert survey responses. Statistics were performed using IBM SPSS Version 24.0. The level of significance was set at P<0.05.
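For readers unfamiliar with the Wilcoxon signed rank test, the following is a minimal Python sketch of how paired pre/post Likert responses can be compared. It is an illustration only, not the SPSS procedure used in this study: the function name `wilcoxon_signed_rank`, the choice of a normal approximation with a tie correction, and the `pre` and `post` scores are all assumptions made for the example, and the scores are hypothetical rather than study data.

```python
from math import sqrt
from statistics import NormalDist


def wilcoxon_signed_rank(pre, post):
    """Two-sided Wilcoxon signed rank test (normal approximation, tie-corrected)."""
    # Paired differences; zero differences carry no sign and are dropped.
    diffs = [b - a for a, b in zip(pre, post) if b != a]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")

    # Rank the absolute differences, giving tied values their average rank.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        t = j - i + 1
        tie_term += t**3 - t
        i = j + 1

    # Sum the ranks of positive and negative differences separately.
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)

    # Mean and tie-corrected variance of W+ under the null hypothesis.
    mean = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean) / sqrt(var)
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return w_plus, w_minus, z, p


# Hypothetical 5-point Likert comfort scores for 10 residents (not study data).
pre = [2, 2, 3, 2, 3, 2, 1, 3, 2, 2]
post = [3, 3, 4, 3, 3, 4, 2, 4, 3, 2]

w_plus, w_minus, z, p = wilcoxon_signed_rank(pre, post)
print(f"W+ = {w_plus}, W- = {w_minus}, z = {z:.3f}, P = {p:.4f}")
```

Because Likert responses are ordinal and heavily tied, a rank-based paired test of this kind is a common companion to the paired t-test; statistical packages may differ in how they handle ties, zero differences, and exact versus approximate P values.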

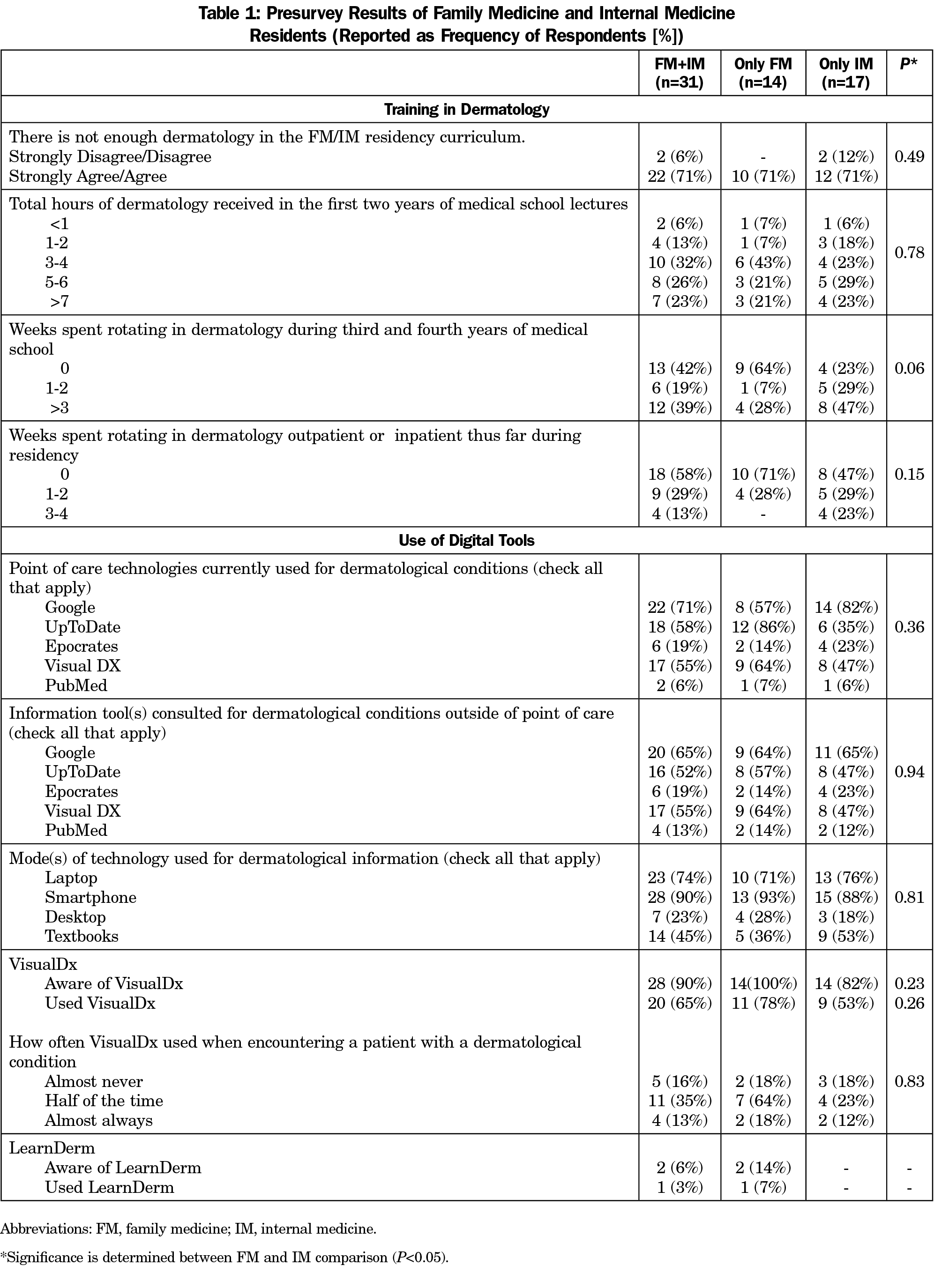

A response rate of 100% for FM residents and 52% for IM residents was achieved, yielding an overall response rate of 66%. Forty-two percent of residents did not spend any time rotating in dermatology during medical school, 77% reported 6 or fewer hours of dermatology lectures in medical school, and on average residents agreed that there is not enough dermatology training in their residency curriculum (3.8±1.0, Table 1). Smartphones were reported as the most common source of dermatology information (90%), with Google as the most heavily accessed resource (65% to 74%). Most residents were aware of VisualDx (90%) and had used it (65%). In contrast, only 6% were aware of LearnDerm and only 3% had used it prior to this study.
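The response rates above follow directly from the counts given in the abstract (14 of 14 FM residents and 17 of 33 IM residents). The short Python check below reproduces the arithmetic; the helper name `response_rate` is introduced here only for illustration.

```python
def response_rate(responded, invited):
    """Response rate as a whole-number percentage."""
    return round(100 * responded / invited)


fm_rate = response_rate(14, 14)                 # FM residents
im_rate = response_rate(17, 33)                 # IM residents
overall_rate = response_rate(14 + 17, 14 + 33)  # 31 of 47 residents overall

print(fm_rate, im_rate, overall_rate)
```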

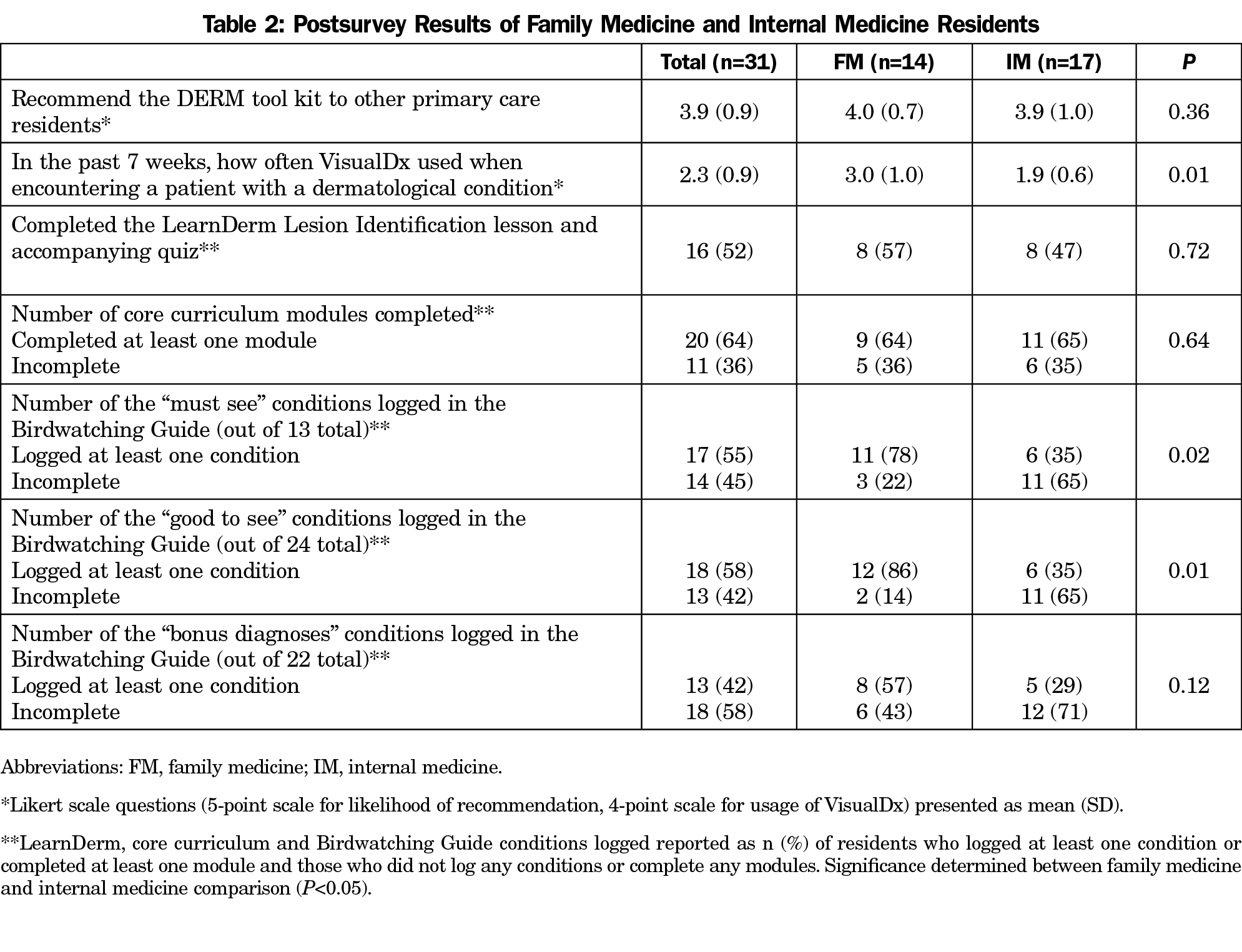

Fifty-two percent reported completing the LearnDerm lesson, 64% reported completing at least one core curriculum module, and on average residents reported using VisualDx “often” during the preceding 7 weeks (2.3±0.9, Table 2). Fifty-five percent logged at least one “Must See” condition in the Birdwatching Guide, 58% logged at least one “Good to See” condition, and 42% logged at least one “Bonus Diagnoses” condition. Across the pre- and postsurveys, the significant differences between FM and IM responses were that FM residents were more likely than IM residents to log at least one “Must See” condition (78% vs 35%) and at least one “Good to See” condition (86% vs 35%) in the Birdwatching Guide. On average, residents agreed that they would recommend the DERM tool kit to other primary care residents (3.9±0.9).

Post-DERM, there was a statistically significant increase in self-reported resident comfort with describing, recognizing, diagnosing, managing, and treating dermatologic conditions for both FM and IM residents (Table 3). Comfort level increased an average of one point on the Likert scale for each of the competency categories (Table 4).

Primary care physicians are challenged with integrating both primary and specialty care, which necessitates well-rounded and comprehensive training that can be difficult to accomplish in the standard 3-year residency. Our data support previous findings that there is minimal dermatology education in medical school and residency.3 Residents were more familiar with some items in the digital tool kit than others at baseline, allowing for the introduction of new resources and new ways to utilize the accustomed ones. The lack of significant differences between FM and IM responses suggests that the two groups shared similar inexperience with dermatology prior to the study, similar DERM compliance rates, and similar views on the utility of DERM prior to completion.

Study limitations include small sample size, recall bias, a single training site, and the fact that many of the residents had not yet spent any time rotating in dermatology as residents. Furthermore, while the residents self-reported improvement in their comfort with dermatology following the program, there was no objective evaluation of whether diagnostic or management skills improved. It would be of value to administer a pre- and posttest of the ability to recognize and correctly manage dermatologic lesions. Future study will focus on long-term recall effects of the intervention in the same resident cohort. Despite these limitations, the DERM digital tool kit is a feasible tool to meaningfully enhance comfort with dermatology knowledge and skills.

Acknowledgments

This work was presented as a poster on April 27, 2017 in Cleveland, Ohio at Case Western Reserve University’s Innovations in Medical Education retreat, where it received first place in the poster competition. It was also presented at the Northeast Ohio Medical University Scholarship Day on May 24, 2017.

References

1. Lowell BA, Froelich CW, Federman DG, Kirsner RS. Dermatology in primary care: prevalence and patient disposition. J Am Acad Dermatol. 2001;45(2):250-255. https://doi.org/10.1067/mjd.2001.114598

2. Federman DG, Concato J, Kirsner RS. Comparison of dermatologic diagnoses by primary care practitioners and dermatologists. A review of the literature. Arch Fam Med. 1999;8(2):170-172. https://doi.org/10.1001/archfami.8.2.170

3. McCleskey PE, Gilson RT, DeVillez RL. Medical student core curriculum in dermatology survey. J Am Acad Dermatol. 2009;61(1):30-35.e4. https://doi.org/10.1016/j.jaad.2008.10.066

4. Accreditation Council for Graduate Medical Education. ACGME Program Requirements for Graduate Medical Education in Family Medicine. https://www.acgme.org/Portals/0/PFAssets/ProgramRequirements/120_family_medicine_2016.pdf. Accessed April 12, 2017.

5. Brewer AC, Endly DC, Henley J, et al. Mobile applications in dermatology. JAMA Dermatol. 2013;149(11):1300-1304. https://doi.org/10.1001/jamadermatol.2013.5517

6. Goulart JM, Quigley EA, Dusza S, et al; INFORMED (INternet curriculum FOR Melanoma Early Detection) Group. Skin cancer education for primary care physicians: a systematic review of published evaluated interventions. J Gen Intern Med. 2011;26(9):1027-1035. https://doi.org/10.1007/s11606-011-1692-y

7. LearnDerm by VisualDx. Lesion Identification. https://www.visualdx.com/learnderm/lesson-2. Accessed April 12, 2017.

8. Patadia DD, Mostow EN. Dermatology elective curriculum: birdwatching list and travel guide. Dermatol Online J. 2011;17(6):1.

9. VisualDx. (2016). Apple iOS. (Version 7.0.4.77) [Mobile application software]. Retrieved from https://www.visualdx.com. Accessed April 18, 2017.

10. American Academy of Dermatology. Medical student core curriculum. http://www.aad.org/education-and-quality-care/medical-student-core-curriculum. Accessed April 12, 2017.

11. McCleskey PE. Clinic teaching made easy: a prospective study of the American Academy of Dermatology core curriculum in primary care learners. J Am Acad Dermatol. 2013;69(2):273-279. https://doi.org/10.1016/j.jaad.2012.12.955

12. Awadalla F, Rosenbaum DA, Camacho F, Fleischer AB Jr, Feldman SR. Dermatologic disease in family medicine. Fam Med. 2008;40(7):507-511.
