Background and Objectives: The I3 POP Collaborative sought to improve health of patients attending North Carolina, South Carolina, and Virginia primary care teaching practices using the triple aim framework of better quality, appropriate utilization, and enhanced patient experience. We examined change in triple aim measures over 3 years, and identified correlates of improvement.

Methods: Twenty-nine teaching practices representing 23 residency programs participated. The Institute for Health Care Improvement Breakthrough Series Collaborative model was tailored to focus on at least one triple aim goal, and programs submitted data annually on all collaborative measures. Outcome measures included quality (chronic illness, prevention), utilization (hospitalization, emergency department visits, referrals), and patient experience (access, continuity). Participant interviews explored supports and barriers to improvement.

Results: Six of 29 practices (21%) were unable to extract measures from their electronic health records (EHRs). All of the remaining 23 practices reported improvement in at least one measure, with 11 seeing at least 10% improvement; seven (24%) improved measures in all three triple aim areas, with two experiencing at least 10% improvement. Practices with a greater number of patient visits were more likely to show improved measures (odds ratio [OR] 10.8, 95% confidence interval [CI]: 0.68–172.2, P=0.03). Practice interviews revealed that engaged leadership and systems supports were more common in higher performing practices.

Conclusions: Simultaneous attainment of improvement in all three triple aim goals by teaching practices is difficult. I3 POP practices that were able to pull and report data improved on at least one measure. Future work needs to focus on cultivating leadership and systems supporting large scale improvement.

According to leading health care experts, the US health care system needs to address the triple aim of improving the patient experience of care, improving the health of populations, and reducing unnecessary costs.1 Several value-based payment models are underway, including Next Generation Accountable Care Organizations (ACOs), alternative payment models, and the Merit-based Incentive Payment System (MIPS) under the Medicare Access and CHIP Reauthorization Act (MACRA).2,3 These programs specifically include quality, cost, and patient experience, and are directly impacted by primary care. These models currently target practicing clinicians; however, future primary care physicians must also be trained so that they are prepared to meet triple aim goals in their own practices. In response, faculty development initiatives are examining the transformation of primary care residency training and the clinical practice setting.4,5

With the changing landscape of primary care delivery, knowledge and skills in assessing and impacting population health management, improving processes of care in practice settings, and managing change are critical for physicians. The necessary process of measuring and improving quality in academic medical center environments, however, can prove challenging given academia’s tripartite mission of research, teaching, and patient care.6,7 Nonetheless, the powerful forces of declining reimbursement rates, emergence of narrow networks in health exchanges, and enhanced focus on medical costs and reputation require greater transparency and accountability for cost, satisfaction, and health outcomes.8

In 2005, the I3 Collaborative was chartered to address ways to improve chronic disease care in family medicine residencies5 and subsequently expanded to address patient-centered medical home recognition in family medicine, internal medicine, and pediatrics teaching practices.9 Given the success of these initial two collaborative cohorts, we focused a third phase of the I3 collaborative (I3 POP) on improving care of populations served by primary care teaching practices in North Carolina, South Carolina, and Virginia in three broad areas (the triple aim): care quality, appropriate utilization, and experience of care. Three years into the collaborative, we examine change in triple aim measures and identify factors associated with improvement.

Setting

Planning for the I3 POP Collaborative, including obtaining baseline measures, began in July 2012, with 29 teaching practices that were associated with 23 primary care residencies (family medicine, internal medicine, and pediatrics) in North Carolina, South Carolina, and Virginia. Participating programs began active work in the collaborative in April 2013 and concluded in October 2015. The collaborative utilized a tailored version of the Institute for Health Care Improvement Breakthrough Series Collaborative model to develop interprofessional teams that would work together using the Model for Improvement to address triple aim goals.10 A core belief of the I3 POP Collaborative is that the practice is the curriculum for ambulatory continuity practice in primary care teaching settings; the curricular emphasis was on engagement of both faculty and residents in learning while doing the work of improving care. This was accomplished by: (1) having semiannual face-to-face learning sessions; (2) having monthly webinars on each of the three thread focus areas in which residents, faculty, and staff participated as members of quality improvement (QI) teams within their teaching practices; (3) provision of supporting references and resources for each topic; and (4) reporting of data that each site could use to assess progress and reestablish new plans for subsequent plan-do-study-act (PDSA) cycles. Each participating teaching practice submitted data on collaborative-wide triple aim metrics annually and also focused on one or more triple aim goals. Further details can be found in Donahue et al.11 Baseline data and initial assessment protocols were approved by the University of North Carolina Institutional Review Board. Data shared among the collaborative were exempted from review, and contained no personal identifiers.

Outcome Measures

The primary collaborative outcomes comprise a set of core measures representing each arm of the triple aim. Core measures, selected using a consensus process at the beginning of the collaborative, were aligned with national measures and collected at baseline and annually. Quality measures fell into the following categories: chronic disease (diabetes–5 measures, congestive heart failure–2 measures, hypertension control, asthma–4 measures, obesity–3 measures, ADHD–3 measures) and prevention (tobacco use–2 measures, breast and colorectal cancer screening, adult influenza immunization, and pediatric immunization). We decided to operationalize cost reduction by focusing on key drivers of utilization, including hospitalizations, emergency department (ED) visits, readmissions, subspecialty referrals and high-end radiology (CT, MRI, PET scans). Given the variation in measures of patient experience across collaborative practices, we decided to measure two known drivers of patient experience: continuity of care (as measured by usual provider continuity rates) and access to care (measured by time to third next available appointment).12 The full measure set is described in an earlier paper.11 At each reporting period, practices were asked to report two sets of chronic illness measures and one prevention measure set for quality, both patient experience measures, and all five cost/utilization measures. Each program was allowed to select one or more of the three “threads” (chronic disease, utilization, or patient experience) on which to focus their improvement efforts during the collaborative. Not all practices were able to report all measures at each reporting period, so we treated each reporting period as an open cohort. In each time period we calculated the median for each measure to assess overall change. 
As in prior I3 collaboratives, methods to promote care improvement included sharing data, face-to-face learning session meetings every 6 months, and monthly webinars featuring best practices for each component of the triple aim.
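
The open-cohort summary described above (a median per measure in each reporting period, taken over whichever practices reported that period) can be sketched as follows. This is a minimal illustration, not the collaborative's actual code; the practice names, period labels, and values are hypothetical.

```python
from statistics import median

# Hypothetical reports for one measure (percent): practice -> {period: value}.
# A missing period means the practice did not report the measure at that time.
reports = {
    "practice_a": {"baseline": 62.0, "year1": 65.0, "year2": 70.0},
    "practice_b": {"baseline": 55.0, "year2": 58.0},
    "practice_c": {"year1": 71.0, "year2": 74.0},
}


def period_medians(reports):
    """Median per period over the practices that reported in that period."""
    by_period = {}
    for values in reports.values():
        for period, value in values.items():
            by_period.setdefault(period, []).append(value)
    return {period: median(vals) for period, vals in by_period.items()}


medians = period_medians(reports)
```

Because membership differs between periods, a change in the median can reflect a change in which practices reported as well as a change in performance.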

Process Measures

We constructed an improvement score and collaborative engagement score for each participating practice. The improvement score was the total number of improvements over baseline for all measures reported. The collaborative engagement score for each practice reflected reporting of core measures data, attendance at semiannual learning sessions, and participation in monthly thread webinars. For each measure, we identified “bright spots”—practices achieving 10% or greater improvement over the 3-year collaborative period. This threshold was felt to be clinically significant by the collaborative leadership.
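
The improvement score and bright spot rule can be sketched as below. The text does not state whether the 10% threshold is relative or absolute, nor how measures where lower is better were handled; this sketch assumes a relative change from baseline and a hypothetical set of lower-is-better measure names. All measure names and values are illustrative.

```python
# Assumption: measures for which a decrease counts as improvement.
LOWER_IS_BETTER = {"ed_visits", "hospitalizations", "days_to_third_next_available"}


def relative_improvement(measure, baseline, latest):
    """Change in the favorable direction, as a fraction of baseline."""
    if baseline == 0:
        return 0.0
    change = (latest - baseline) / baseline
    return -change if measure in LOWER_IS_BETTER else change


def improvement_score(baseline, latest):
    """Count of measures reported at both times that moved favorably."""
    shared = baseline.keys() & latest.keys()
    return sum(relative_improvement(m, baseline[m], latest[m]) > 0 for m in shared)


def bright_spots(baseline, latest, threshold=0.10):
    """Measures with at least `threshold` relative improvement (assumed rule)."""
    shared = baseline.keys() & latest.keys()
    return sorted(
        m for m in shared
        if relative_improvement(m, baseline[m], latest[m]) >= threshold
    )


# Hypothetical data for one practice; mammography was not reported at year 3.
baseline = {"a1c_control": 60.0, "ed_visits": 20.0, "continuity": 50.0, "mammography": 70.0}
year3 = {"a1c_control": 63.0, "ed_visits": 17.0, "continuity": 48.0}

score = improvement_score(baseline, year3)
spots = bright_spots(baseline, year3)
```

Only measures reported at both time points contribute, so a practice that reports fewer measures has fewer opportunities to score.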

Analyses

Measures were reported as percentages, except access (days to third next available appointment) which was reported as the average number of days for each practice. Because of the sparseness and variability of our data we used nonparametric statistical tests. We compared matched pairs of observations for each measure in each time period using the sign test. We used the χ2 test for trend to assess each measure for improvement over baseline across all time periods. We calculated odds ratios with 95% confidence intervals to compare programs whose improvement scores were above the collaborative median to those who scored at or below the median on a variety of characteristics, including university vs nonuniversity department, PCMH recognition, nationally-certified electronic health record (EHR) in transition, faculty member involved in data management, registry use, collaborative engagement, number of physician providers, patient age group (less than 65 years vs 65 years and older), and insurance status, as well as number of active patients and annual visits. Stata 10.1 software was used for statistical analyses.13
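
Two of the tests named above can be sketched in plain Python. The analyses were run in Stata, so this is only an illustration: the exact two-sided sign test drops ties, and the confidence interval uses the Woolf (logit) method, which is an assumption because the text does not say which interval method was used. All counts and pairs are hypothetical.

```python
from math import comb, exp, log, sqrt


def sign_test(pairs):
    """Exact two-sided sign test on (before, after) pairs; ties are dropped."""
    pos = sum(1 for before, after in pairs if after > before)
    neg = sum(1 for before, after in pairs if after < before)
    n = pos + neg
    if n == 0:
        return 1.0
    k = min(pos, neg)
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


def odds_ratio_ci(a, b, c, d, z=1.96):
    """Odds ratio with a Woolf (logit) 95% CI for a 2x2 table.

    a, b: above / at-or-below median improvement with the characteristic
    c, d: above / at-or-below median improvement without it
    Adds 0.5 to every cell if any cell is zero (Haldane-Anscombe correction).
    """
    if 0 in (a, b, c, d):
        a, b, c, d = (x + 0.5 for x in (a, b, c, d))
    estimate = (a * d) / (b * c)
    se = sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    return estimate, exp(log(estimate) - z * se), exp(log(estimate) + z * se)


# Hypothetical matched pairs for one measure (baseline, follow-up).
pairs = [(50, 55), (60, 62), (70, 69), (40, 48), (55, 55), (65, 70)]
p_value = sign_test(pairs)

# Hypothetical 2x2 counts for one practice characteristic.
estimate, lower, upper = odds_ratio_ci(8, 3, 4, 8)
```

With cell counts this small the interval is wide, which is typical when only a couple of dozen practices are compared.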

We then shared the individual practice data with participating programs to further explore supports and barriers to improvement. Interview questions included: “Does the data accurately reflect your participation in the I3 POP Collaborative?”; “What were the key facilitators and challenges for your practice program when implementing your I3 related practice goals?”; “What aspects of the triple aim did your practice find easiest to address?”; and “Which were most difficult?” Responses to each question were tabulated and coded for common themes by one of the authors using the method of constant comparison.14 Another author reviewed coded responses for validity and consistency.

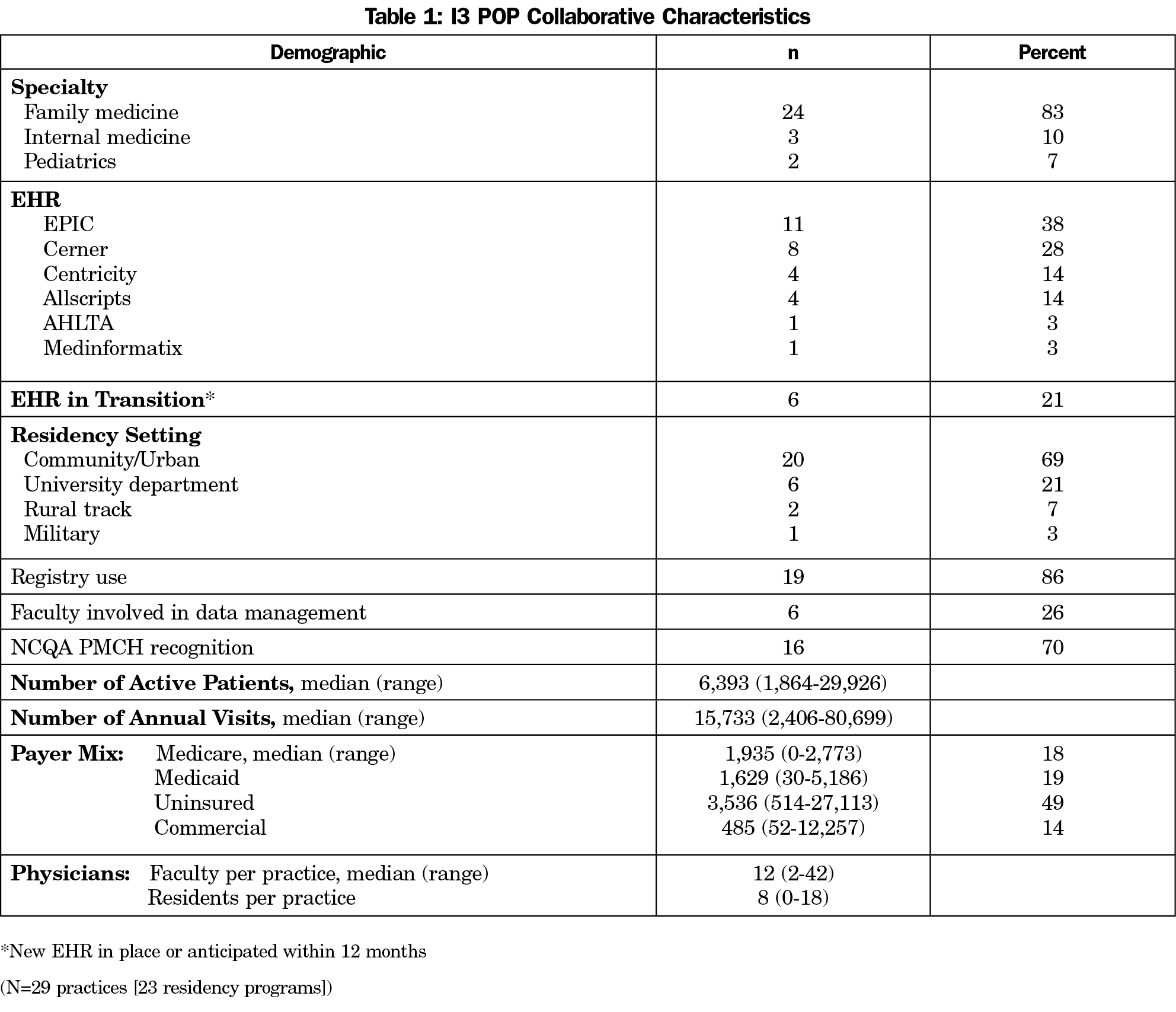

A total of 24 family medicine, three internal medicine, and two pediatric practices, representing 23 distinct residency training programs, participated in I3 POP (Table 1). These practices utilized six different electronic health records (EHRs), and the majority (69%) came from community settings. Programs consisted of 352 faculty and 687 residents. Practice size varied from 2,406 to 80,699 visits per year (median: 15,733). Thirteen practices focused on patient experience, 14 on quality measures, and 13 on utilization measures (practices could work on one or more threads). Six practices (21%) were unable to successfully extract comparable core measures from their nationally certified EHR over the 3-year time frame. These practices were unable to report any data, or, in a few cases, were able to report at only one time period. There was no pattern to the individual data elements they were unable to report. There was no relationship between EHR type and ability to extract data.

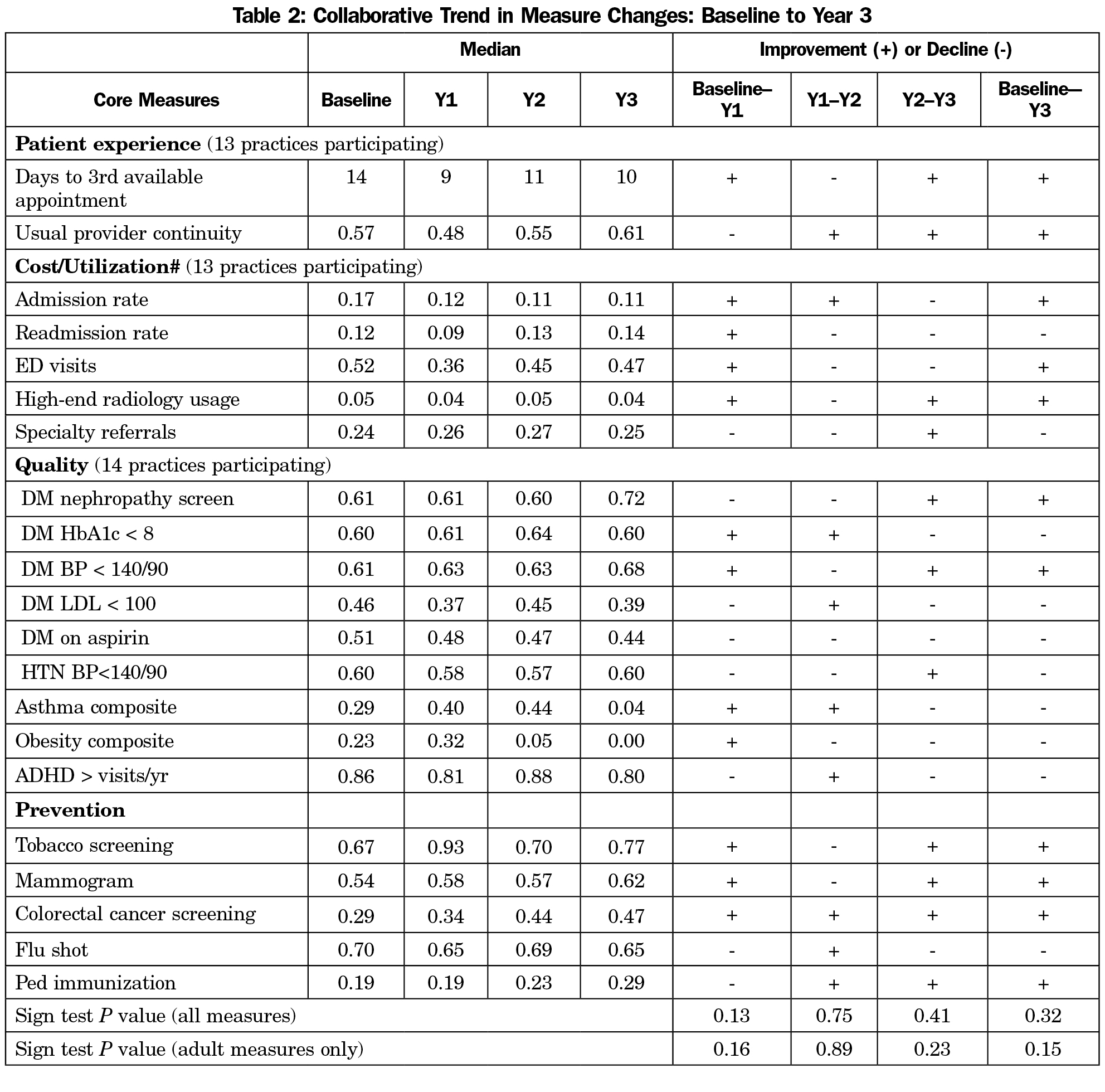

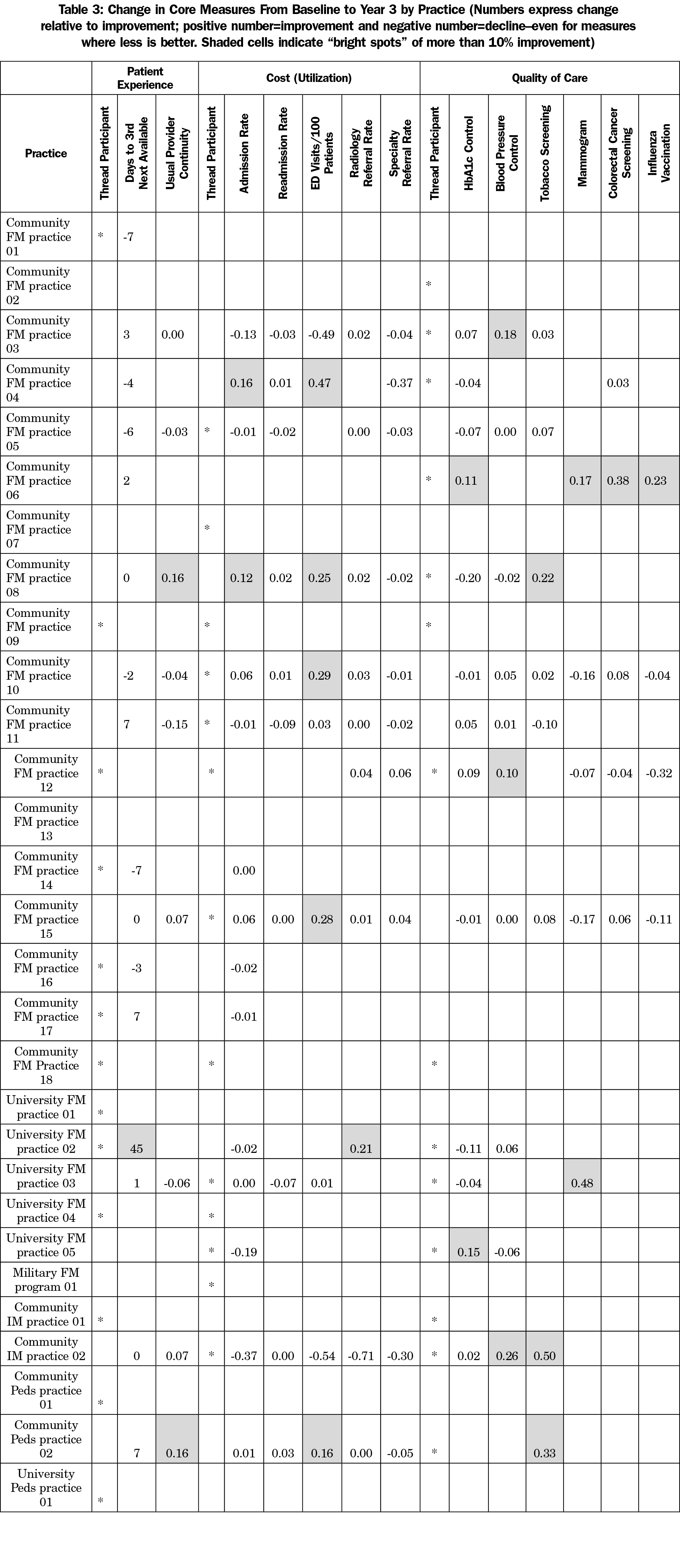

There were no statistically significant triple aim improvements noted across the collaborative as a whole (Table 2). Nonsignificant improvements were noted in access, continuity rates, hospitalization rates, emergency department visits, high-end radiology referrals, tobacco screening, mammography, colorectal cancer screening and pediatric immunization. For the 23 practices that did report data, all improved in at least one measure. Seven (24%) of the practices improved measures in all three areas of the triple aim (Table 3).

Bright Spots

Despite the absence of overall significant improvement, we found bright spots (10% or greater improvement from baseline to year 3) for all measures except hospital readmissions and specialty referrals. All bright spot practices for care quality measures participated in the collaborative’s quality thread. Two-thirds of bright spot practices for cost and patient experience measures participated in those threads (Table 3). Two practices were noted as bright spots for at least one measure in each element of the triple aim. Both programs had stable EHRs and registry use, and both reported faculty involvement with data management. They also had engaged I3 champions who were able to create and sustain a culture of improvement in their programs.

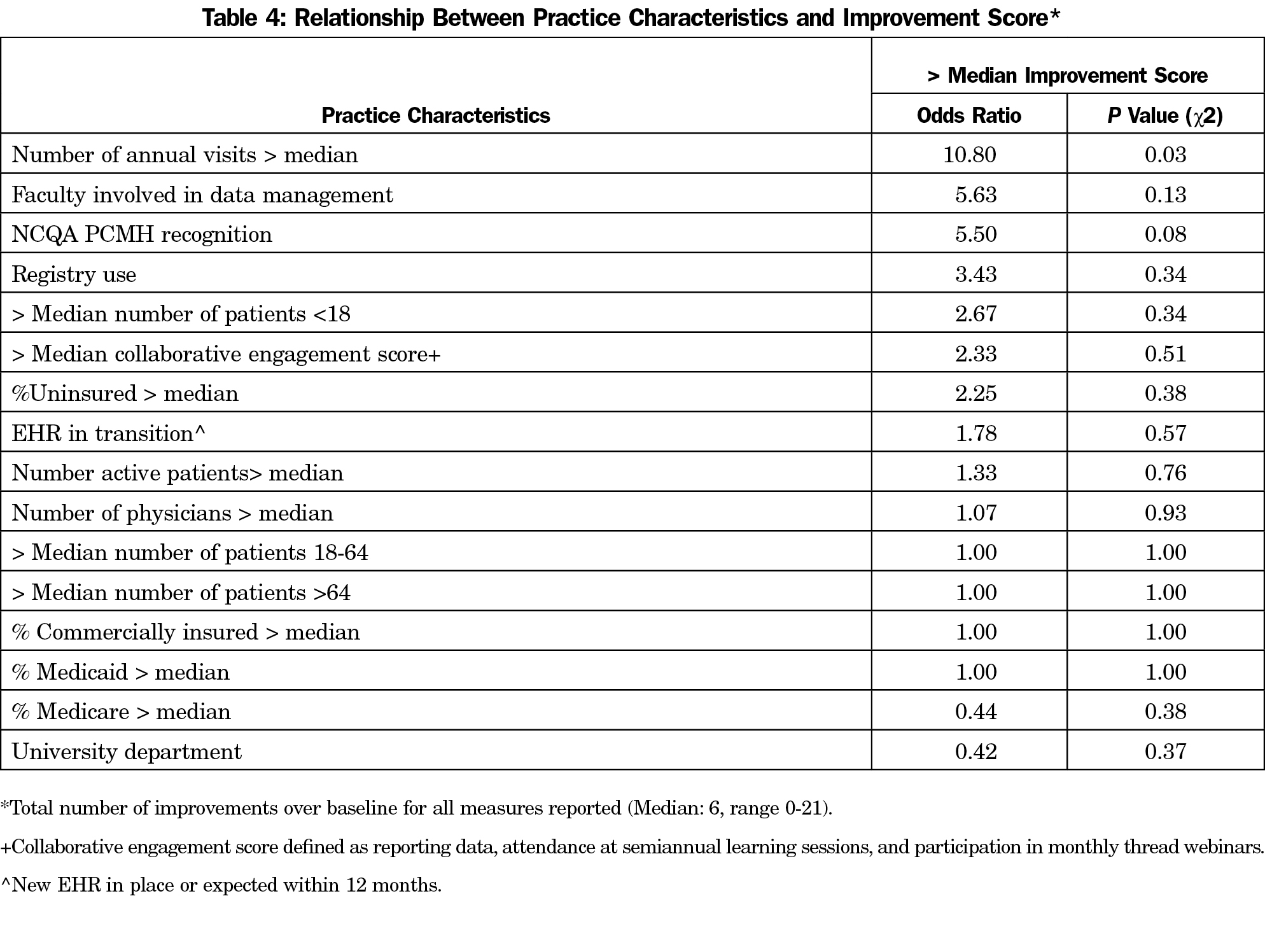

When examining the relationship between practice characteristics and improvement, we found that practices with a higher number of annual patient visits were more likely to have improvement scores above the collaborative median (OR 10.8, 95% CI: 0.68–172.2, P=0.03, Table 4). There was a statistically nonsignificant trend toward greater improvement among practices with PCMH recognition (OR 5.50, P=0.08).

A total of 18 programs provided feedback on the I3 collaborative. Key facilitators for the programs included having engaged departmental leadership support, aligning I3 work with ongoing system goals, and having a multidisciplinary team approach to getting the work done. Challenges included disruptions from conversion of a nationally certified EHR, data that had to be collected off site, multiple competing demands of teaching practices, and disseminating information to those not directly involved in I3. When asked what aspects of the triple aim the practice found easiest to address, responses were mixed and seemed to correlate with ease of data access. Matching outcomes with interventions was difficult, as practices may have been working in one area of the triple aim while the outcome measure failed to capture the work that they were doing (eg, collecting outcomes of patient continuity and time to third next available appointment, but working on a different aspect of patient experience). When asked what aspects were most beneficial to their practice, several programs noted learning from each other, increasing resident awareness of and enthusiasm for population health, and having time set aside for face-to-face meetings to grapple with these issues.

In our study of residency teaching practices attempting to work on triple aim goals, 24% made improvements in all three aims. All practices that were able to pull and report data improved on at least one measure. However, there were no statistically significant improvements in the collaborative as a whole. This is likely due to an array of factors, including wide variation in triple aim baseline measures across practices. The specific approaches to improving care may be more local than hypothesized, as some sites were able to make significant improvement. Additionally, allowing participants to select the measures they wanted to improve, while enhancing their engagement, may have missed an important assessment of their capacity to engage in practice improvement activities. The triple aim, often thought of as an iron triangle,15 where cost, experience, and quality goals are in constant competition with each other, is also difficult; working on more than one of these areas at a time may mean that individual measures are tougher to move within a short time period.

Many practices experienced substantial difficulty obtaining data, even those that were part of large health systems. Barriers included limited capabilities of the nationally certified EHR to pull needed measures; policy limits internal to the health system or length of reporting queue; and insufficient training or skill of staff to obtain needed EHR data. Barriers to improvement will continue if these supports are not addressed.

The structure of the I3 POP Collaborative, which varied in design from its earlier two counterpart programs, may have also contributed to inability to demonstrate significance of outcomes overall. Managing three different thematic areas simultaneously contributed to insufficient focus on targeted aims for improvement. Allowing practices to have freedom choosing measures without limits and not providing incentives or tangible support for the work involved, beyond educational sessions, may have contributed to the collaborative taking on a more diffused or lesser priority within the teaching practices. Finally, the variables measured were compared using traditional statistical methods that may not account for clinical significance or ability to surpass a clinically significant threshold. Furthermore, the rate for several measures already exceeded national benchmarks16,17 at baseline. However, this experience provides insight into the challenges and strains that primary care microsystems are experiencing within health systems.

When reflecting on the facilitators of improvement, having departmental leadership support, aligning I3 work with local health system goals, and having a multidisciplinary team approach to getting the work done were described as key facilitators. Having a vision and commitment for systemic change is important for success.18 Future work needs to focus on cultivating leadership and systems supports in large-scale improvement.

Limited literature describes specific curricular activities or experiences that incorporate QI principles and methodologies into graduate medical education.19-31 Several of these studies have shown an improvement in patient care as measured by specific quality indicators. The I3 POP takes a broader approach and incorporates the triple aim for both learners and faculty development.

There are several limitations to the I3 POP evaluation. I3 POP is a decentralized, regional collaborative, with most data obtained through EHR. Although these results are subject to the limitations of data pulls, these are the same data that our health care systems are evaluated upon, making it paramount that data are as accurate as possible. Additionally, many of these residency programs are working on QI initiatives in chronic disease, prevention and utilization independent of this collaborative. It is not possible to tease out the effects of the I3 collaborative from those of other practice- and system-based improvement efforts.

While the collaborative did not demonstrate effectiveness on measures of overall quality of care, several individual sites and residency programs did achieve improvement and related the positive impact of working in a collaborative on their ability to improve care. Future design of I3 Collaborative activities will take into account the design lessons learned from I3 POP to enhance outcomes, including greater degree of focus, increased structure, more attention to leadership buy-in and support, and assurance of data collection capabilities.

Acknowledgments

Financial support for I3 collaborative was provided by grants from the Duke Endowment #6365-SP, the Fullerton Foundation, Inc #12-05 and #15-02, and the North Carolina Area Health Education Centers Program.

Presentations: Oral presentation at the November 2015 NAPCRG meeting, Cancun, Mexico.

Special thanks to the UNC family medicine writing group for their thoughtful reviews of the drafts.

References

1. Berwick DM, Nolan TW, Whittington J. The triple aim: care, health, and cost. Health Aff (Millwood). 2008;27(3):759-769. https://doi.org/10.1377/hlthaff.27.3.759.
2. Centers for Medicare and Medicaid Services. Accountable Care Organizations (ACO). https://www.cms.gov/Medicare/Medicare-Fee-for-Service-Payment/ACO/index.html?redirect=/aco. Accessed July 2015.
3. Bajner RF, Burnett JW, Prescott B, Barth P. Issue brief: Medicare Access and CHIP Reauthorization Act (MACRA) quality payment program. https://www.navigant.com/insights/healthcare/2016/macra-whitepaper. Accessed October 2016.
4. Carney PA, Eiff MP, Green LA, et al. Transforming primary care residency training: a collaborative faculty development initiative among family medicine, internal medicine, and pediatric residencies. Acad Med. 2015;90(8):1054-1060. https://doi.org/10.1097/ACM.0000000000000701.
5. Newton W, Baxley E, Reid A, Stanek M, Robinson M, Weir S. Improving chronic illness care in teaching practices: learnings from the I³ collaborative. Fam Med. 2011;43(7):495-502.
6. Blayney DW. Measuring and improving quality of care in an academic medical center. J Oncol Pract. 2013;9(3):138-141. https://doi.org/10.1200/JOP.2013.000991.
7. Dzau VJ, Cho A, Ellaissi W, et al. Transforming academic health centers for an uncertain future. N Engl J Med. 2013;369(11):991-993. https://doi.org/10.1056/NEJMp1302374.
8. Athena Health. Academic medical centers: disrupt, transform and grab the mantle of change. https://www.athenahealth.com/whitepapers/academic-medical-center-challenges. Published March 2014. Accessed June 2016.
9. Reid A, Baxley E, Stanek M, Newton W. Practice transformation in teaching settings: lessons from the I³ PCMH collaborative. Fam Med. 2011;43(7):487-494.
10. Institute for Healthcare Improvement. http://www.ihi.org. Accessed Feb 28, 2017.
11. Donahue KE, Reid A, Lefebvre A, Stanek M, Newton WP. Tackling the triple aim in primary care residencies: the I3 POP Collaborative. Fam Med. 2015;47(2):91-97.
12. Weir SS, Page C, Newton WP. Continuity and Access in an Academic Family Medicine Center. Fam Med. 2016;48(2):100-107.
13. Stata Statistical Software. Release 10.1. College Station, TX: StataCorp LP; 2009.
14. Lincoln YS, Guba E. Naturalistic Inquiry. Beverly Hills, California: SAGE Publications; 1985.
15. Carroll A. JAMA forum-The “iron triangle” of health care: access, cost and quality. https://newsatjama.jama.com/2012/10/03/jama-forum-the-iron-triangle-of-health-care-access-cost-and-quality/. Accessed October 2016.
16. Health Policy Brief: Medicare Hospital Readmissions Reduction Program. Health Aff. 2013;12(November). http://healthaffairs.org/healthpolicybriefs/brief_pdfs/healthpolicybrief_102.pdf. Accessed March 2, 2017.
17. Jencks SF, Williams MV, Coleman EA. Rehospitalizations among patients in the Medicare fee-for-service program. N Engl J Med. 2009;360(14):1418-1428. https://doi.org/10.1056/NEJMsa0803563.
18. Nutting PA, Gallagher KM, Riley K, White S, Dietrich AJ, Dickinson WP. Implementing a depression improvement intervention in five health care organizations: experience from the RESPECT-Depression trial. Adm Policy Ment Health. 2007;34(2):127-137. https://doi.org/10.1007/s10488-006-0090-y.
19. Carek PJ, Dickerson LM, Boggan H, Diaz V. A limited effect on performance indicators from resident-initiated chart audits and clinical guideline education. Fam Med. 2009;41(4):249-254.
20. Daniel DM, Casey DE Jr, Levine JL, et al. Taking a unified approach to teaching and implementing quality improvements across multiple residency programs: the Atlantic Health experience. Acad Med. 2009;84(12):1788-1795. https://doi.org/10.1097/ACM.0b013e3181bf5b46.
21. Djuricich AM, Ciccarelli M, Swigonski NL. A continuous quality improvement curriculum for residents: addressing core competency, improving systems. Acad Med. 2004;79(10)(suppl):S65-S67. https://doi.org/10.1097/00001888-200410001-00020.
22. Ellrodt AG. Introduction of total quality management (TQM) into an internal medicine residency. Acad Med. 1993;68(11):817-823. https://doi.org/10.1097/00001888-199311000-00002.
23. Gould BE, Grey MR, Huntington CG, et al. Improving patient care outcomes by teaching quality improvement to medical students in community-based practices. Acad Med. 2002;77(10):1011-1018. https://doi.org/10.1097/00001888-200210000-00014.
24. Holmboe ES, Prince L, Green M. Teaching and improving quality of care in a primary care internal medicine residency clinic. Acad Med. 2005;80(6):571-577. https://doi.org/10.1097/00001888-200506000-00012.
25. Krajewski K, Siewert B, Yam S, Kressel HY, Kruskal JB. A quality assurance elective for radiology residents. Acad Radiol. 2007;14(2):239-245. https://doi.org/10.1016/j.acra.2006.10.018.
26. Oyler J, Vinci L, Arora V, Johnson J. Teaching internal medicine residents quality improvement techniques using the ABIM’s practice improvement modules. J Gen Intern Med. 2008;23(7):927-930. https://doi.org/10.1007/s11606-008-0549-5.
27. Paulman P, Medder J. Teaching the quality improvement process to junior medical students: the Nebraska experience. Fam Med. 2002;34(6):421-422.
28. Vinci LM, Oyler J, Johnson JK, Arora VM. Effect of a quality improvement curriculum on resident knowledge and skills in improvement. Qual Saf Health Care. 2010;19(4):351-354. https://doi.org/10.1136/qshc.2009.033829.
29. Voss JD, May NB, Schorling JB, et al. Changing conversations: teaching safety and quality in residency training. Acad Med. 2008;83(11):1080-1087. https://doi.org/10.1097/ACM.0b013e31818927f8.
30. Carek PJ, Dickerson LM, Stanek M, et al. Education in quality improvement for practice in primary care during residency training and subsequent activities in practice. J Grad Med Educ. 2014;6(1):50-54. https://doi.org/10.4300/JGME-06-01-39.1.
31. Diaz VA, Carek PJ, Johnson SP. Impact of quality improvement training during residency on current practice. Fam Med. 2012;44(8):569-573.
