Background and Objectives: Entrustable professional activities (EPAs) are a novel assessment framework in competency-based medical education. While there are published pilot reports on the utilization and validation of EPAs within undergraduate medical education (UME), there is a paucity of research within graduate medical education (GME). This study aimed to explore the landscape of EPAs within family medicine GME, particularly the understanding of EPAs, the extent of their utilization, and the benefits and challenges of implementing EPAs as an assessment framework within family medicine residency programs (FMRPs) in the United States.

Methods: A cross-sectional survey was conducted in fall 2017 as part of the 2017 Council of Academic Family Medicine (CAFM) Educational Research Alliance (CERA) Family Medicine Residency Program (FMRP) Director omnibus online survey. Directors of ACGME-accredited FMRPs were invited by email to participate.

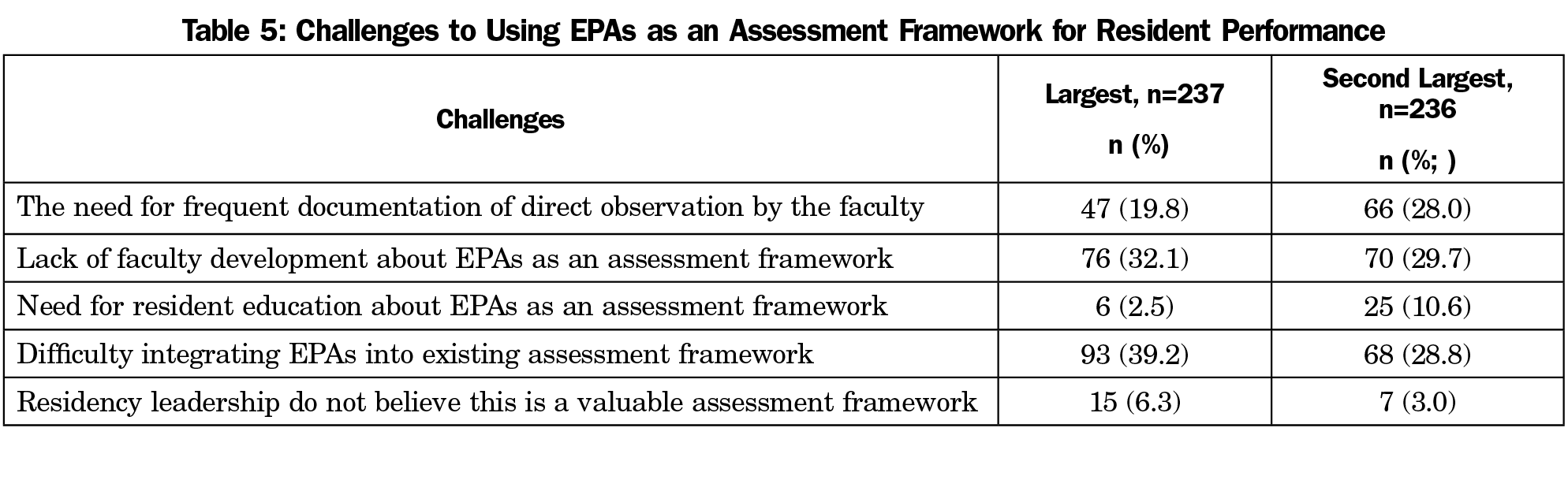

Results: The survey response rate was 53.1% (267/503). Overall, 90.1% (237/263) of FMRP directors were aware of EPAs as an assessment framework and 82.8% (197/238) understood the principles of EPAs, but 39.9% (95/238) were not confident in utilizing EPAs. Only 15.1% (36/238) of FMRP directors reported currently employing EPAs as an assessment tool. Identified benefits of EPA use included increased transparency and congruence of expectations between learners and FMRPs, as well as facilitation of formative feedback. Identified barriers to EPA incorporation included difficulty integrating EPAs into the current assessment framework and the need for faculty development.

Conclusions: While EPAs are well recognized and understood by FMRP directors, there is a significant lack of utilization of this assessment framework within FMRPs in the United States.

Competencies represent knowledge, attitude, and skill components that need to be mastered by a learner to be able to perform a complete professional activity.1,2 Competencies in this context are finite, observable actions that can determine the ability of a learner in a specific knowledge and skill area. Objective assessment of competencies in the clinical setting is a consistent and longstanding challenge for medical educators where direct observations are limited by time and frequency. Entrustable professional activities (EPAs) are a combination of multiple competencies that, when successfully performed together, compose a professional task.3 By combining competencies within tangible, clinical tasks, or EPAs, assessment of a learner’s competence in the clinical setting can be streamlined and more meaningful. The EPA framework has relevance across the continuum of undergraduate, graduate, and continuing medical education in preparation for health professionals’ roles.

In 2014 the Association of American Medical Colleges (AAMC) added the Core EPAs for entering residency to its competency-based education teaching and assessment framework; these EPAs describe activities that medical students should be able to perform upon entering residency.4 Currently, 10 pilot medical schools are implementing and utilizing the AAMC’s list of EPAs as an assessment tool.5 Through this pilot, academic clinicians are preliminarily evaluating potential challenges and successes in four main concept areas: formal entrustment, assessment, curriculum development, and faculty development.5

In the context of graduate medical education, the Accreditation Council for Graduate Medical Education (ACGME) Outcome Project introduced six domains of core competencies to enhance physician resident assessment in the late 1990s. These competency domains were later operationalized for outcomes-based assessment through the Next Accreditation System (NAS) Milestones.6-9 EPAs were not initially included within the Outcome Project assessment framework. Shaughnessy and colleagues first described EPAs in family medicine in 2013, identifying 76 EPAs for practicing family medicine physicians.10 Given the large number of EPAs, this framework was understandably daunting to residency educators, which limited the immediate functionality of EPAs within family medicine residency programs (FMRPs). Family Medicine for America’s Health simplified the EPAs in 2015 by developing a list of 20 EPAs for family medicine, supported by the Association of Family Medicine Residency Directors (AFMRD).11 Even with two sets of EPAs for FMRP assessment, there is no published literature on the general understanding and current utilization of EPAs within FMRPs. Questions also remain regarding the optimal EPA list to use for an assessment framework and the potential successes and challenges in utilizing EPAs in FMRPs. The purpose of this study was to explore the landscape of EPAs within FMRPs as a teaching and assessment framework. Given the AAMC initiative to promote use of EPAs within undergraduate medical education, we hypothesized that academic-based or academic-affiliated programs would be more familiar with EPAs and would utilize them more widely as an assessment framework. We examined the perspectives of FMRP directors regarding their understanding and utilization of EPAs in FMRPs, with the intention of using the findings to guide next steps toward better utilization of EPAs in training and assessment of competencies in graduate medical education.

Study Design

The study questions were part of the larger 2017 Council of Academic Family Medicine (CAFM) Educational Research Alliance (CERA) omnibus survey of family medicine residency program directors.12 CAFM is a leadership and research collaborative between the Association of Departments of Family Medicine, the Association of Family Medicine Residency Directors, the North American Primary Care Research Group, and the Society of Teachers of Family Medicine. This cross-sectional survey is distributed annually to the program directors of all ACGME-accredited US FMRPs, as identified by the Association of Family Medicine Residency Directors (AFMRD).

Data were collected from September to October 2017. Email invitations to participate were delivered with the survey utilizing the online program SurveyMonkey. Five follow-up emails were sent to encourage nonrespondents to participate after the initial email invitation. There were 526 program directors at the time of the survey. Eleven had previously opted out of CERA surveys, hence the survey was emailed to 515 individuals. Twelve emails could not be delivered. The final sample size was therefore 503 FMRP program directors. The American Academy of Family Physicians Institutional Review Board gave ethical approval for this study in August 2017.
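The sample derivation above is simple arithmetic, and the short sketch below retraces it. The variable names are illustrative only; the counts are those reported in this article, including the 267 respondents reported in the results.

```python
# Counts as reported in the text; variable names are illustrative.
program_directors = 526   # program directors at the time of the survey
opted_out = 11            # previously opted out of CERA surveys
undeliverable = 12        # emails that could not be delivered

emailed = program_directors - opted_out
final_sample = emailed - undeliverable

responses = 267           # respondents reported in the results
response_rate = round(100 * responses / final_sample, 1)

print(emailed, final_sample, response_rate)
```

Running the sketch reproduces the 515 emailed invitations, the final sample of 503, and the 53.1% response rate.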

Survey Question Development

Demographic data were obtained from the recurring questions of the omnibus CERA survey. The geographic regions are those defined by the Association of American Medical Colleges (AAMC). Researchers (J.J. and M.H.) devised 10 research questions regarding the cognitive understanding of EPAs by FMRP directors, the extent of utilization within their FMRPs, and factors facilitating or hindering the implementation and use of EPAs as an assessment framework. The CERA steering committee evaluated questions for consistency with the overall subproject aim, readability, and existing evidence of reliability and validity. The CERA research liaison (J.A.) and study investigators (J.J. and M.H.) edited survey questions in accordance with research objectives. Final research questions are presented in Appendix A (https://journals.stfm.org/media/2293/appendixjarrett-fm19.pdf). CERA pretested the omnibus survey with family medicine educators who were not part of the target population. Following pretesting, questions were refined for flow, timing, and readability.

Analysis

Descriptive statistics were generated for all variables. Awareness of EPAs and understanding of and confidence in the use of EPAs were compared across academic affiliation of the residency, gender, and years as program director using the Fisher exact test. Statistical analyses were performed using IBM SPSS Statistics for Windows, version 24 (IBM Corp, Armonk, NY). Statistical significance was set at a P value of 0.05.
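For readers unfamiliar with the Fisher exact test, the following is a minimal pure-Python sketch of a two-sided test on a 2x2 table. It is not the SPSS procedure used in this study. The function name `fisher_exact_two_sided` is hypothetical, the two-sided P value follows one common definition (summing the probabilities of all tables no more likely than the observed one), and the counts in the example are a textbook-style illustration, not data from this survey.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher exact P value for the 2x2 table [[a, b], [c, d]]."""
    row1, row2 = a + b, c + d
    col1 = a + c
    total_tables = comb(row1 + row2, col1)

    def prob(k):
        # Hypergeometric probability of k in the top-left cell
        # when all row and column totals are held fixed.
        return comb(row1, k) * comb(row2, col1 - k) / total_tables

    p_observed = prob(a)
    lowest = max(0, col1 - row2)
    highest = min(row1, col1)
    # Sum every table that is no more probable than the observed one;
    # the small tolerance guards against floating-point ties.
    return sum(
        p
        for p in (prob(k) for k in range(lowest, highest + 1))
        if p <= p_observed * (1 + 1e-9)
    )


# Illustrative counts only (NOT study data): 3 vs 1 in one group, 1 vs 3 in the other.
p_value = fisher_exact_two_sided(3, 1, 1, 3)
print(round(p_value, 4))  # 0.4857
```

With margins this small the test has little power, which is why the illustrative table yields a large P value despite the apparent difference between groups.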

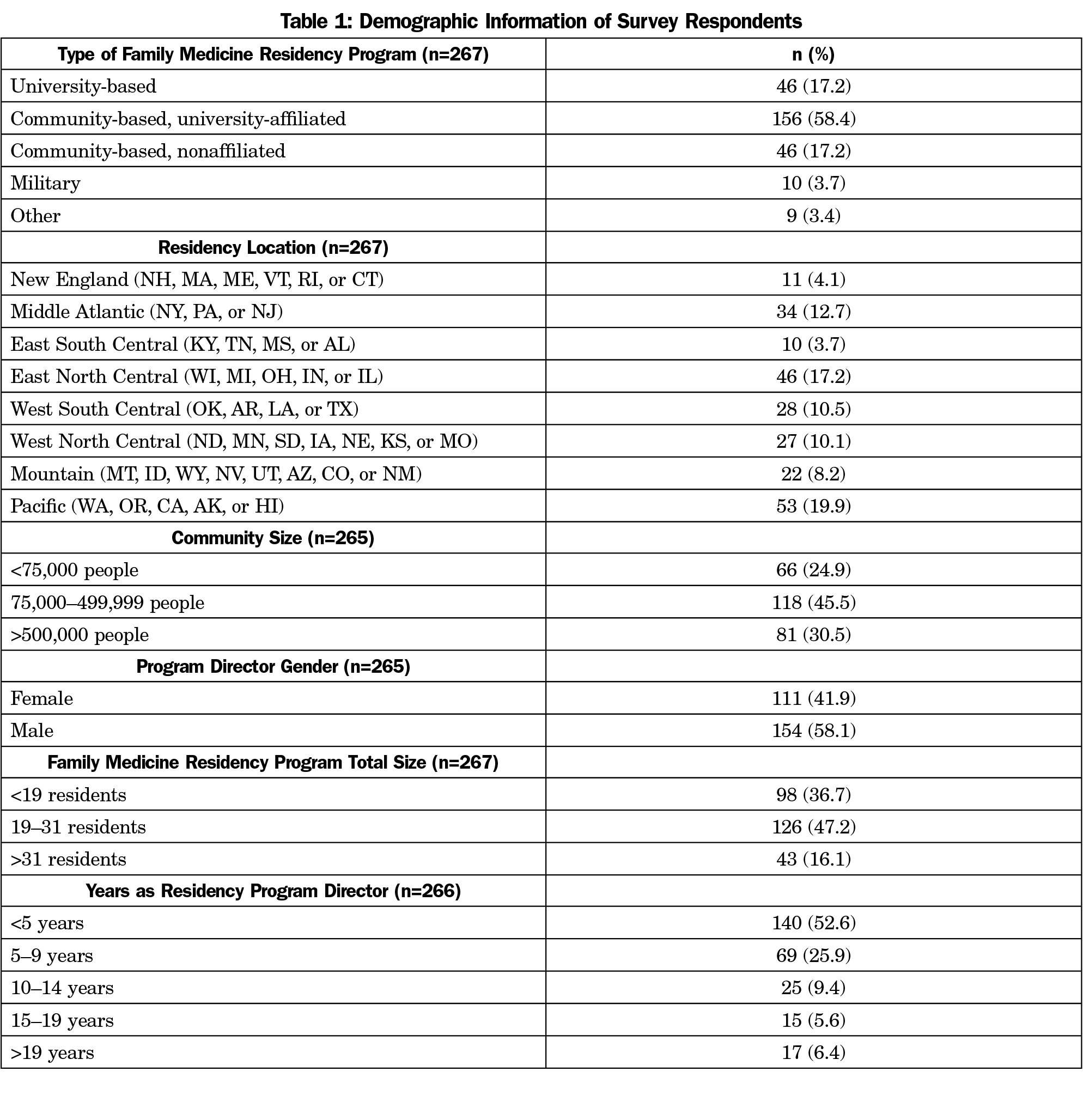

The overall response rate for the survey was 53.1% (267/503). Not every program director answered every question; consequently, the denominators varied by survey question and are noted. The majority (77.7%) of programs were university-affiliated family medicine residency programs, whether community- or university-based. Almost half (45.5%) of the family medicine residency programs were midsized, with 19 to 31 total physician residents. Just over half (52.6%) of the program directors had served for less than 5 years as program director, and another quarter had served between 5 and 9 years (Table 1).
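Because denominators differ by question, each percentage has to be computed against the number of directors who answered that item. The sketch below recomputes the headline percentages from the numerator and denominator pairs reported in this article; the dictionary labels are shorthand introduced here for illustration.

```python
# (numerator, denominator) pairs as reported in this article;
# the labels are shorthand, not the survey's wording.
items = {
    "aware of EPAs": (237, 263),
    "understood EPA principles": (197, 238),
    "not confident using EPAs": (95, 238),
    "currently using EPAs": (36, 238),
}

percentages = {
    label: round(100 * numerator / denominator, 1)
    for label, (numerator, denominator) in items.items()
}

for label, pct in percentages.items():
    print(f"{label}: {pct}%")
```

The recomputed values (90.1%, 82.8%, 39.9%, and 15.1%) match the reported figures.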

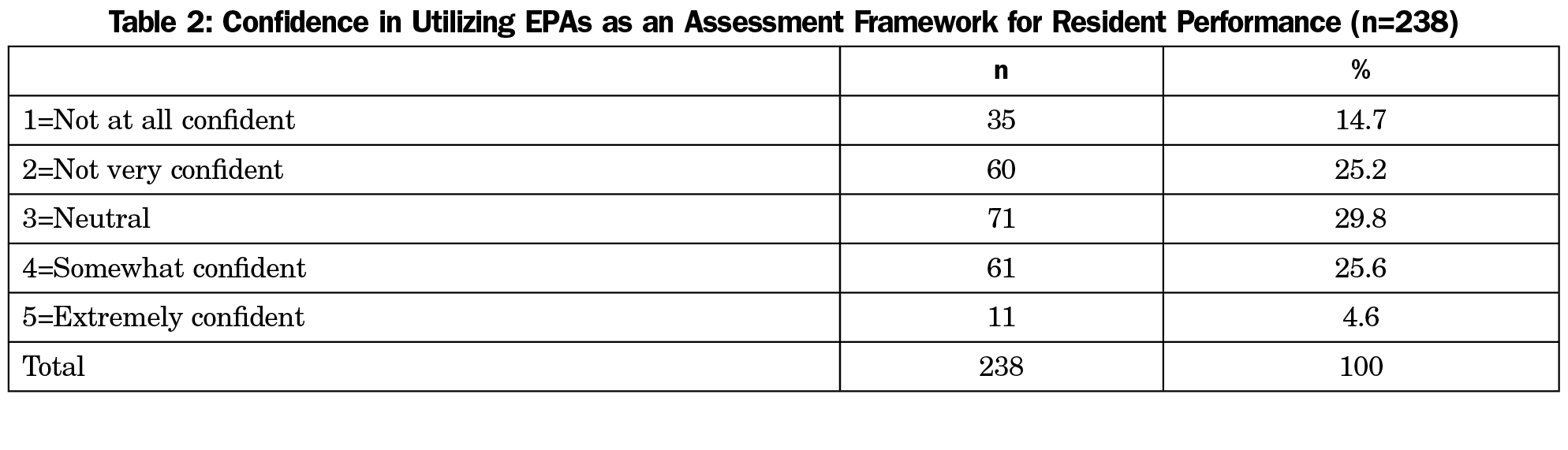

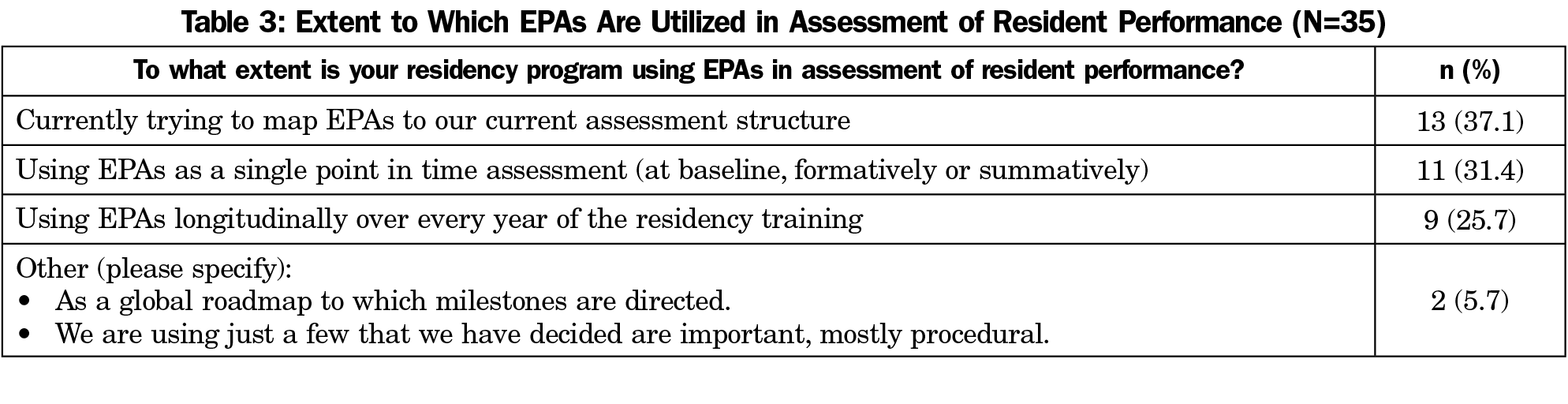

Almost all the program directors (90.1%) were aware of EPAs as an assessment framework for resident performance. The majority (82.8%) of program directors reported that they understood the principles of EPAs for assessment, yet only 30.2% reported being somewhat or extremely confident in utilizing EPAs (Table 2). We did not find a statistically significant association between understanding of or confidence in the use of EPAs and academic affiliation, gender, or years as program director. However, program directors at university-affiliated programs were more aware of EPAs than those at community and military programs (92.5% vs 82.5%, P=0.029; Fisher exact test). Only 15.1% (36/238) of program directors reported that they currently employ EPAs as an assessment framework. The extent of use of EPAs in the assessment of residents varied among programs (Table 3). Of the 36 FMRP directors who reported using EPAs, the most frequently used EPA list was that of Family Medicine for America’s Health (44.1%), followed by the Shaughnessy et al EPAs (14.7%) and the Association of American Medical Colleges EPAs for entering residency (5.9%).4,10,11 Another 35.3% (12/36) of program directors reported using a combination of EPAs from these lists.

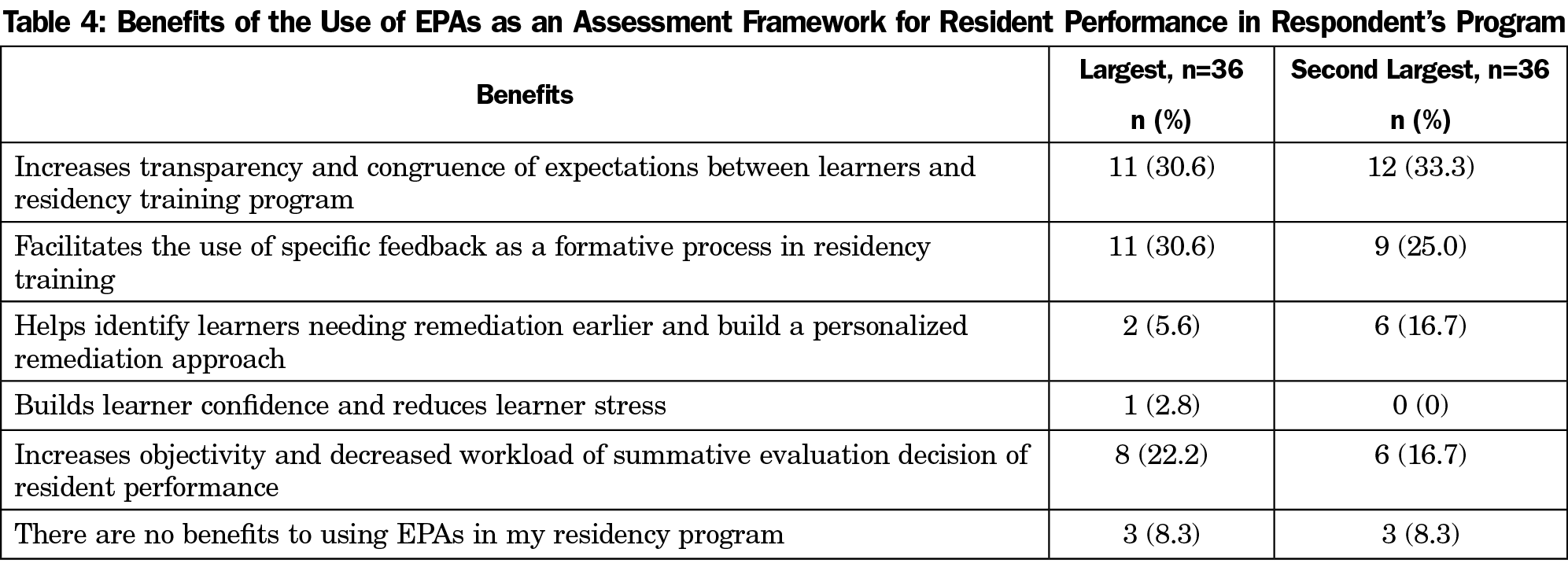

The program directors considered the most important benefits of using EPAs as an assessment framework to be (1) increased transparency and congruence of expectations between learners and the residency training program, and (2) facilitation of specific feedback as a formative process (Table 4). The largest challenges to utilization of EPAs were difficulty integrating EPAs into the existing assessment framework and lack of faculty development regarding EPAs (Table 5).

This study outlines significant awareness and understanding of EPAs as an assessment framework among FMRP directors. Benefits associated with utilizing EPAs as an assessment framework were broad and included increased transparency and congruence of learner expectations and facilitation of formative feedback for physician residents. Even with clear understanding and perceived benefits of use, FMRP directors were not confident in utilizing EPAs as an assessment framework for their family medicine resident physicians. Barriers to their incorporation centered on difficulty integrating EPAs into the current assessment system and the need for faculty development on this new system.

Our study indicates that there is awareness and understanding of EPAs within family medicine GME; however, FMRP directors’ confidence in utilizing EPAs as an assessment framework does not match that understanding. It is not surprising that FMRP directors are aware of EPAs, given the abundance of literature regarding their theory and framework for competency-based education.1,3,8,13,14 Evidence regarding functionality of EPAs within FMRPs may be limited in part due to confusion created by multiple listings of EPAs for family medicine physicians.4,10,11 The results of this study show that no single list of family medicine EPAs is used by a majority of family medicine GME programs, with over one-third of programs utilizing some combination of published family medicine EPAs. There is a need for consensus and guidance on the preferred list of family medicine EPAs to establish best practices in training and assessment and to build the confidence that family medicine GME faculty need to operationalize and increase utilization of this assessment framework.

Three major themes emerged from our findings regarding challenges to utilization of EPAs within family medicine GME: (1) difficulty integrating EPAs within the current assessment mechanism, (2) need for faculty development on the novel framework, and (3) lack of time for direct observations. The ACGME Outcome Project and subsequent NAS Milestones propelled GME toward outcomes-based education to support the quality of education, innovation, and patient safety.15 While the six ACGME core competencies have supported educational outcomes and improvements in physician education, the model is still somewhat abstract and challenging to map to EPAs.15 Faculty development may be simpler for EPAs in GME because EPAs are based on clinical descriptions with which faculty are well versed, rather than on abstract educational outcomes or milestones.16-18 EPAs as an assessment framework will require more faculty time through increased direct observations of learners, which will enhance the reliability and validity of assessment.19 Future inquiries regarding learner and faculty outcomes, such as level of competency or faculty ability to provide feedback, would add to the understanding of potential outcomes of EPA utilization.

Our findings raise questions regarding approaches that might incentivize program directors to overcome obstacles to implementation. We cannot refute the fact that EPAs, coupled with the NAS Milestones, will add time burden;16 however, there is evidence that utilizing these tools will have significant benefit by enhancing the competence assessment process and learner outcomes.19 Our research supports this evidence and identifies increased transparency of expectations and facilitation of feedback as the specific enhancements to resident assessment. What is needed is a balanced approach that optimizes the process for assessment of physician competence. We believe the use of EPAs as an assessment framework is an opportunity for bridging competency-based education and assessment across the continuum of medical education.

There are limitations to this study. Family medicine residency program directors who were unfamiliar with or not utilizing EPAs may be less likely to respond to these questions, resulting in selection bias and over- or underreporting of the use of EPAs within family medicine GME. Although study investigators are engaged in the use of EPAs and provided thoughtful multiple-choice options for selection, some important factors may have been missed or not described fully. Findings may not be generalizable outside of CERA members or in other specialties.

The high stakes in the preparation of our future health workforce and its impact on the health of individuals and communities necessitate a clear vocabulary for the work performed and an integrated assessment framework toward those roles. EPAs are an emerging assessment framework that is growing in recognition within medical education as a way to connect competency-based education to practice. While EPAs are well understood within family medicine GME and have noted benefits for learners, FMRP directors are challenged by the process of implementing EPAs within their current assessment structures. The findings of our study provide impetus and promise for the continued work of incorporating EPAs within FMRPs. Globally, a focused effort to define a clear list of EPAs for family medicine and to map those EPAs to the current core competencies is imperative as a bridge for future utilization.

While EPAs are well recognized and understood by FMRP directors, there is a significant lack of utilization of this assessment framework within FMRPs in the United States. There are clear benefits to utilization of EPAs within graduate medical education for both teachers and learners. However, significant challenges exist to implementing EPAs in the current assessment structure. Our study adds to the body of knowledge in this area and calls for additional inquiry to delineate best practices in assessment of trainee physicians.

Acknowledgments

The authors thank Allen F. Shaughnessy, PharmD, MMedEd, for his insight during survey question development.

References

- Ten Cate O. Competency-based education, entrustable professional activities, and the power of language. J Grad Med Educ. 2013;5(1):6-7. https://doi.org/10.4300/JGME-D-12-00381.1

- Wijnen-Meijer M, van der Schaaf M, Nillesen K, Harendza S, Ten Cate O. Essential facets of competence that enable trust in graduates: a delphi study among physician educators in the Netherlands. J Grad Med Educ. 2013;5(1):46-53. https://doi.org/10.4300/JGME-D-11-00324.1

- Ten Cate O, Chen HC, Hoff RG, Peters H, Bok H, van der Schaaf M. Curriculum development for the workplace using Entrustable Professional Activities (EPAs): AMEE Guide No. 99. Med Teach. 2015;37(11):983-1002. https://doi.org/10.3109/0142159X.2015.1060308

- Association of American Medical Colleges. Core Entrustable Professional Activities for Entering Residency: Curriculum Developers’ Guide (2014). https://members.aamc.org/eweb/upload/Core%20EPA%20Curriculum%20Dev%20Guide.pdf. Accessed April 11, 2017.

- Loomis K, Amiel JM, Ryan MS, et al. Implementing an Entrustable Professional Activities Framework in Undergraduate Medical Education: Early Lessons from the AAMC Core Entrustable Professional Activities for Entering Residency Pilot. Acad Med. 2017;92(6):765-770. https://doi.org/10.1097/ACM.0000000000001543

- Nasca TJ, Philibert I, Brigham T, Flynn TC. The next GME accreditation system—rationale and benefits. N Engl J Med. 2012;366(11):1051-1056. https://doi.org/10.1056/NEJMsr1200117

- Nasca TJ, Weiss KB, Bagian JP, Brigham TP. The accreditation system after the “next accreditation system”. Acad Med. 2014;89(1):27-29. https://doi.org/10.1097/ACM.0000000000000068

- Ten Cate O. Nuts and bolts of entrustable professional activities. J Grad Med Educ. 2013;5(1):157-158. https://doi.org/10.4300/JGME-D-12-00380.1

- Ten Cate O. Entrustment as Assessment: Recognizing the Ability, the Right, and the Duty to Act. J Grad Med Educ. 2016;8(2):261-262. https://doi.org/10.4300/JGME-D-16-00097.1

- Shaughnessy AF, Sparks J, Cohen-Osher M, Goodell KH, Sawin GL, Gravel J Jr. Entrustable professional activities in family medicine. J Grad Med Educ. 2013;5(1):112-118. https://doi.org/10.4300/JGME-D-12-00034.1

- Family Medicine for America’s Health. Preamble: Entrustable Professional Activities for Family Medicine End of Residency Training. http://www.afmrd.org/index.php?mo=cm&op=ld&fid=1149. Accessed April 12, 2017.

- Mainous AG III, Seehusen D, Shokar N. CAFM Educational Research Alliance (CERA) 2011 Residency Director survey: background, methods, and respondent characteristics. Fam Med. 2012;44(10):691-693.

- Chen HC, van den Broek WE, ten Cate O. The case for use of entrustable professional activities in undergraduate medical education. Acad Med. 2015;90(4):431-436. https://doi.org/10.1097/ACM.0000000000000586

- Hauer KE, Kohlwes J, Cornett P, et al. Identifying entrustable professional activities in internal medicine training. J Grad Med Educ. 2013;5(1):54-59. https://doi.org/10.4300/JGME-D-12-00060.1

- Carraccio C, Englander R, Gilhooly J, et al. Building a Framework of Entrustable Professional Activities, Supported by Competencies and Milestones, to Bridge the Educational Continuum. Acad Med. 2017;92(3):324-330. https://doi.org/10.1097/ACM.0000000000001141

- Norman G, Norcini J, Bordage G. Competency-based education: milestones or millstones? J Grad Med Educ. 2014;6(1):1-6. https://doi.org/10.4300/JGME-D-13-00445.1

- Beeson MS, Warrington S, Bradford-Saffles A, Hart D. Entrustable professional activities: making sense of the emergency medicine milestones. J Emerg Med. 2014;47(4):441-452. https://doi.org/10.1016/j.jemermed.2014.06.014

- Favreau MA, Tewksbury L, Lupi C, Cutrer WB, Jokela JA, Yarris LM; AAMC Core Entrustable Professional Activities for Entering Residency Faculty Development Concept Group. Constructing a Shared Mental Model for Faculty Development for the Core Entrustable Professional Activities for Entering Residency. Acad Med. 2017;92(6):759-764. https://doi.org/10.1097/ACM.0000000000001511

- Hasnain M, Connell KJ, Downing SM, Olthoff A, Yudkowsky R. Toward meaningful evaluation of clinical competence: the role of direct observation in clerkship ratings. Acad Med. 2004;79(10)(suppl):S21-S24. https://doi.org/10.1097/00001888-200410001-00007
