Background and Objectives: Barriers to research in family medicine are common. Resident studies are at risk of remaining incomplete. This report describes a process improvement (PI) to optimize survey data collection in a longitudinal research protocol led by family medicine residents. The protocol subject to the process improvement sought to evaluate maternal outcomes in group prenatal care vs traditional care. In the months preceding the PI, the resident researchers noted many surveys were not completed in their intended timeframe or were missing, threatening study validity. We describe a practical case example of applying a PI tool to resident-led research.

Methods: The residents applied three plan-do-study-act (PDSA) cycles over 8 months. Throughout the cycles, we solicited barriers and proposed solutions from the research team. Process measures included percentage of surveys completed within 2 weeks of the deadline (“on-time” response rate), and percentage of surveys completed overall.

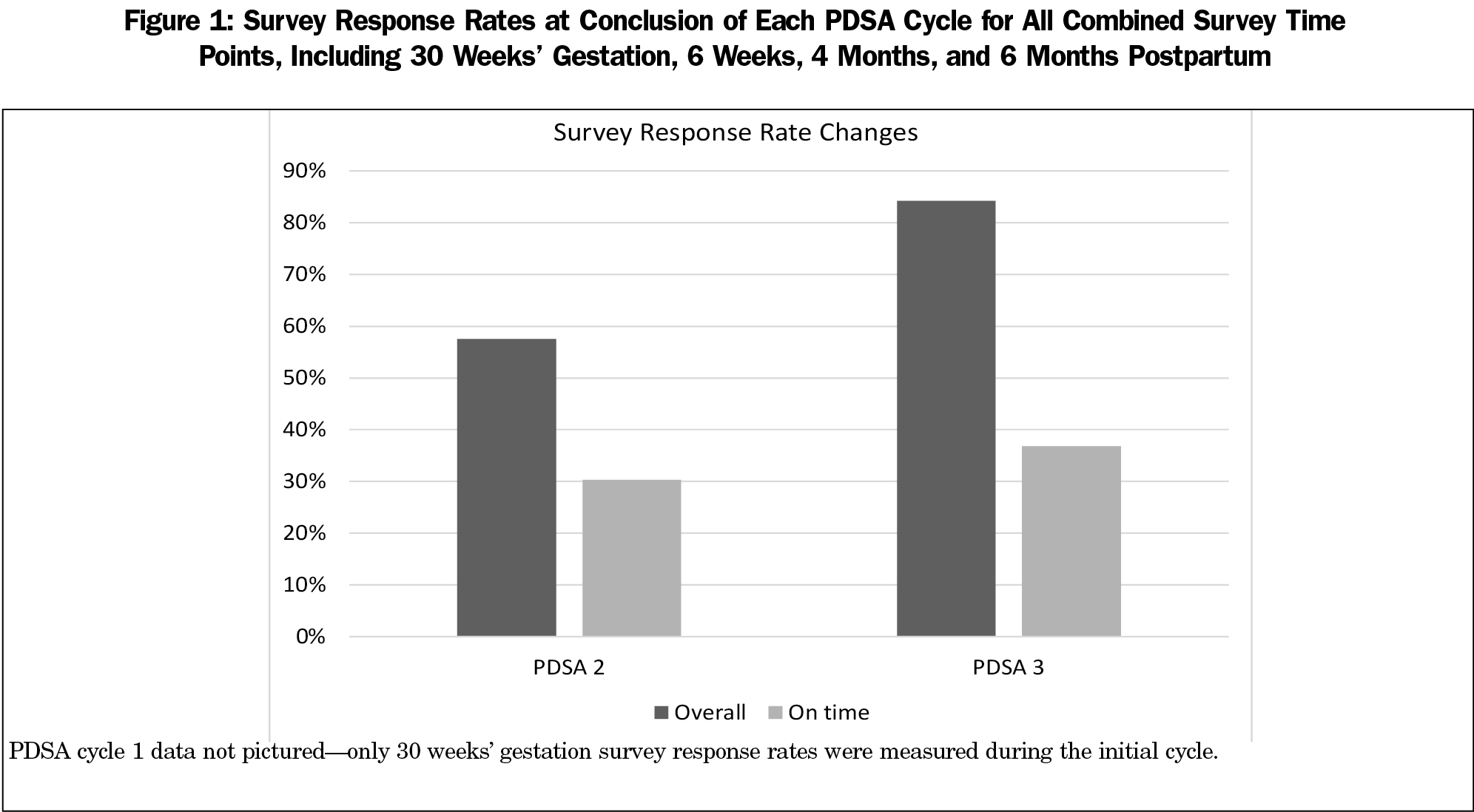

Results: A secure, shared survey tracker was created and optimized during three PDSA cycles to calculate and track survey deadlines automatically upon enrollment in the study. Automated colored flags appeared for due or overdue surveys. On-time response rates did not improve. Overall response rates did improve meaningfully from 57% (19 of 33 eligible) to 84% (16 of 19 eligible).

Conclusions: The PDSA cycles improved survey response rates in this research protocol. This intervention incurred no cost, was easily implemented, and was impactful. Other research teams can apply this PI tool to barriers in their research processes with minimal risk and cost.

Research involvement in residency promotes the practice of evidence-based medicine, lifelong learning, and continued participation in research.1 Despite these benefits, family medicine residents are relatively unlikely to participate in research.2-4 Barriers include a lack of interest, time, mentorship, and skills.5-8 Residents are more likely to perform research limited to a single time point (particularly case reports) and are unlikely to undertake longitudinal, hypothesis-driven projects.3,9 Studies are at risk of being abandoned and remaining incomplete.2,10 We describe a practical case example of using a process improvement (PI) tool to facilitate resident-led research.

As residents, we led a longitudinal, survey-based, prospective cohort research protocol designed to evaluate group prenatal care (CenteringPregnancy). Survey instruments assessing patient satisfaction, incidence of depression and anxiety, breastfeeding practices, and breastfeeding attitudes were administered at five specific time points across 13 months of follow-up. During the study, we noted that many surveys were not being completed during their intended time frame or were missing entirely. We feared that the response rate was low enough to threaten study validity, and therefore intervened immediately.

Process improvement (PI) tools have been adopted in residency settings to achieve clinical change.11-14 There is a limited body of literature describing application of process improvement concepts to improve research processes.15,16 We applied a PI tool, the Plan-Do-Study-Act (PDSA) cycle, to maximize survey responses in our low-resource setting. The PDSA cycle is commonly used in family medicine residencies.13,14,17-19 It starts with a specific, measurable, achievable, relevant, and time-limited (SMART) objective.20 The process involves continuous cycles of incremental change, assessment of progress toward the objective, and reflection on lessons learned.20,21

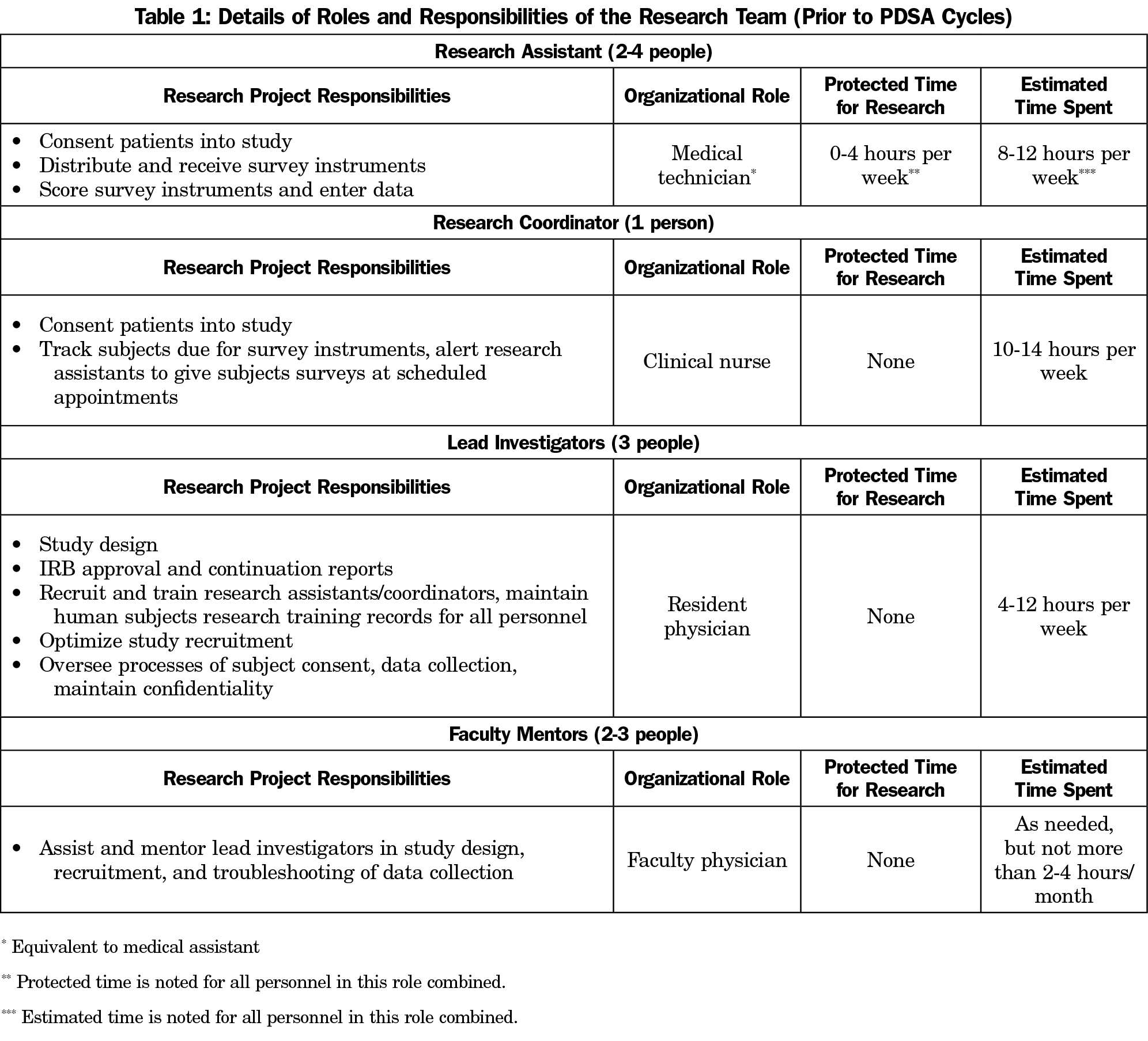

The research team was led by three family medicine residents. The PDSA cycles were not funded and incurred no monetary cost, but did require dedication of nonprotected time by research coordinators, research assistants, faculty mentors, and the resident team leaders (Table 1).

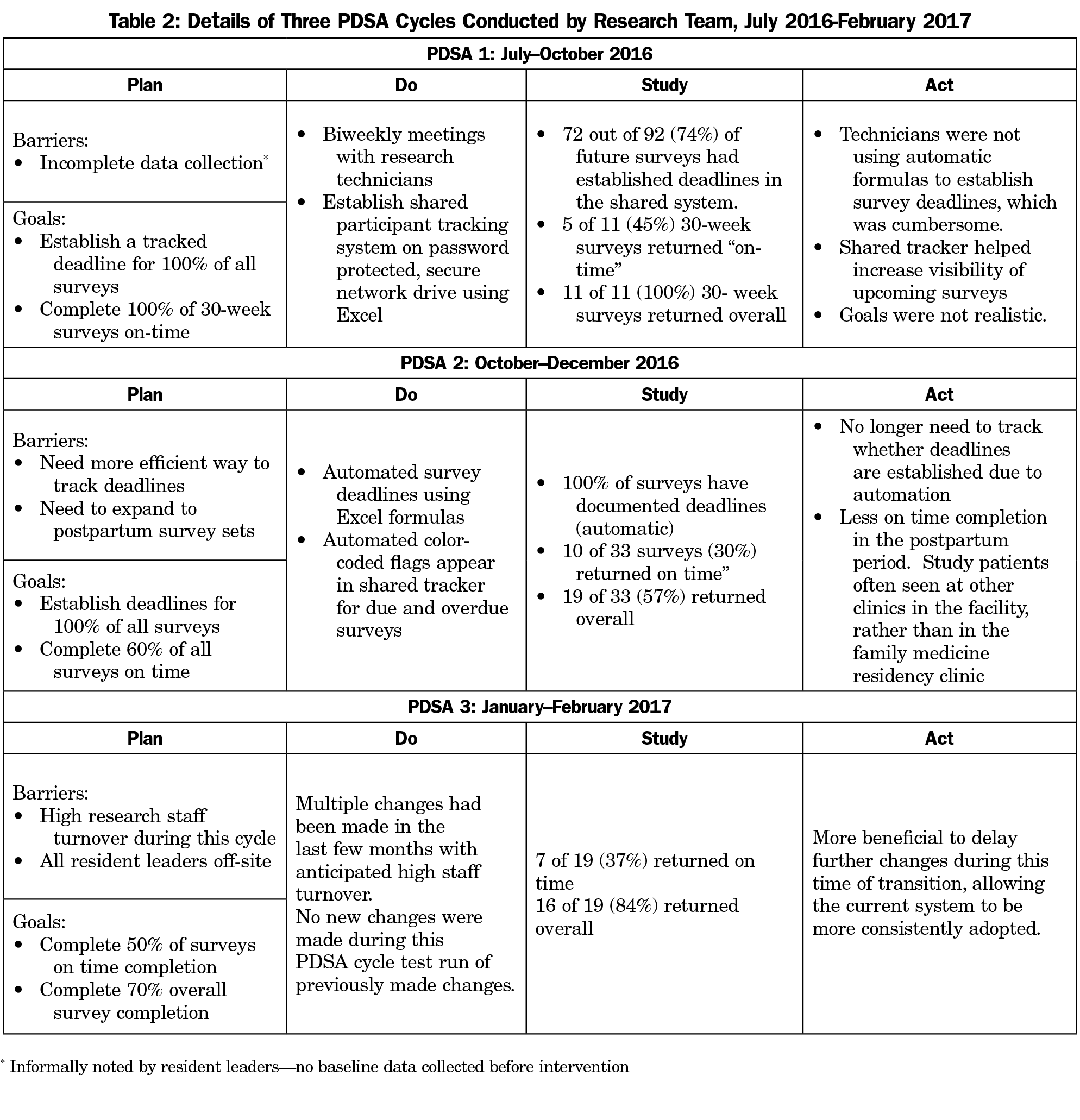

At three intervals over 8 months of the study, PDSA cycles were implemented to improve the survey response rate (Table 2). The resident leaders met biweekly with the research coordinator and assistants to solicit barriers and proposed solutions. We developed objectives following the SMART format. Interventions were chosen by consensus, based on feasibility and impact. An early intervention of the first PDSA cycle involved creation of a shared survey completion tracker. Process measures informed by this tracker included “on-time” response rates and overall response rates. On-time responses were defined as the number of participants who were eligible to complete a survey during the PDSA cycle and completed that survey within 2 weeks of the deadline. This measure was chosen by the research team to raise visibility and prioritize follow-up for those subjects. Survey deadlines were defined by the research protocol: 30 weeks gestation, 6 weeks, 4 months, and 6 months postpartum. Overall responses were defined as the number of participants who were eligible to complete a survey during the time period of the PDSA cycle and completed that survey at any point before the end of the PDSA cycle, even if outside the on-time window. PDSA cycles were process driven and did not require alterations to the existing research protocol approved by the institutional review board.
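For readers who want to build a similar tracker, the deadline and flag logic described above can be sketched in a few lines of Python. This is an illustration only, not the tool our team used. The function names are hypothetical, and it makes several assumptions: 30 weeks gestation is taken as 10 weeks before an estimated due date, postpartum deadlines are counted from that same date, and a survey is flagged as "due" once its deadline is 2 weeks or less away.

```python
from __future__ import annotations

import calendar
from datetime import date, timedelta

# Assumption: a survey is flagged "due" when its deadline is within 2 weeks.
DUE_WINDOW = timedelta(days=14)


def add_months(d: date, months: int) -> date:
    """Shift a date by whole calendar months, clamping to the month's last day."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def survey_deadlines(estimated_due_date: date) -> dict[str, date]:
    """Deadlines for the four protocol time points.

    Assumption: 30 weeks gestation is 10 weeks before the estimated due
    date (40 weeks), and postpartum deadlines are counted from that date.
    """
    return {
        "30 weeks gestation": estimated_due_date - timedelta(weeks=10),
        "6 weeks postpartum": estimated_due_date + timedelta(weeks=6),
        "4 months postpartum": add_months(estimated_due_date, 4),
        "6 months postpartum": add_months(estimated_due_date, 6),
    }


def flag(deadline: date, completed_on: date | None, today: date) -> str:
    """Return the status flag for a single survey on a given day."""
    if completed_on is not None:
        return "complete"
    if today > deadline:
        return "overdue"
    if deadline - today <= DUE_WINDOW:
        return "due"
    return "upcoming"
```

In a spreadsheet, the same logic could be expressed with date formulas and conditional formatting, so that entering one date at enrollment fills in every deadline and colors each cell by status.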

Details of the iterative process changes and the resultant changes in survey response rates are outlined in Table 2 and Figure 1. Notably, survey response rates in PDSA cycle 1 were tracked only for the participants eligible to complete the 30-week gestation surveys. This served to pilot the tracking tool. Response rates were determined for surveys at all time points for cycles 2 and 3.
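The two process measures can also be computed directly from tracker records. The sketch below is illustrative rather than a record of our actual calculations. The `SurveyRecord` type and `response_rates` function are hypothetical names, eligibility is assumed to mean that a survey's deadline fell within the PDSA cycle, and "within 2 weeks of the deadline" is assumed to mean 14 days on either side.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional, Tuple


@dataclass
class SurveyRecord:
    deadline: date
    completed_on: Optional[date]  # None if the survey was never completed


def response_rates(
    records: List[SurveyRecord],
    cycle_start: date,
    cycle_end: date,
    window: timedelta = timedelta(days=14),
) -> Tuple[float, float]:
    """Return (on_time_rate, overall_rate) for one PDSA cycle.

    Assumption: a survey is eligible if its deadline falls within the cycle.
    """
    eligible = [r for r in records if cycle_start <= r.deadline <= cycle_end]
    if not eligible:
        return 0.0, 0.0
    # Overall: completed at any point before the end of the cycle.
    completed = [
        r for r in eligible
        if r.completed_on is not None and r.completed_on <= cycle_end
    ]
    # On-time: completed within the window around the deadline (assumed symmetric).
    on_time = [r for r in completed if abs(r.completed_on - r.deadline) <= window]
    return len(on_time) / len(eligible), len(completed) / len(eligible)
```

Under these definitions, a late response still counts toward the overall rate as long as it arrives before the cycle ends, which is why the two measures can move independently.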

This project aimed to increase survey response rates using PDSA cycles. The PDSA cycles yielded a meaningful improvement in the overall survey response rate, from 57% to 84%. While the on-time response rate minimally improved, the overarching goal of the PI was to maximize survey responses in a resource-limited setting.

There were several limitations evident in this project. The scope of the PI was limited to a single research team. No baseline data for survey response rates were collected, since we felt it was imperative to address the response rate problem immediately. The PDSA cycle has limited utility in research conducted at a single time point; the longitudinal nature of our protocol allowed us the opportunity to address unanticipated barriers. We did not assess whether gains made from the PDSA cycles were sustainable, which would be an important area for future inquiry.

Additional next steps would include replication by other teams, and application of the PDSA cycle to other barriers in the research environment, such as subject recruitment, team turnover, or data collection and processing. It is uncertain based on this single example whether systematic application of PDSA cycles by academic research institutions would help increase resident participation in research or help ensure completion of resident research projects. However, it is reasonable to expect that improving research processes could facilitate resident involvement in research. This is a key area for future inquiry.

While this PI was not designed or evaluated as a curricular intervention, we noted an opportunity to develop an educational model to help learners develop a deeper understanding of the goals and conduct of both PI projects and research studies. By applying both kinds of conceptual learning to the same project, we noted one key difference between PI and research tasks. The hypothesis-driven research study followed a predetermined protocol, while the PI experience involved adopting incremental changes quickly, benefitting or suffering as a result. Unanticipated barriers hindered research but were exactly what PI was designed to address. Similarities also emerged. We needed to answer a question that was measurable, to collect data as completely and correctly as possible, and to work within a team. Notably, we simultaneously met the process improvement and scholarly activity requirements that the ACGME sets for residents.22

Overall, this project serves as an important proof of concept that other research teams could adapt to fit their own needs. Our research team was limited both in funds to hire dedicated personnel and in protected research time for our clinical personnel. We needed to optimize our processes to get the best possible result in a less-than-ideal environment. Our situation is not unique; research resources are relatively scarce in family medicine. PDSA cycles enabled us to overcome unanticipated research barriers in a way that incurred no monetary cost, added minimal additional time, and was impactful. Family medicine researchers may benefit from adopting this strategy for their research challenges.

Acknowledgments

The authors thank program director Dr Heidi Gaddey for her endorsement of this research project and the CenteringPregnancy program to military and residency leaders, as well as her invaluable mentorship during the implementation of the subject research protocol. They thank their research team, including Sheryl Wohleb, RN, Andrea Perez, Danielle Gilder, Elizabeth Wheeler, and Junsup Kim for volunteering their time and energy both to the subject protocol and to the process improvement. They thank the Military Primary Care Research Network (MPCRN) staff and leadership for reviewing an early version of the manuscript.

Funding/Support: This article represents process improvement on a research study supported by a Research Grant, Clinical Research Division, Wilford Hall Ambulatory Surgical Center, Lackland AFB, Texas, as well as a Small Grant, Uniformed Services Academy of Family Physicians. No funding was specifically dedicated to the PDSA cycles.

Disclaimer: The opinions and assertions contained herein are the private views of the authors and are not to be construed as official or as reflecting the views of the US Air Force, the US Government, or the Department of Defense at large.

Presentations: This work was presented as an educational research poster at the 2018 Uniformed Services Academy of Family Physicians Annual Meeting, March 15-19, 2018, in Ponte Vedra Beach, Florida.

References

- Paulsen J, Al Achkar M. Factors associated with practicing evidence-based medicine: a study of family medicine residents. Adv Med Educ Pract. 2018;9:287-293. https://doi.org/10.2147/AMEP.S157792

- Crawford P, Seehusen D. Scholarly activity in family medicine residency programs: a national survey. Fam Med. 2011;43(5):311-317.

- Seehusen DA, Weaver SP. Resident research in family medicine: where are we now? Fam Med. 2009;41(9):663-668.

- Carek PJ, Mainous AG. The state of resident research in family medicine: small but growing. Ann Fam Med. 2008;6(suppl_1):S2-S4. https://doi.org/10.1370/afm.779

- Ledford CJW, Seehusen DA, Villagran MM, Cafferty LA, Childress MA. Resident scholarship expectations and experiences: sources of uncertainty as barriers to success. J Grad Med Educ. 2013;5(4):564-569. https://doi.org/10.4300/JGME-D-12-00280.1

- Bammeke F, Liddy C, Hogel M, Archibald D, Chaar Z, MacLaren R. Family medicine residents’ barriers to conducting scholarly work. Can Fam Physician. 2015;61(9):780-787.

- Young RA, Dehaven MJ, Passmore C, Baumer JG. Research participation, protected time, and research output by family physicians in family medicine residencies. Fam Med. 2006;38(5):341-348.

- Young RA, DeHaven MJ, Passmore C, Baumer JG, Smith KV. Research funding and mentoring in family medicine residencies. Fam Med. 2007;39(6):410-418.

- Levine RB, Hebert RS, Wright SM. Resident research and scholarly activity in internal medicine residency training programs. J Gen Intern Med. 2005;20(2):155-159. https://doi.org/10.1111/j.1525-1497.2005.40270.x

- Gill S, Levin A, Djurdjev O, Yoshida EM. Obstacles to residents’ conducting research and predictors of publication. Acad Med. 2001;76(5):477. https://doi.org/10.1097/00001888-200105000-00021

- Oyler J, Vinci L, Johnson JK, Arora VM. Teaching internal medicine residents to sustain their improvement through the quality assessment and improvement curriculum. J Gen Intern Med. 2011;26(2):221-225. https://doi.org/10.1007/s11606-010-1547-y

- Alweis R, Greco M, Wasser T, Wenderoth S. An initiative to improve adherence to evidence-based guidelines in the treatment of URIs, sinusitis, and pharyngitis. J Community Hosp Intern Med Perspect. 2014;4(1):22958. https://doi.org/10.3402/jchimp.v4.22958

- Jones KB, Gren LH, Backman R. Improving pediatric immunization rates: description of a resident-led clinical continuous quality improvement project. Fam Med. 2014;46(8):631-635.

- Weir SS, Page C, Newton WP. Continuity and Access in an Academic Family Medicine Center. Fam Med. 2016;48(2):100-107.

- Coury J, Schneider JL, Rivelli JS, et al. Applying the Plan-Do-Study-Act (PDSA) approach to a large pragmatic study involving safety net clinics. BMC Health Serv Res. 2017;17(1):411. https://doi.org/10.1186/s12913-017-2364-3

- Daudelin DH, Selker HP, Leslie LK. Applying Process Improvement Methods to Clinical and Translational Research: Conceptual Framework and Case Examples. Clin Transl Sci. 2015;8(6):779-786. https://doi.org/10.1111/cts.12326

- Potts S, Shields S, Upshur C. Preparing Future Leaders: An Integrated Quality Improvement Residency Curriculum. Fam Med. 2016;48(6):477-481.

- Hall Barber K, Schultz K, Scott A, Pollock E, Kotecha J, Martin D. Teaching Quality Improvement in Graduate Medical Education: An Experiential and Team-Based Approach to the Acquisition of Quality Improvement Competencies. Acad Med. 2015;90(10):1363-1367. https://doi.org/10.1097/ACM.0000000000000851

- Accreditation Council for Graduate Medical Education. Family Medicine. Family Medicine Milestones. https://www.acgme.org/Specialties/Milestones/pfcatid/8/Family%20Medicine. Published October 2015. Accessed March 15, 2019.

- Institute for Healthcare Improvement. Science of Improvement: Testing Changes. http://www.ihi.org:80/resources/Pages/HowtoImprove/ScienceofImprovementTestingChanges.aspx. Accessed March 12, 2019.

- Leis JA, Shojania KG. A primer on PDSA: executing plan-do-study-act cycles in practice, not just in name. BMJ Qual Saf. 2017;26(7):572-577. https://doi.org/10.1136/bmjqs-2016-006245

- Accreditation Council for Graduate Medical Education. Common Program Requirements. https://www.acgme.org/What-We-Do/Accreditation/Common-Program-Requirements. Accessed March 12, 2019.
