Direct observation is a critical part of assessing learners’ achievement of the Accreditation Council for Graduate Medical Education (ACGME) Milestones and subcompetencies.1,2 It is well established that direct observations can provide timely, specific, and actionable feedback to allow residents to continue to develop.3,4 Despite this, there remains a paucity of direct observations to inform resident evaluation.5 Resident peer observations may have the ability to fill this gap, and align with the ACGME program requirement to use multisource evaluations (ie, from faculty, peers, staff, and patients). Research demonstrates residents are willing providers of peer feedback6-10 and that peer feedback is a feasible and reliable way to evaluate residents.11-13 The purpose of this paper is to explore the content of residents’ peer observations as they relate to ACGME Milestone subcompetencies.

BRIEF REPORTS

Content Analysis of Family Medicine Resident Peer Observations

Cristen Page, MD, MPH | Alfred Reid, MA | Mallory McClester Brown, MD | Hannah M. Baker, MPH | Catherine Coe, MD | Linda Myerholtz, PhD

Fam Med. 2020;52(1):43-47.

DOI: 10.22454/FamMed.2020.855292

Background and Objectives: Direct observation is a critical part of assessing learners’ achievement of the Accreditation Council for Graduate Medical Education (ACGME) Milestones and subcompetencies. Little research exists identifying the content of peer feedback among residents; this study explored the content of residents’ peer assessments as they relate to ACGME Milestone subcompetencies in a family medicine residency program.

Methods: Using content from a mobile app-based observation tool (M3App), we examined resident peer observations recorded between June 2014 and November 2017, tabulating frequency of observation for each ACGME subcompetency and calculating the proportion of observations categorized under each subcompetency, as well as for each postgraduate year (PGY) class. We also coded each observation on three separate dimensions: “positive,” “constructive,” and “actionable.” We used the χ2 test for independence, and estimated odds ratios and 95% confidence intervals for two-by-two comparisons to compare numbers of observations within each category.

Results: Our data include 886 peer observations made by 54 individual residents. The most frequently observed competencies were in patient care, communication, and professionalism (56%, 47%, and 38% of observations, respectively). Practice-based learning and improvement was observed least frequently (16% of observations). On average, 97% of the observations were positive, 85% were actionable, and 6% were constructive.

Conclusions: When asked to review their peers, residents provide comments that are primarily positive and actionable. In addition, residents tend to provide more feedback on certain subcompetencies compared to others, suggesting that programs may rely on peer feedback for specific subcompetencies. Peers can provide perspective on the behaviors and skills of fellow residents.

Setting and Data Collection

The University of North Carolina (UNC) at Chapel Hill’s Family Medicine Residency Program is an academic program with 11 residents per class and two fourth-year chief residents. The residency program uses the M3App, which allows faculty and residents to enter a narrative description of an observation on their phone, laptop, or computer and assign it to one or more subcompetencies; results are distributed to residents, advisors, and the program’s Clinical Competency Committee (CCC). M3App was originally developed for family medicine Milestones but is now available to all specialties for a fee, which helps to cover nonprofit costs. Additional details of the M3App’s use and function are described elsewhere.14,15

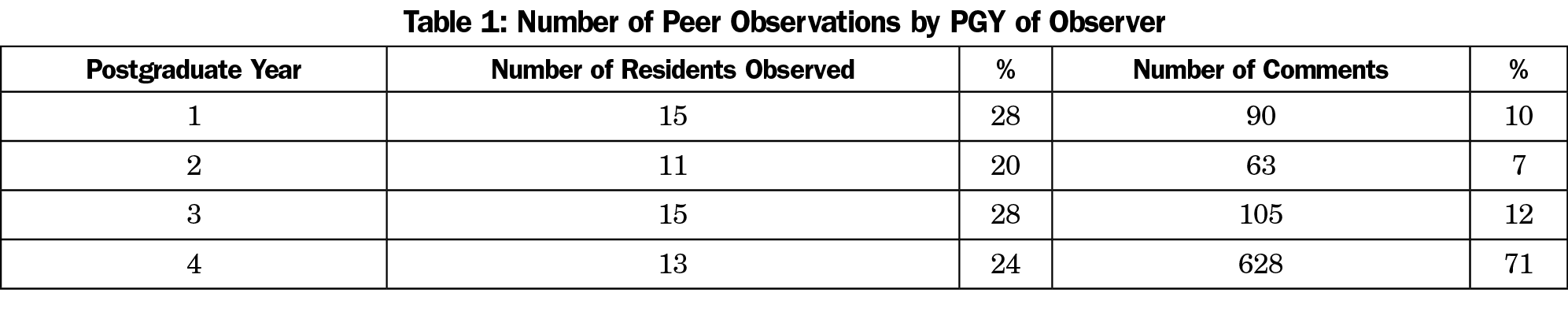

To examine the content of peer observations entered into the M3App, we tabulated resident peer observations recorded between the implementation of the M3App in June 2014 and November 2017 by postgraduate year (PGY) of the resident observed (learner), PGY of the observing resident (observer), and ACGME subcompetency. Fourth-year chief residents and other fellows (all denoted PGY4) provided observations but were not observed in the M3App system.

The UNC Chapel Hill Institutional Review Board determined this study to be exempt (IRB #17-3108).

Analysis

We tabulated the frequency of observations for each of the ACGME subcompetencies and calculated the proportion of observations categorized under each subcompetency. Additionally, we tabulated the proportion of observations made by members of each PGY class and about members of each PGY class.
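The tabulation described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' actual procedure: the record layout, the field names (`observer_pgy`, `learner_pgy`, `subcompetencies`), the tags `MK2` and `PROF2`, and all data values are hypothetical. Because one observation may carry several subcompetencies, the proportions across subcompetencies can sum to more than 100%.

```python
from collections import Counter

# Hypothetical records (invented for illustration): each observation has the
# observer's PGY, the learner's PGY, and one or more subcompetency tags.
observations = [
    {"observer_pgy": 4, "learner_pgy": 1, "subcompetencies": ["PC1", "C3"]},
    {"observer_pgy": 2, "learner_pgy": 1, "subcompetencies": ["C3"]},
    {"observer_pgy": 4, "learner_pgy": 3, "subcompetencies": ["PC4", "PROF2", "C3"]},
    {"observer_pgy": 3, "learner_pgy": 2, "subcompetencies": ["MK2"]},
]


def subcompetency_proportions(records):
    """Map each tag to (count, share of all observations carrying that tag)."""
    # set() guards against a tag being listed twice on one observation.
    counts = Counter(tag for rec in records for tag in set(rec["subcompetencies"]))
    total = len(records)
    return {tag: (n, n / total) for tag, n in counts.items()}


def class_proportions(records, key):
    """Share of observations per PGY class, made by (observer) or about (learner)."""
    counts = Counter(rec[key] for rec in records)
    total = len(records)
    return {pgy: n / total for pgy, n in counts.items()}


by_tag = subcompetency_proportions(observations)
by_observer = class_proportions(observations, "observer_pgy")
by_learner = class_proportions(observations, "learner_pgy")
```

Counting each tag once per observation keeps the denominator equal to the number of observations, which matches the way the proportions are reported in this paper.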

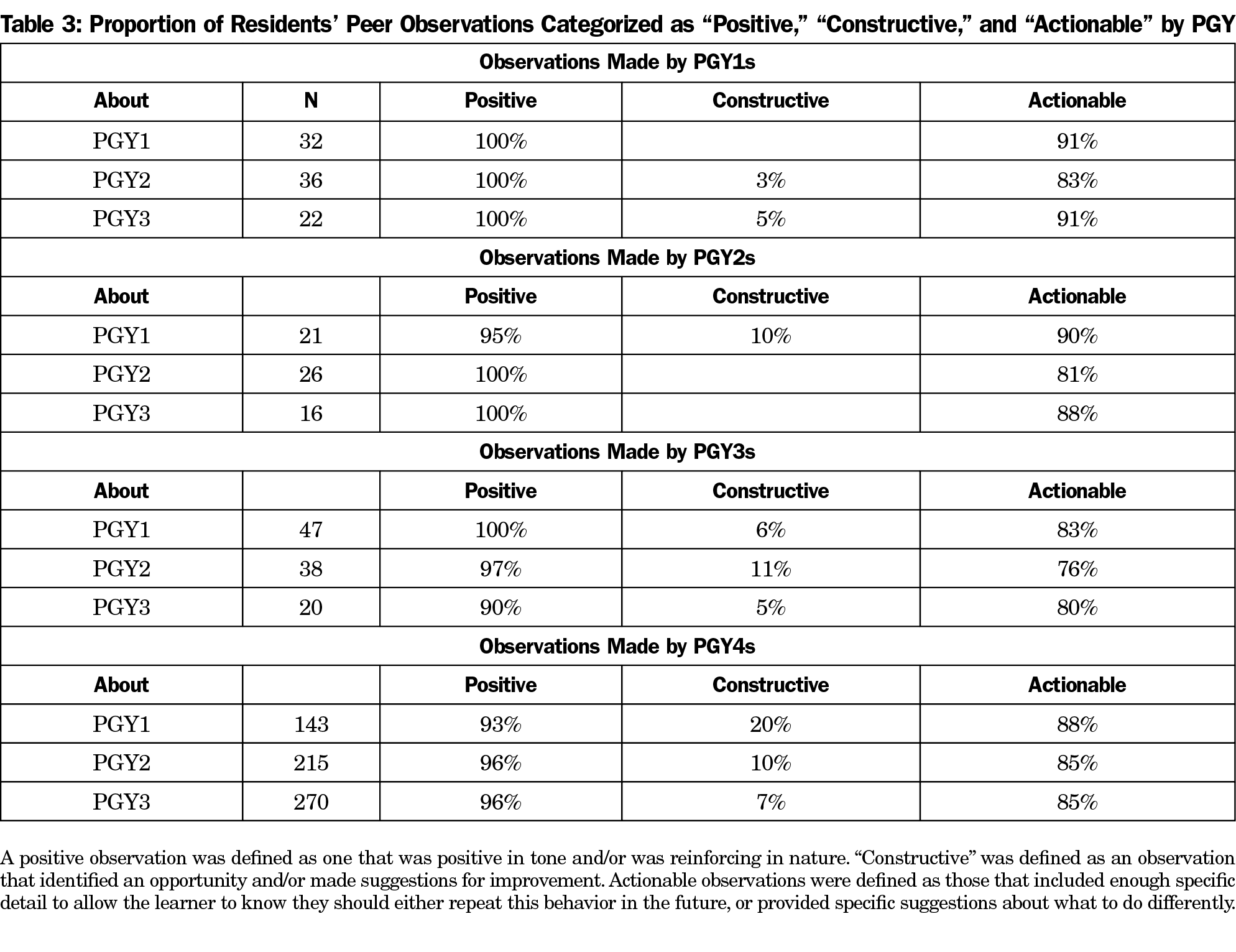

Using a deductive content analysis approach, the research team coded each observation on three separate dimensions: “positive,” “constructive,” and “actionable.” These dimensions are based on common perspectives regarding categorizing feedback, and definitions are found in Table 3. Following best practices of coding qualitative data,16 three researchers coded separate sections of the observations, with a fourth researcher then coding a subset of all to assess intercoder reliability. Through group discussion the team achieved consensus regarding conflicting codes and refined code definitions; observations were then recoded using the refined definitions. We compared numbers of observations within each category across all postgraduate years, using the χ2 test for independence and estimated odds ratios and 95% confidence intervals for two-by-two comparisons.
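As a rough sketch of the statistics named above, the following Python computes a Pearson χ2 statistic for a contingency table and an odds ratio with a 95% confidence interval for a two-by-two table. The paper does not state which interval method or software was used; the Wald (log-scale) interval here is an assumption chosen for simplicity, and the counts are invented for illustration.

```python
import math


def chi_square_independence(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of rows and columns.
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, df


def odds_ratio_wald_ci(a, b, c, d, z=1.96):
    """Odds ratio for the table [[a, b], [c, d]] with a Wald interval.

    Assumption: Wald interval on the log scale; all four cells must be nonzero.
    """
    odds_ratio = (a * d) / (b * c)
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(odds_ratio) - z * se_log)
    upper = math.exp(math.log(odds_ratio) + z * se_log)
    return odds_ratio, lower, upper


# Hypothetical counts: rows are two observer groups, columns are
# observations coded as actionable vs not actionable.
table = [[20, 10], [10, 20]]
statistic, df = chi_square_independence(table)
odds_ratio, lower, upper = odds_ratio_wald_ci(20, 10, 10, 20)
```

With small cell counts, an exact interval may differ noticeably from the Wald interval, so this sketch should not be expected to reproduce the intervals reported in this paper.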

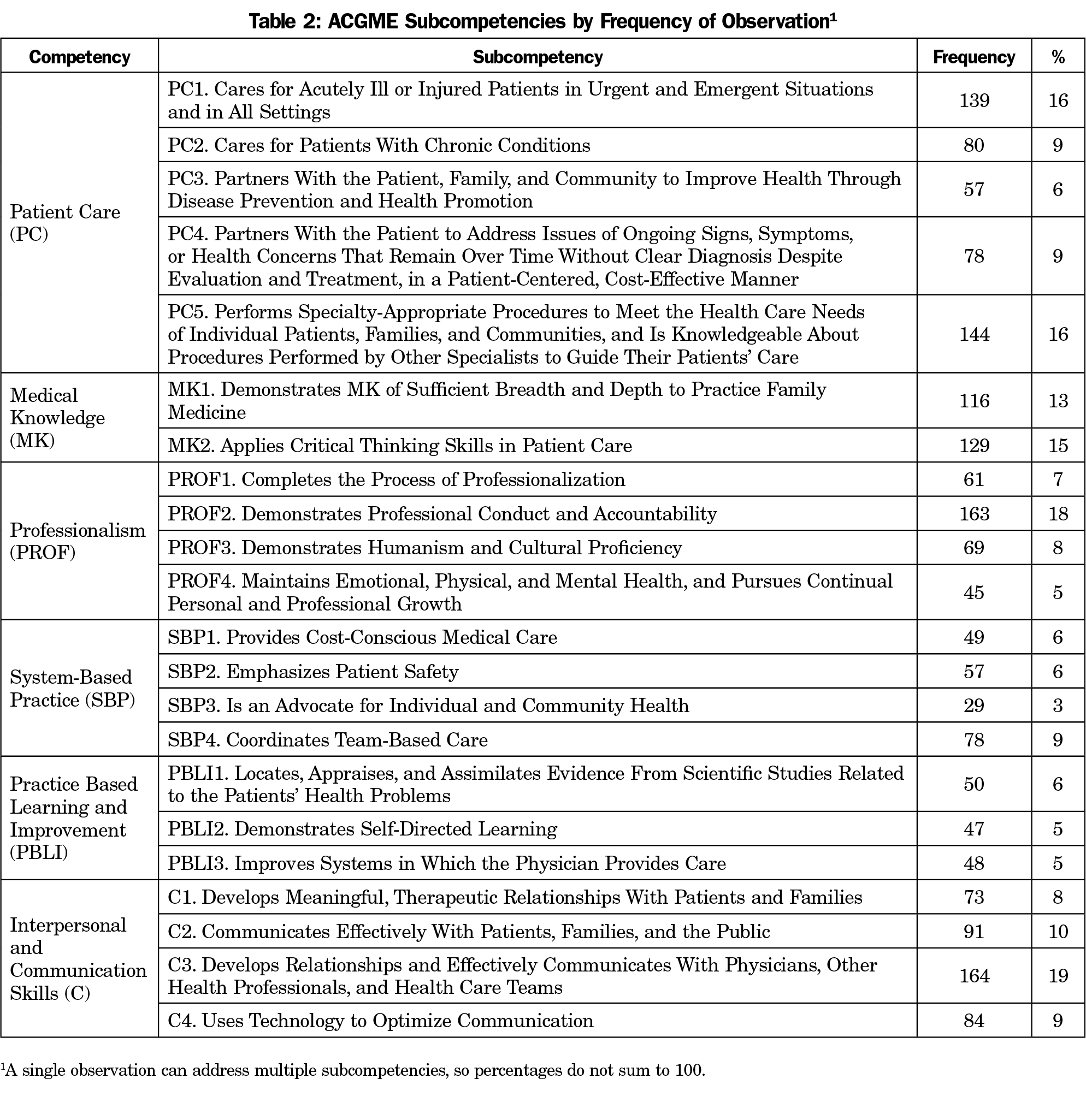

Our data include 886 peer observations made by 54 residents (Table 1) during inpatient and outpatient clinical and academic encounters. The most frequently observed competencies were in patient care (56%), communication (47%), and professionalism (38%), followed by medical knowledge (28%) and systems-based practice (24%). Practice-based learning and improvement (16%) was observed least frequently. Table 2 describes each subcompetency and shows the subcompetencies by frequency of observation, with Communication 3 (C3, n=164) being the most frequent and Systems-Based Practice 3 (SBP3, n=29) being the least frequent.

Figure 1 shows patterns in subcompetencies observed by residents in learners of each postgraduate year. On average, there were more observations on Patient Care (PC) 1-3 for first-year residents and more observations on average for PC4-PC5 for third-year residents. Notably, each subcompetency accounted for a greater-than-average number of observations of at least one PGY class, though none did so for all three classes. Similar to Figure 1, Figure 2 summarizes the proportion of observations by each PGY class. PGY-4 observers, for example, made more than the average number of observations on nine subcompetencies. The PGY-4 residents were more likely on average to make observations about PC2, PC5, and Professionalism 2. None of the PGY classes made more than the average number of observations of SBP4.

Table 3 shows proportions of observations made by and about each PGY class coded as positive, constructive, and actionable. On average, 97% of the observations were positive, 85% were actionable, and 6% were constructive. There were no statistically significant differences in the numbers of observations in any category between PGY1s, PGY2s, and PGY3s. Comparing PGY1-3 observations with those made by PGY4s, we found PGY4s equally likely to make constructive observations (OR 1.1; 95% CI 0.75, 1.69), one-third as likely to make positive observations (OR 0.34; 95% CI 0.10, 0.90), and more than twice as likely to make actionable observations (OR 2.49; 95% CI 1.35, 4.87).

These results indicate that when asked to review their peers, residents provide comments that are primarily positive, which is consistent with published literature6,7 and has been shown to encourage and reinforce positive behaviors.17 They also show that comments provided on peer behavior are largely actionable, which is consistent with best practices for feedback provision.18,19 Furthermore, constructive observations were often embedded in positive comments; many constructive observations indicated that the feedback was being documented formally in writing following in-person review, reflecting best practices for feedback provision.1 Residents tend to provide more feedback on certain subcompetencies compared to others; this may provide evidence that programs should rely on peers to provide feedback on certain subcompetencies but not on others.8,10 While we did not poll residents on why some subcompetencies are more commonly commented on than others, we hypothesize that these are simpler to understand and perhaps more commonly observed by residents.

Direct observation during residency training allows evaluators to more accurately assess a resident’s progression through the program and ACGME Milestones. Though previously driven by faculty feedback, several studies have identified that peer review is an important part of a residency program’s evaluation system. Peers are able to provide additional contextual information for CCCs regarding resident performance, and residents are able to observe behaviors and actions that faculty members do not, providing unique perspectives into resident performance.8,20,21 However, it is important to consider the implications of requiring peer feedback and the impact that may have on the important relationship dynamics among residents.6,7,22

Our results have limitations. The data are from a single family medicine residency program, limiting generalizability to other programs or specialties. The majority of the observations (71%) were recorded by PGY-4 residents, which may further limit generalizability given that many residency programs do not have PGY-4 residents. Arguably, PGY-4 residents are not true peers, considering their leadership responsibilities. However, PGY-4 residents have unique relationships and observation opportunities with respect to other PGY levels. Additionally, even if many programs do not have PGY-4 residents, chief residents with additional responsibilities are a common feature of many residencies. Thus, the expectation of increased feedback from residents in leadership roles may be generalizable across other programs. In addition, we analyzed the data without regard to the accuracy of the subcompetency assignment. We did not solicit feedback on why residents choose specific subcompetencies or how comfortable or uncomfortable they felt about giving constructive feedback.

Despite these limitations, the results provide evidence for the content of resident peer feedback. Peers can provide perspective on the behavior and skills of fellow residents. Additional research should investigate the impact of peer feedback on behavior change.

Acknowledgments

Competing interests: Cristen Page, a coinvestigator on this study, serves as chief executive officer of Mission3, the educational nonprofit organization that has licensed the M3App tool from UNC. The data from this study were acquired from the M3App. If the technology or approach is successful in the future, Dr Page and UNC Chapel Hill may receive financial benefits.

References

- Kogan JR, Hatala R, Hauer KE, Holmboe E. Guidelines: the do’s, don’ts and don’t knows of direct observation of clinical skills in medical education. Perspect Med Educ. 2017;6(5):286-305. https://doi.org/10.1007/s40037-017-0376-7

- Accreditation Council for Graduate Medical Education. Milestones. https://www.acgme.org/What-We-Do/Accreditation/Milestones/Overview. Published 2018. Accessed July 17, 2019.

- Holmboe ES. Realizing the promise of competency-based medical education. Acad Med. 2015;90(4):411-413. https://doi.org/10.1097/ACM.0000000000000515

- Hamburger EK, Cuzzi S, Coddington DA, et al. Observation of resident clinical skills: outcomes of a program of direct observation in the continuity clinic setting. Acad Pediatr. 2011;11(5):394-402. https://doi.org/10.1016/j.acap.2011.02.008

- Holmboe ES. Faculty and the observation of trainees’ clinical skills: problems and opportunities. Acad Med. 2004;79(1):16-22. https://doi.org/10.1097/00001888-200401000-00006

- Bing-You R, Varaklis K, Hayes V, Trowbridge R, Kemp H, McKelvy D. The feedback tango: an integrative review and analysis of the content of the teacher-learner feedback exchange. Acad Med. 2018;93(4):657-663. https://doi.org/10.1097/ACM.0000000000001927

- Rudy DW, Fejfar MC, Griffith CH III, Wilson JF. Self- and peer assessment in a first-year communication and interviewing course. Eval Health Prof. 2001;24(4):436-445. https://doi.org/10.1177/016327870102400405

- Dupras DM, Edson RS. A survey of resident opinions on peer evaluation in a large internal medicine residency program. J Grad Med Educ. 2011;3(2):138-143. https://doi.org/10.4300/JGME-D-10-00099.1

- Hale AJ, Nall RW, Mukamal KJ, et al. The effects of resident peer- and self-chart review on outpatient laboratory result follow-up. Acad Med. 2016;91(5):717-722. https://doi.org/10.1097/ACM.0000000000000992

- de la Cruz MSD, Kopec MT, Wimsatt LA. Resident perceptions of giving and receiving peer-to-peer feedback. J Grad Med Educ. 2015;7(2):208-213. https://doi.org/10.4300/JGME-D-14-00388.1

- Thomas PA, Gebo KA, Hellmann DB. A pilot study of peer review in residency training. J Gen Intern Med. 1999;14(9):551-554. https://doi.org/10.1046/j.1525-1497.1999.10148.x

- Dine CJ, Wingate N, Rosen IM, et al. Using peers to assess handoffs: a pilot study. J Gen Intern Med. 2013;28(8):1008-1013. https://doi.org/10.1007/s11606-013-2355-y

- Snydman L, Chandler D, Rencic J, Sung YC. Peer observation and feedback of resident teaching. Clin Teach. 2013;10(1):9-14. https://doi.org/10.1111/j.1743-498X.2012.00591.x

- Page CP, Reid A, Coe CL, et al. Learnings from the pilot implementation of mobile medical milestones application. J Grad Med Educ. 2016;8(4):569-575. https://doi.org/10.4300/JGME-D-15-00550.1

- Page C, Reid A, Coe CL, et al. Piloting the mobile medical milestones application (M3App©): a multi-institution evaluation. Fam Med. 2017;49(1):35-41.

- Saldana J. The Coding Manual for Qualitative Researchers. 3rd ed. Thousand Oaks, CA: SAGE Publications Ltd; 2016. https://www.sagepub.com/sites/default/files/upm-binaries/24614_01_Saldana_Ch_01.pdf. Accessed July 16, 2018.

- Voyer S, Cuncic C, Butler DL, MacNeil K, Watling C, Hatala R. Investigating conditions for meaningful feedback in the context of an evidence-based feedback programme. Med Educ. 2016;50(9):943-954. https://doi.org/10.1111/medu.13067

- Ende J. Feedback in clinical medical education. JAMA. 1983;250(6):777-781. https://doi.org/10.1001/jama.1983.03340060055026

- Gigante J, Dell M, Sharkey A. Getting beyond “good job”: how to give effective feedback. Pediatrics. 2011;127(2):205-207. https://doi.org/10.1542/peds.2010-3351

- Helfer RE. Peer evaluation: its potential usefulness in medical education. Br J Med Educ. 1972;6(3):224-231. https://doi.org/10.1111/j.1365-2923.1972.tb01662.x

- Wendling A, Hoekstra L. Interactive peer review: an innovative resident evaluation tool. Fam Med. 2002;34(10):738-743.

- Van Rosendaal GMA, Jennett PA. Comparing peer and faculty evaluations in an internal medicine residency. Acad Med. 1994;69(4):299-303. https://doi.org/10.1097/00001888-199404000-00014

Lead Author

Cristen Page, MD, MPH

Affiliations: Department of Family Medicine, University of North Carolina, Chapel Hill

Co-Authors

Alfred Reid, MA - Department of Family Medicine, University of North Carolina, Chapel Hill

Mallory McClester Brown, MD - Department of Family Medicine, University of North Carolina, Chapel Hill

Hannah M. Baker, MPH - Lineberger Comprehensive Cancer Center, University of North Carolina, Chapel Hill

Catherine Coe, MD - Department of Family Medicine, University of North Carolina, Chapel Hill

Linda Myerholtz, PhD - Department of Family Medicine, University of North Carolina, Chapel Hill

Corresponding Author

Linda Myerholtz, PhD

Correspondence: 590 Manning Drive CB# 7595, Chapel Hill, NC 27599-7595. 919-962-4764. Fax: 919-843-3418.

Email: Linda_Myerholtz@med.unc.edu
