Background and Objectives: Residents as teachers (RAT) and medical students as teachers (MSAT) programs are important for the development of future physicians. In 2010, Northwestern University Feinberg School of Medicine (NUFSM) aligned RAT and MSAT programs, which created experiential learning opportunities in teaching and feedback across the graduate and undergraduate medical education continuum. The purpose of this study was to provide a curricular overview of the aligned program and to evaluate early outcomes through analysis of narrative feedback quality and participant satisfaction.

Methods: Program evaluation occurred through analysis of written feedback quality provided within the aligned program and postparticipation satisfaction surveys. A total of 445 resident feedback narratives were collected from 2013 to 2016. We developed a quality coding scheme using an operational definition of feedback. After independent coding of feedback quality, an expert panel established coding consensus. We evaluated program satisfaction and perceived importance through posttraining surveys in residents and fourth-year medical students (M4s).

Results: Seventy-nine residents participated in the aligned program and provided high-quality feedback with a mean quality rating of 2.72 (scale 0-3). Consistently high-quality written feedback was provided over the duration of the program and regardless of years of resident participation. Posttraining surveys demonstrated high levels of satisfaction and perceived importance of the program to both residents and M4s.

Conclusions: The aligned RAT and MSAT program across the medical education continuum provided experiential learning opportunities for future physician educators with evidence of high-quality written feedback to learners and program satisfaction.

Teaching continues to be recognized by the Accreditation Council for Graduate Medical Education (ACGME) and Liaison Committee on Medical Education (LCME) as an essential skill for future physicians.1-3 Residents as teachers (RAT) and medical students as teachers (MSAT) programs are attracting more attention in graduate and undergraduate medical education as an approach to improving teaching skills,4,5 with RAT programs established at 55% of ACGME-accredited residencies across multiple specialties.6

As separate entities, both RAT and MSAT programs have been described and evaluated in the literature.1,7,8 MSAT programs have demonstrated positive outcomes in learner assessment, knowledge attainment, and development of future physician educators.4,9 RAT programs have also demonstrated benefits to both the resident teacher and student learner in attitude, knowledge, communication, and professional development.10-16 For the purpose of this paper, we will more generally define both RAT and MSAT programs as teacher-training programs. Despite the well-documented benefits, there are difficulties implementing such teacher-training programs given faculty and resident time constraints.4,17 Furthermore, there is significant curricular variability across institutions in graduate and undergraduate medical education teacher-training programs.7,8 Linking teacher-training programs across graduate medical education (GME) and undergraduate medical education (UME) promotes alignment of medical education resources and curricula. This linkage is strongly supported by statements from the ACGME Sponsoring Institution 2025, whose future vision for residency programs included increased alignment of UME/GME educational methods.18

In 2010, Northwestern University Feinberg School of Medicine (NUFSM), in collaboration with McGaw Medical Center of Northwestern University (McGaw), aligned teacher-training programs to generate a program across the GME and UME continuum. The goal of the aligned program was for resident and fourth-year medical student (M4) trainees to demonstrate effective techniques for teaching in small groups and to give effective feedback as a clinical teacher. The alignment of teaching programs satisfied a resident desire to teach, decreased faculty time constraints, created consistency in training across the continuum, and provided experiential learning in teaching and feedback to residents and medical students.

We hypothesized that the aligned teacher-training program across the medical education continuum provided educational benefits for both residents and medical students through high-quality feedback and satisfying experiential learning opportunities. Given these potential benefits, the purpose of this report was to provide a curricular overview and evaluate the early outcomes of the program through analysis of narrative feedback quality and participant satisfaction.

Program Description

Since 2005, all M4s at NUFSM have been required to teach M1/M2s in a course called the Teaching Selective. GME-UME program alignment occurred in 2010, with creation of an elective resident teaching program that invited residents to provide feedback to M4s on their teaching skills. Both programs had the same objectives, including giving effective feedback as a clinical teacher and assessing and improving personal performance through the creation of an individual improvement plan. We chose these two objectives because the relevant accrediting bodies for each group (LCME and ACGME) both have standards in these domains. In designing the RAT program, aligning resources and teaching the same standards for both groups was the key to achieving high-quality feedback across the continuum. Residents and M4s receive separate yet identical training (in-person and video modules) in providing effective feedback and teaching, followed by experiential learning opportunities to develop skills through practice and reflection.

Both residents and M4s participate in multiple experiential learning opportunities for teaching and feedback. In the Teaching Selective, M4s teach and provide feedback on history, communication, and physical exam skills to M1/M2 small groups. Throughout the year, M4s teach an average of 12 hours. The M4s are videotaped while teaching, and all M4s review their teaching videos for self-assessment. Each M4 teaching video is also reviewed by a resident who provides in-person verbal and written feedback to the M4. Residents provide feedback to four to five M4s throughout the academic year. Both residents and M4s complete posttraining satisfaction surveys.

Medical student participation in the aligned program is mandatory and resident participation is voluntary. Residents receive a letter of completion signed by the course director, designated institutional official, and the senior associate dean for medical education to include in their record, but receive no additional incentives for their participation.

Program Evaluation

Evaluation of the aligned teacher-training program occurred through analysis of written feedback quality and program satisfaction, providing an evaluation of the program goal to give feedback as a clinical teacher. Sources of data for the program evaluation included written feedback from residents to M4s on their teaching skills, and posttraining satisfaction survey data from residents and M4s. Two raters analyzed written feedback provided during the teaching program using preestablished codes (described below) to assess the quality of feedback. Evaluation of participant reaction to the program occurred through analysis of resident and M4 satisfaction survey data.

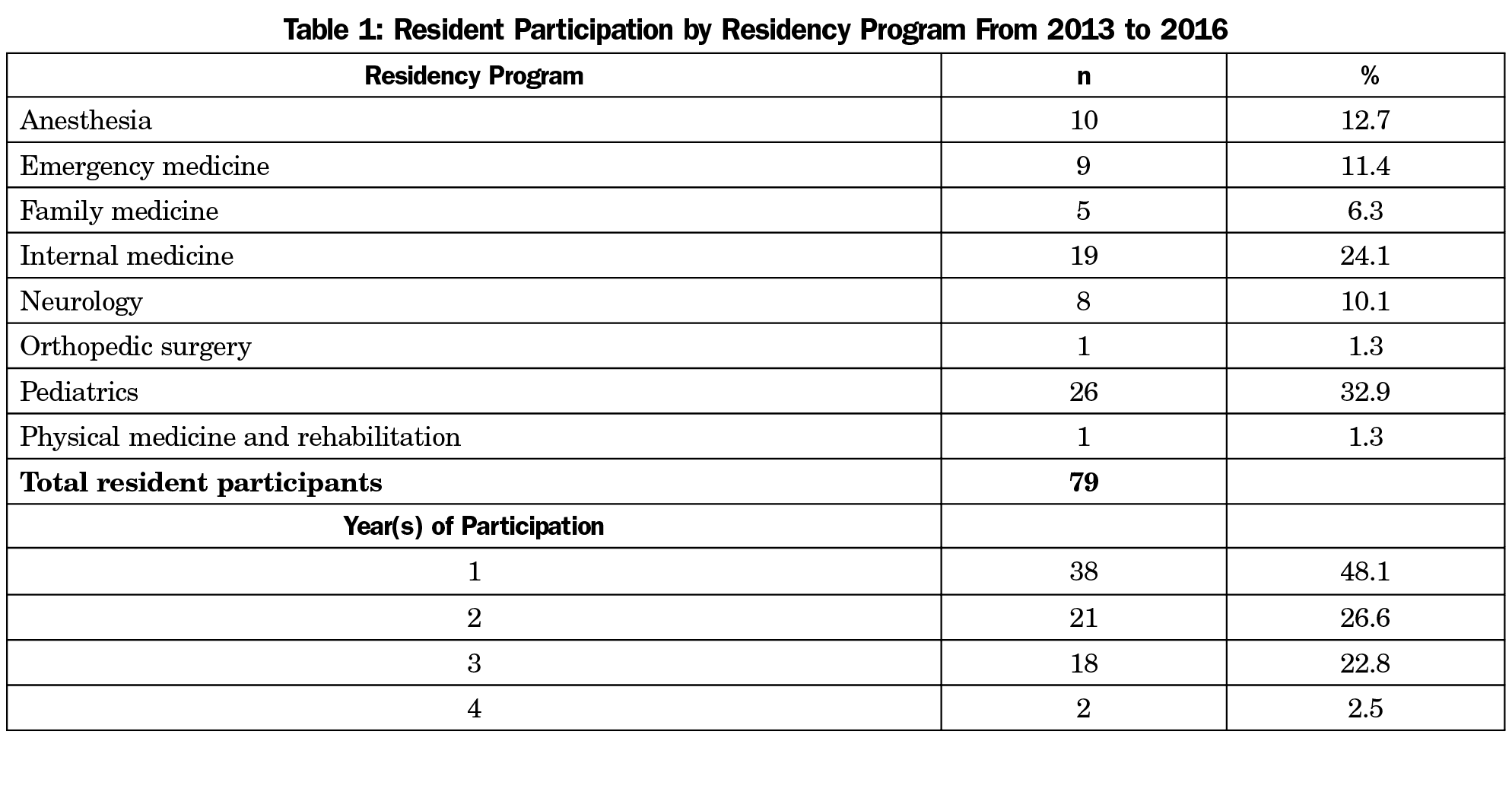

Residents provided in-person and written feedback to M4s on their teaching skills over 3 academic years from 2013 to 2016. Only written feedback was captured and analyzed for the purpose of this initial program evaluation. In this time period, a total of 79 residents and 445 M4s participated in the program, including residents from multiple residency programs with varying years of participation (Table 1). Prior to analysis in 2017, written feedback was deidentified, and codes were utilized to distinguish academic year, residency program, and number of years participating in the teacher-training program.

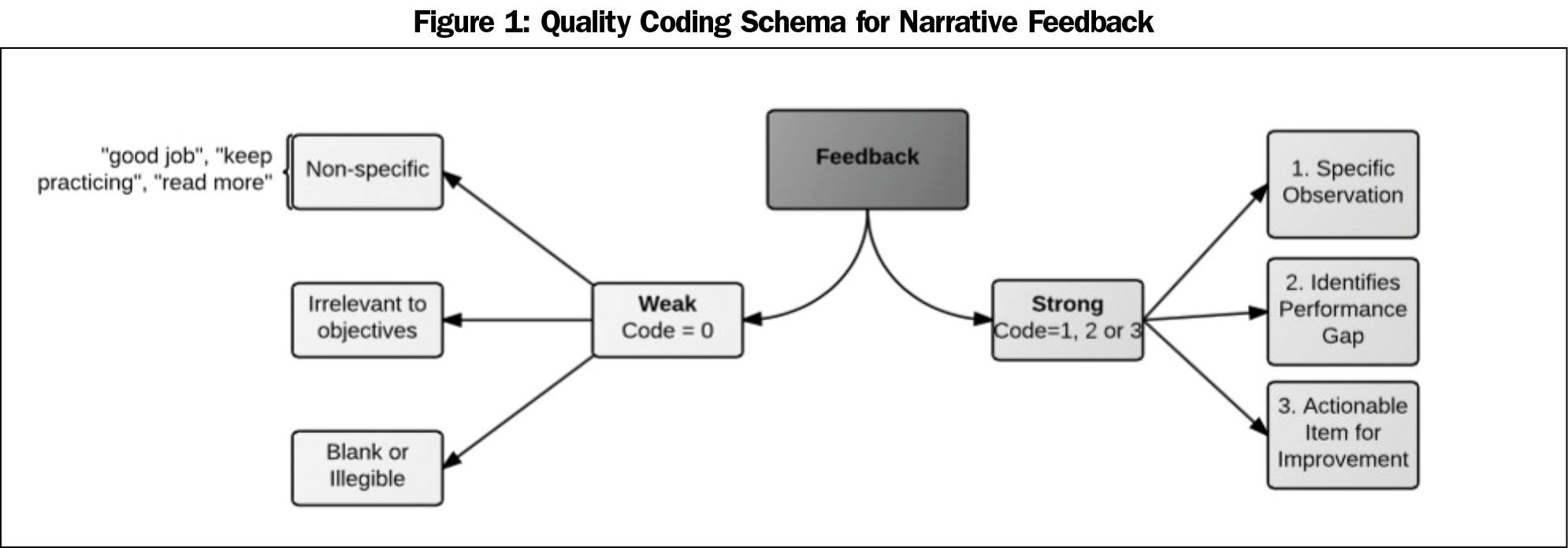

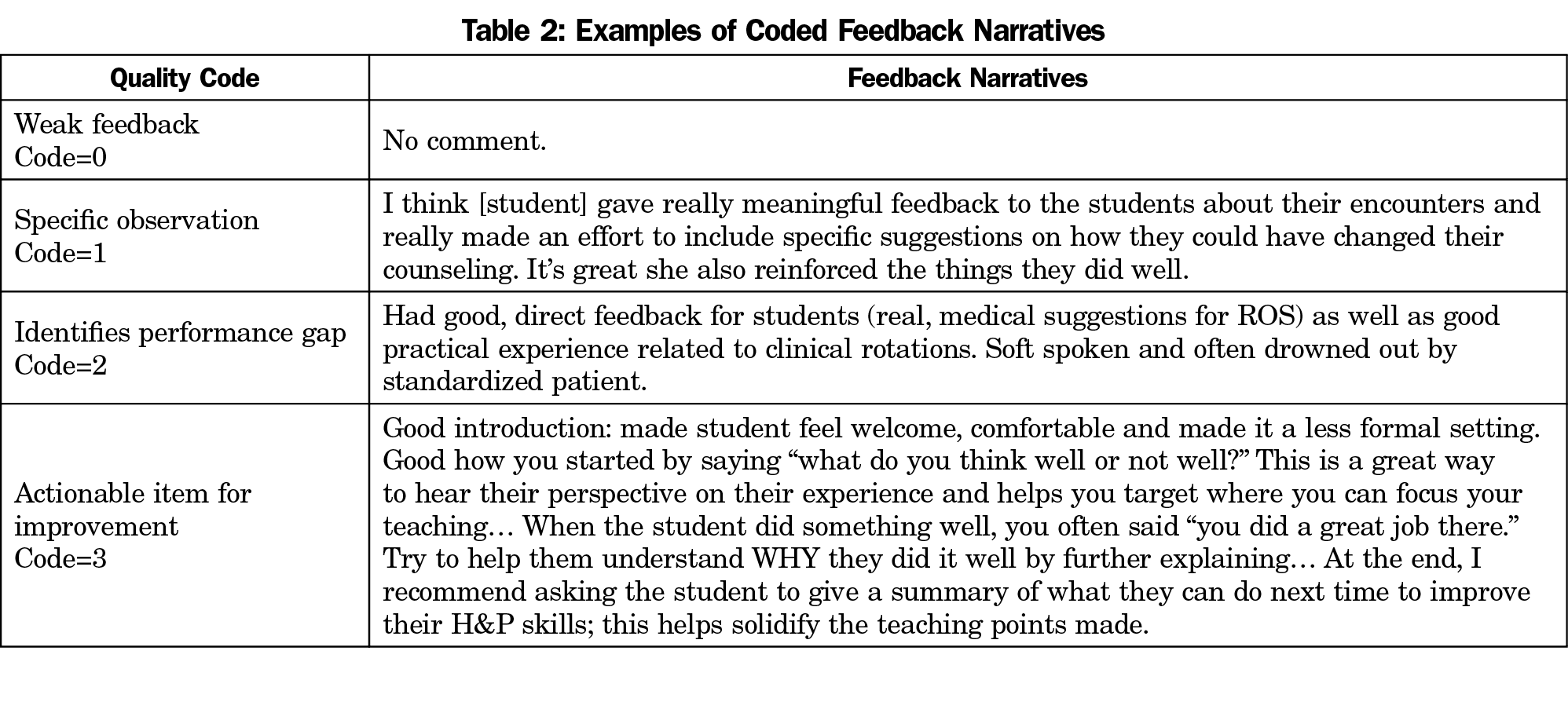

Quality ratings of written feedback were derived from a previously established operational definition of feedback in clinical education developed by van de Ridder et al, which describes quality feedback as “specific information about the comparison between a trainee’s observed performance and a standard, given with the intent to improve.”19 Using the three components of quality feedback defined by van de Ridder, the authors developed a single numeric quality coding scheme (Figure 1). Those three components are specific information (ie, specific observation), comparison between observed performance and a standard (ie, performance gap), and intent to improve (ie, actionable item for improvement). This is in alignment with family medicine program director and resident identification of quality feedback as containing actionable, specific information.20

Feedback containing any of the qualities defined by van de Ridder was deemed as strong with further coding quantification depending on the content. Specifically, strong feedback was coded on a scale from 1 to 3, with 3 being the highest quality rating. With the goal in feedback to ultimately improve or modify the performance of the learner, we gave the highest quality code (code=3) to feedback stating an actionable item for improvement. Identifying a performance gap (code=2) captured modifying feedback without a specific item for improvement. Finally, making a specific observation (code=1) captured reinforcing feedback and specific performance traits without identifying a performance gap or an area for improvement. Feedback containing none of these qualities was deemed weak in the quality coding scheme. Specifically, weak feedback (code=0) included nonspecific, blank, or irrelevant feedback. Specific examples of each type of feedback and the accompanying codes are provided in Table 2.
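The coding rule described above can be sketched in a few lines. This is only an illustration, assuming each narrative has already been judged by a human rater on three yes/no components; the function name and the boolean flags are hypothetical and are not part of the original coding instrument.

```python
def quality_code(has_specific_observation: bool,
                 has_performance_gap: bool,
                 has_actionable_item: bool) -> int:
    """Return the 0-3 quality code for one feedback narrative.

    The highest-ranked component present determines the code:
    actionable item (3) > performance gap (2) > specific observation (1).
    A narrative with none of the three components is weak (0).
    The three flags are hypothetical rater judgments.
    """
    if has_actionable_item:
        return 3
    if has_performance_gap:
        return 2
    if has_specific_observation:
        return 1
    return 0


# A narrative with a specific observation and a performance gap,
# but no actionable item for improvement, receives code 2.
example_code = quality_code(True, True, False)
```

The sketch makes the hierarchy explicit: a narrative is assigned a single code, so the presence of an actionable item takes precedence over the other two components.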

Two independent coders (R.B. and T.U.) pilot coded eighteen feedback narratives to discover discrepancies among coding definitions and establish concordance between the two independent coders. Coders and an expert panel (R.B., T.U., K.W., C.P., E.R.) discussed ratings to reach a consensus on code definitions before completing full data analysis. After independent qualitative coding of all 445 resident narratives, discrepancies between the two independent coders (R.B. and T.U.) were discussed with a panel of experts (R.B., T.U., C.P., E.R.) to reach consensus on the final code.

Quantitative analysis of participant reaction to the program was based on resident and M4 posttraining satisfaction survey data from 2011 to 2016. Upon completion of the teacher-training program, both groups were emailed a link to a final course satisfaction survey. The survey asked residents and M4s to rate on a scale of 1 to 6 (1=extremely dissatisfied, 6=extremely satisfied) their “overall satisfaction participating in the Teaching Selective program,” and “perceived importance of this program to your experience/education as a learner.”

We analyzed data using SPSS version 24 for Windows. All tests used an α level of 0.05. We calculated descriptive statistics including means and standard deviations, and we calculated grand means by averaging overall quality ratings across residents in each residency program. To determine whether or not quality ratings varied by residency program, we used a mixed-model analysis to account for repeated observations by the same resident. Northwestern University Feinberg School of Medicine Institutional Review Board determined this program evaluation to be exempt from further review (ID STU00203143).
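One possible reading of the grand-mean calculation is sketched below: first average the quality codes for each resident, then average those resident means within each residency program. The records, program labels, and function name are hypothetical toy values for illustration only, and the sketch does not reproduce the SPSS mixed-model analysis.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical toy records: (residency program, resident id, quality code).
toy_records = [
    ("Program A", "r1", 3), ("Program A", "r1", 2),
    ("Program A", "r2", 3),
    ("Program B", "r3", 3), ("Program B", "r3", 3), ("Program B", "r3", 0),
]


def grand_means(records):
    """Average codes per resident, then average resident means per program."""
    codes_by_resident = defaultdict(list)
    for program, resident, code in records:
        codes_by_resident[(program, resident)].append(code)

    resident_means_by_program = defaultdict(list)
    for (program, _resident), codes in codes_by_resident.items():
        resident_means_by_program[program].append(mean(codes))

    return {program: mean(values)
            for program, values in resident_means_by_program.items()}


toy_grand_means = grand_means(toy_records)
```

Averaging within residents first keeps a resident who wrote many narratives from dominating the program-level value, which is the same concern the mixed model addresses more formally.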

Resident to M4 Narrative Feedback

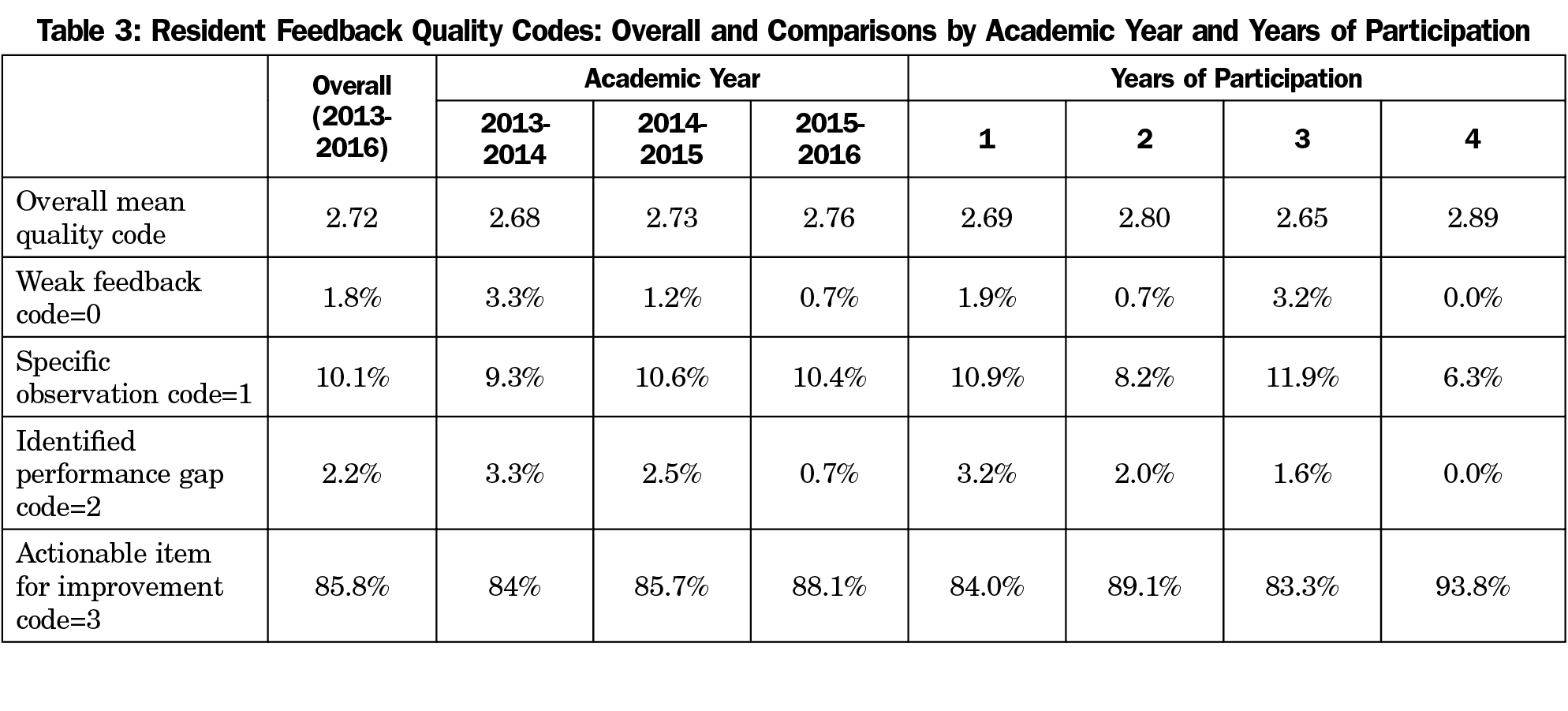

Seventy-nine residents participated in the aligned program from 2013 to 2016 and wrote 445 feedback narratives, all of which were included in the analysis (100% response rate). The overall mean quality rating of resident feedback was 2.72 with a standard deviation of 0.71 (Table 3). Among the narratives, 1.8% (8/445) were coded as weak feedback, 10.1% (45/445) were coded as providing a specific observation, and 2.2% (10/445) were coded as identifying a performance gap. The remaining 85.8% (382/445) received the highest quality code of providing an actionable item for improvement.
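The summary statistics can be cross-checked directly from the reported code counts. The sketch below uses only the four counts given in the text; the variable names are illustrative, and the choice of the population form of the standard deviation is an assumption, since the text does not state which form was used.

```python
from statistics import mean, pstdev

# Narrative counts per quality code, as reported in the text.
counts = {0: 8, 1: 45, 2: 10, 3: 382}

# Expand the counts into one rating per narrative.
ratings = [code for code, n in counts.items() for _ in range(n)]
total = len(ratings)

overall_mean = mean(ratings)   # rounds to 2.72
overall_sd = pstdev(ratings)   # population SD (assumed form), about 0.71

# Share of narratives at each code, as a percentage to one decimal place.
percentages = {code: round(100 * n / total, 1) for code, n in counts.items()}
```

The counts sum to 445 and reproduce the reported mean of 2.72 and the reported percentages of 1.8%, 10.1%, 2.2%, and 85.8%.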

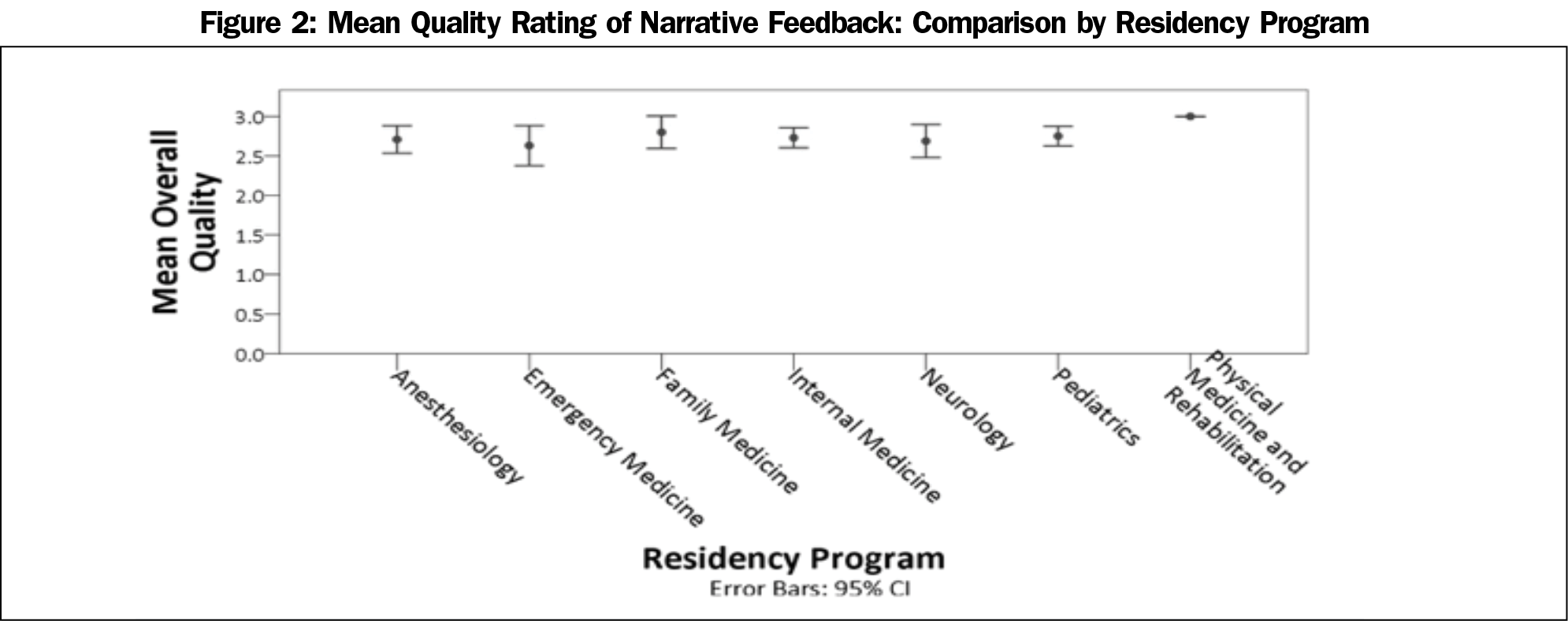

We analyzed quality ratings of written feedback by academic year, years of resident participation in the aligned program, and residency program (Table 3 and Figure 2). The quality of feedback provided by residents to M4s remained consistently high over time. The highest quality code of actionable item for improvement (code 3) was assigned to 84% (126/150) of narratives in 2013-14, 85.7% (138/161) in 2014-15, and 88.1% (118/134) in 2015-16. The frequency of weak feedback narratives also remained consistently low over the years: 3.3% (5/150) in 2013-14, 1.2% (2/161) in 2014-15, and 0.7% (1/134) in 2015-16. Differences by academic year were not statistically significant (P=.87). When analyzed by years of participation, feedback with an actionable item for improvement occurred in 84% of narratives (131/156) with 1 year of participation, 89% (131/147) with 2 years of participation, 83.3% (105/126) with 3 years of participation, and 93.8% (15/16) with 4 years of participation. Differences by years of participation were not statistically significant (P=.74). When divided by specialty, the mean quality coding for each residency program was greater than 2.5 (Figure 2).

Program Satisfaction

Postparticipation resident and medical student satisfaction surveys evaluated the program as a whole. Resident mean satisfaction with participation in the aligned teacher-training program ranged from 4.7 to 5.1 on a Likert scale of 1 to 6 (1=extremely dissatisfied, 6=extremely satisfied) from 2011 to 2016. Medical student mean satisfaction with participation in the program ranged from 4.7 to 5.2 on the same scale. Resident perceived importance of the aligned program to their experience or education as a learner ranged from a mean of 4.1 to 5.6 on a scale of 1 to 6 (1=extremely unimportant, 6=extremely important) from 2011 to 2016. Medical student mean perceived importance of the program ranged from 4.4 to 4.7.

Evaluation of the aligned teacher-training program found evidence of high-quality written feedback from resident trainees in all programs and satisfaction by all participants, while providing experiential learning opportunities in teacher-training across the UME-GME continuum. High-quality written feedback was provided across all of the residency specialties. M4 trainees received high-quality feedback and all trainees received experiential learning through recurrent practice in teaching and feedback. Our study demonstrated the aligned RAT and MSAT program achieved high-quality written feedback, which is one component of effective teaching. However, future research is needed to evaluate the magnitude of effect of high-quality feedback on learners. The curriculum also fostered new near-peer opportunities to socialize with residents and medical students outside of their own training programs through meetings established within the aligned program, although this was not directly studied in this initial program evaluation.

Feedback is essential in clinical learning and has been shown to change a physician’s clinical performance.21 For that reason, it is vital that our trainees receive high-quality feedback in the aligned teacher-training program as it can impact their performance as a learner and future clinician. The quality of resident to M4 feedback remained consistently high through each academic year, years of participation, and residency program, thus ensuring M4s received this essential feedback regardless of the resident with whom they were paired. For the resident participants, this continued to satisfy a desire to teach while reinforcing the practice of providing high-quality feedback without the need for significant faculty intervention. Lastly, as an organization, the creation of the program aligned UME and GME educational resources while fulfilling shared ACGME and LCME standards. This high-quality feedback was likely multifactorial and cannot be fully credited to the aligned program. Outside influences included an institutional focus on giving feedback and teaching opportunities outside of the aligned program.

Kirkpatrick’s model of program evaluation encourages evaluation beyond learner satisfaction.22 This program evaluation incorporated multiple levels of Kirkpatrick’s model, including reaction via satisfaction surveys and behavior via feedback quality analysis. A high level of resident satisfaction was essential to the sustainability of the aligned teacher-training program given that resident participation was optional. This sustainability was evident as a secondary finding in the multiple years of participation and wide range of resident participants from various residency programs (Table 1).

We utilized several strategies to increase validity and reliability in this program evaluation. We addressed the validity of feedback quality by utilizing an operational definition of feedback from the literature, independent rater coding, and use of an expert panel to establish coding consensus. Although a singular definition of feedback is not universally accepted in the education literature, feedback as defined by van de Ridder et al has been frequently referenced in recent literature.23-26 In analysis of satisfaction surveys and feedback quality, attempts were made to decrease construct underrepresentation by including all resident data from several years. By increasing the sample size, we achieved a more reliable reflection of the population mean quality feedback and satisfaction ratings.

Despite efforts to minimize threats to validity and reliability, a limitation of this study is self-selection bias, as resident participation in the program was optional. Second, a ceiling effect limits the ability to distinguish the degree of feedback quality, with the majority of learners receiving the highest quality code. Further, we suspect the feedback quality ratings may actually underrepresent the quality of feedback through construct-irrelevant variance, as written feedback did not reflect all narrative (verbal and written) feedback given during the feedback session. For example, in-person verbal feedback may have been more detailed and of higher quality than written feedback that was recorded, and therefore coded, as “no comment.”

The aligned teacher-training program demonstrated evidence of success across the educational continuum in providing high-quality written feedback, providing experiential learning opportunities, and contributing to accreditation standards for both learner groups. Medical students received high-quality feedback from residents, and trainees reported satisfaction with the aligned program. Further investigation is needed to demonstrate the impact of high-quality feedback on learners and the long-term impact of the aligned teacher-training program on future faculty development and professional identity formation.

Acknowledgments

The authors thank senior leadership from Northwestern University Feinberg School of Medicine (Marianne Green, MD, Diane B. Wayne, MD, John X Thomas, PhD, Raymond Curry, MD), McGaw Medical Center of Northwestern University Designated Institutional Official Joshua Goldstein, MD, and Program Directors Lousanne Carabini, MD, John Bailitz, MD, Mike Gisondi, MD, Deborah Edberg, MD, Deborah Smith Clements, MD, Aashish Didwania, MD, Danny Bega, MD, Monica Rho, MD, Matthew Beal, MD, John Sullivan, MD, Richard Dsida, MD, Susan Gerber, MD, Magdy Milad, MD, Roneil Malkani, MD, Tanya Simuni, MD and Sharon Unti, MD, for supporting the alignment of the resident and student teaching programs.

Funding/Support: Funding for the Teaching Selective course was internally provided by the Augusta Webster Office of Medical Education at Northwestern University Feinberg School of Medicine. The authors report no external funding for this study.

Previous Presentation: Data in this manuscript were presented by Robyn Bockrath, MD, MEd, at the 2017 AAMC Learn Serve Lead conference in Boston, Massachusetts, Educating Physicians and Scientists session titled “Highlights in Medical Education Innovations: Education for Learners.”

References

- Hill AG, Yu TC, Barrow M, Hattie J. A systematic review of resident-as-teacher programmes. Med Educ. 2009;43(12):1129-1140. https://doi.org/10.1111/j.1365-2923.2009.03523.x

- Accreditation Council for Graduate Medical Education. ACGME Common Program Requirements. https://www.acgme.org/Portals/0/PFAssets/ProgramRequirements/CPRResidency2019.pdf. Accessed March 4, 2020.

- Liaison Committee on Medical Education. Functions and Structure of a Medical School: Standards for Accreditation of Medical Education Programs Leading to the MD Degree. https://med.virginia.edu/ume-curriculum/wp-content/uploads/sites/216/2016/07/2017-18_Functions-and-Structure_2016-03-24.pdf. Published March 2016. Accessed March 4, 2020.

- Soriano RP, Blatt B, Coplit L, et al. Teaching medical students how to teach: a national survey of students-as-teachers programs in U.S. medical schools. Acad Med. 2010;85(11):1725-1731. https://doi.org/10.1097/ACM.0b013e3181f53273

- Morrison EH, Hafler JP. Yesterday a learner, today a teacher too: residents as teachers in 2000. Pediatrics. 2000;105(1 Pt 3):238-241.

- Morrison EH, Friedland JA, Boker J, Rucker L, Hollingshead J, Murata P. Residents-as-teachers training in U.S. residency programs and offices of graduate medical education. Acad Med. 2001;76(10)(suppl):S1-S4. https://doi.org/10.1097/00001888-200110001-00002

- Pasquinelli LM, Greenberg LW. A review of medical school programs that train medical students as teachers (MED-SATS). Teach Learn Med. 2008;20(1):73-81. https://doi.org/10.1080/10401330701798337

- Wamsley MA, Julian KA, Wipf JE. A literature review of “resident-as-teacher” curricula: do teaching courses make a difference? J Gen Intern Med. 2004;19(5 Pt 2):574-581. https://doi.org/10.1111/j.1525-1497.2004.30116.x

- Naeger DM, Conrad M, Nguyen J, Kohi MP, Webb EM. Students teaching students: evaluation of a “near-peer” teaching experience. Acad Radiol. 2013;20(9):1177-1182. https://doi.org/10.1016/j.acra.2013.04.004

- Chokshi BD, Schumacher HK, Reese K, et al. A “Resident-as-Teacher” Curriculum Using a Flipped Classroom Approach: Can a Model Designed for Efficiency Also Be Effective? Acad Med. 2017;92(4):511-514. https://doi.org/10.1097/ACM.0000000000001534

- Muzyk AJ, White CD, Kinghorn WA, Thrall GC. A psychopharmacology course for psychiatry residents utilizing active-learning and residents-as-teachers to develop life-long learning skills. Acad Psychiatry. 2013;37(5):332-335. https://doi.org/10.1176/appi.ap.12060124

- Gandy RE, Richards WO, Rodning CB. Action of student-resident interaction during a surgical clerkship. J Surg Educ. 2010;67(5):275-277. https://doi.org/10.1016/j.jsurg.2010.07.003

- Luciano GL, Carter BL, Garb JL, Rothberg MB. Residents-as-teachers: implementing a toolkit in morning report to redefine resident roles. Teach Learn Med. 2011;23(4):316-323. https://doi.org/10.1080/10401334.2011.611762

- Post RE, Quattlebaum RG, Benich JJ III. Residents-as-teachers curricula: a critical review. Acad Med. 2009;84(3):374-380. https://doi.org/10.1097/ACM.0b013e3181971ffe

- Morrison EH, Shapiro JF, Harthill M. Resident doctors’ understanding of their roles as clinical teachers. Med Educ. 2005;39(2):137-144. https://doi.org/10.1111/j.1365-2929.2004.02063.x

- Zabar S, Hanley K, Stevens DL, et al. Measuring the competence of residents as teachers. J Gen Intern Med. 2004;19(5 Pt 2):530-533. https://doi.org/10.1111/j.1525-1497.2004.30219.x

- Rotenberg BW, Woodhouse RA, Gilbart M, Hutchison CR. A needs assessment of surgical residents as teachers. Can J Surg. 2000;43(4):295-300.

- Duval JF, Opas LM, Nasca TJ, Johnson PF, Weiss KB. Report of the SI2025 Task Force. J Grad Med Educ. 2017;9(6)(suppl):11-57. https://doi.org/10.4300/1949-8349.9.6s.11

- van de Ridder JM, Stokking KM, McGaghie WC, ten Cate OT. What is feedback in clinical education? Med Educ. 2008;42(2):189-197. https://doi.org/10.1111/j.1365-2923.2007.02973.x

- Myerholtz L, Reid A, Baker HM, Rollins L, Page CP. Residency Faculty Teaching Evaluation: What Do Faculty, Residents, and Program Directors Want? Fam Med. 2019;51(6):509-515. https://doi.org/10.22454/FamMed.2019.168353

- Veloski J, Boex JR, Grasberger MJ, Evans A, Wolfson DB. Systematic review of the literature on assessment, feedback and physicians’ clinical performance: BEME Guide No. 7. Med Teach. 2006;28(2):117-128. https://doi.org/10.1080/01421590600622665

- Kirkpatrick DL. Evaluating Training Programs: The Four Levels. 2nd ed. San Francisco, CA: Berrett-Koehler Publishers; 1998.

- Ajjawi R, Regehr G. When I say … feedback. Med Educ. 2019;53(7):652-654. https://doi.org/10.1111/medu.13746

- Armson H, Lockyer JM, Zetkulic M, Könings KD, Sargeant J. Identifying coaching skills to improve feedback use in postgraduate medical education. Med Educ. 2019;53(5):477-493. https://doi.org/10.1111/medu.13818

- van der Leeuw RM, Teunissen PW, van der Vleuten CPM. Broadening the scope of feedback to promote its relevance to workplace learning. Acad Med. 2018;93(4):556-559. https://doi.org/10.1097/ACM.0000000000001962

- Ramani S, Könings KD, Ginsburg S, van der Vleuten CP. Feedback Redefined: Principles and Practice. J Gen Intern Med. 2019;34(5):744-749. https://doi.org/10.1007/s11606-019-04874-2
