Introduction: Pelvic examination training and competency-based assessment are expensive and time consuming. Our goal was to use the results of skill evaluation early in residency to identify residents who required training.

Methods: Incoming residents performed pelvic examinations with gynecological teaching assistants. Faculty observed residents performing examinations with clinic patients to assess for competency. Teaching assistants and faculty completed written assessments of residents. A regression-based software tool was used to determine which items in early resident performance best predicted subsequent competency.

Results: Sixty-eight residents were evaluated. Thirty-eight (56%) residents were not able to demonstrate competency in three clinical exams and therefore received more observation. One third of evaluations were completed by faculty performing ≤1 evaluation per year. Two items were found most likely to identify a resident who required further training (“identifies cervix” and “properly assembles equipment”). The model based on these items had a sensitivity of 100% (95% confidence interval [CI]=86-100%) and specificity of 36.8% (95% CI=22-54%).

Conclusions: A model using early skills assessments was not sufficiently specific to identify residents who needed more training. Next steps include limiting the number of faculty assessors, faculty development to improve discriminatory capacity, and creating separate processes for (1) providing feedback and identifying learning needs in new residents, and (2) documenting competency in performance of pelvic exams.

Accreditation Council for Graduate Medical Education (ACGME) Milestones Project requirements include competency-based evaluation of resident physicians in many areas, including pelvic examination.1 Although the US Preventive Services Task Force found insufficient evidence to assess the balance of benefits and harms of screening pelvic examinations in asymptomatic, nonpregnant women, pelvic examination is essential for evaluation of abdominal, urologic, and pelvic symptoms.2

Training and assessment are expensive and time consuming. Residents matriculate with varied levels of prior experience. Prior to initiating formal pelvic examination training, our residency received reports from staff, residents, and faculty that some residents were not comfortable performing pelvic examinations. In response, we created a training program for residents to improve their skills using gynecology teaching assistants (GTAs). Briefly, a GTA is a trained layperson who allows a learner to examine her in order to provide feedback regarding the learner’s bimanual, speculum, and communication skills. Although programs using GTAs to teach pelvic examination skills to medical students have demonstrated benefit, no published studies have evaluated the use of GTAs with resident physicians.3-7 Our goal was to accurately predict which residents would require additional pelvic examination training based on results of early skill evaluation and/or demographic characteristics of residents (such as self-reported exam experience or gender, since anecdotally we found female residents had more pelvic exam experience in medical school). Our hypothesis was that the quality of early performance of one or more microskills would predict subsequent performance and allow residency faculty to better allocate training resources.

Incoming residents self-identified learning needs related to pelvic examinations. Residents performed two pelvic examinations with GTAs who were aware of their learning needs. During intern year, the residents were subsequently observed by residency faculty while performing pelvic examinations for at least three continuity clinic patients. Faculty and GTAs evaluated residents using a checklist of microskills that included domains of communication, planning, and dexterity.8 Additionally, faculty provided a global assessment of the residents’ competency in performing each pelvic examination. Each resident required three satisfactory evaluations by faculty before the residency program considered them competent to perform pelvic exams without supervision. Serial faculty evaluations were halted when a resident’s overall performance during three exams was rated as competent. Exploratory linear regression analysis of resident demographics and assessment results was performed using an Excel-based software tool (described by Braun and Oswald in 2011, and available by direct download from this author at http://dl.dropbox.com/u/2480715/ERA.xlsm?dl=1).9 This tool performs ordinary least squares (OLS) regression on each of the (2^P - 1) possible combinations of P predictors and produces a number of indicators of predictor importance.
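The all-subsets approach described above can be illustrated with a minimal Python sketch. This is not the Excel tool itself: the function names (`ols_r_squared`, `all_subsets_ols`), the predictor labels, and the data are hypothetical, and the sketch ranks subsets by R² only, whereas the published tool also reports other importance indices. It uses only the standard library; in practice a numerical library would normally solve the least-squares step.

```python
from itertools import combinations


def ols_r_squared(rows, y):
    """Return R^2 for an OLS fit (with intercept) of y on the given predictor rows."""
    design = [[1.0, *row] for row in rows]  # prepend the intercept column
    k = len(design[0])
    # Augmented normal equations [X'X | X'y].
    a = [
        [sum(r[i] * r[j] for r in design) for j in range(k)]
        + [sum(r[i] * yi for r, yi in zip(design, y))]
        for i in range(k)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(k):
            if r != col:
                factor = a[r][col] / a[col][col]
                a[r] = [x - factor * p for x, p in zip(a[r], a[col])]
    beta = [a[i][k] / a[i][i] for i in range(k)]
    fitted = [sum(b * x for b, x in zip(beta, row)) for row in design]
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot


def all_subsets_ols(data, y):
    """Fit OLS on every non-empty subset of predictors; best R^2 first."""
    names = list(data)
    results = []
    for size in range(1, len(names) + 1):
        for subset in combinations(names, size):
            rows = list(zip(*(data[name] for name in subset)))
            results.append((subset, ols_r_squared(rows, y)))
    return sorted(results, key=lambda item: item[1], reverse=True)


# Hypothetical data: three predictors, outcome driven entirely by item_a.
data = {
    "item_a": [0, 1, 2, 3, 4, 5],
    "item_b": [1, 0, 1, 0, 1, 1],
    "item_c": [2, 1, 0, 3, 1, 0],
}
outcome = [2 + 3 * v for v in data["item_a"]]
results = all_subsets_ols(data, outcome)  # 2**3 - 1 = 7 candidate models
```

With P predictors the loop fits 2^P - 1 models, so the number of candidate models grows exponentially with P, which is one reason such tools cap the number of predictors.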

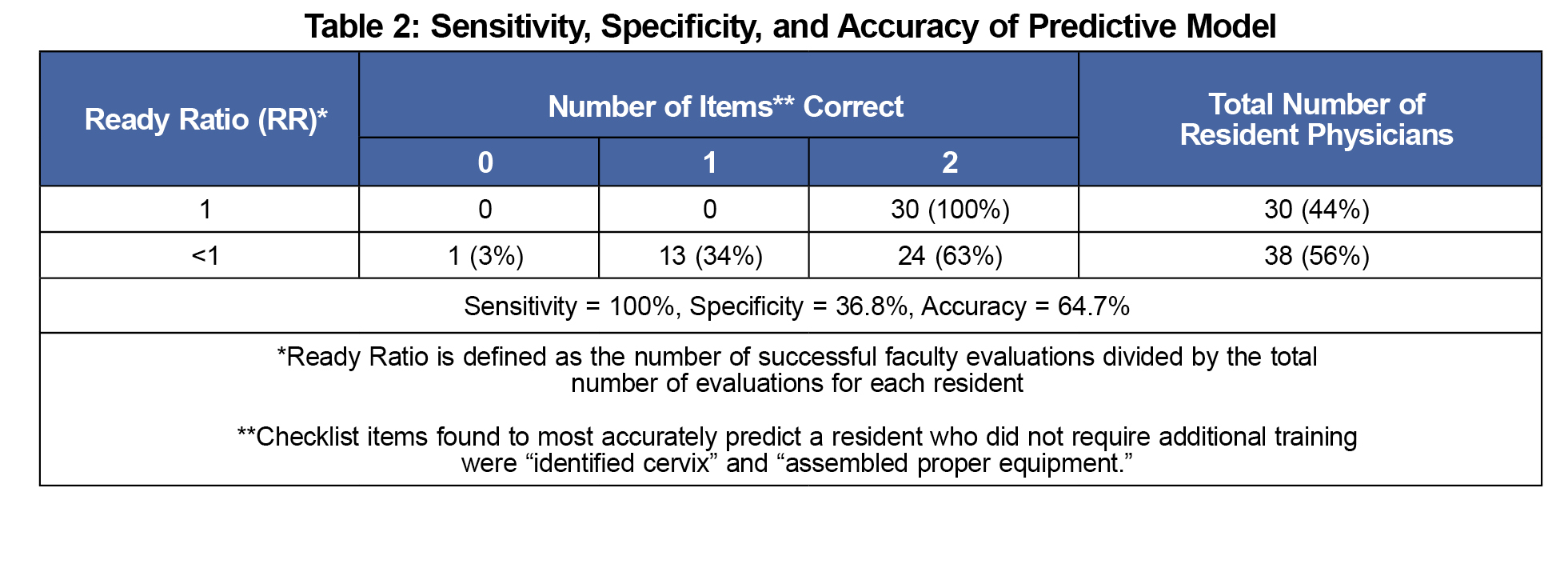

Results of this analysis were used to create a model in which early resident performance predicted subsequent competency. To quantify each resident’s success in performing the pelvic exam in clinic, we calculated a Ready Ratio (RR), defined as the number of successful faculty evaluations divided by the total number of faculty evaluations for that resident. Because both counts are small whole numbers, RR can take only a limited set of discrete values.
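The Ready Ratio definition can be written as a short Python sketch. The function name `ready_ratio` and the example evaluation sequences are hypothetical and are not taken from the study data; the exact fraction is kept to make the discrete nature of RR visible.

```python
from fractions import Fraction


def ready_ratio(evaluations):
    """Ready Ratio: successful faculty evaluations / total faculty evaluations.

    `evaluations` is a list of booleans, True when faculty rated the
    observed exam as competent (hypothetical input format).
    """
    if not evaluations:
        raise ValueError("at least one evaluation is required")
    return Fraction(sum(evaluations), len(evaluations))


# Hypothetical residents, not study data.
rr_first_three = ready_ratio([True, True, True])
rr_seven_exams = ready_ratio([False, True, False, False, True, False, True])
```

In this illustration a resident rated competent on each of three exams has an RR of 1, and a resident with three successful evaluations out of seven has an RR of 3/7, or about 0.43.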

This research was granted an exemption from formal review by the University of Wisconsin School of Medicine and Public Health institutional review board.

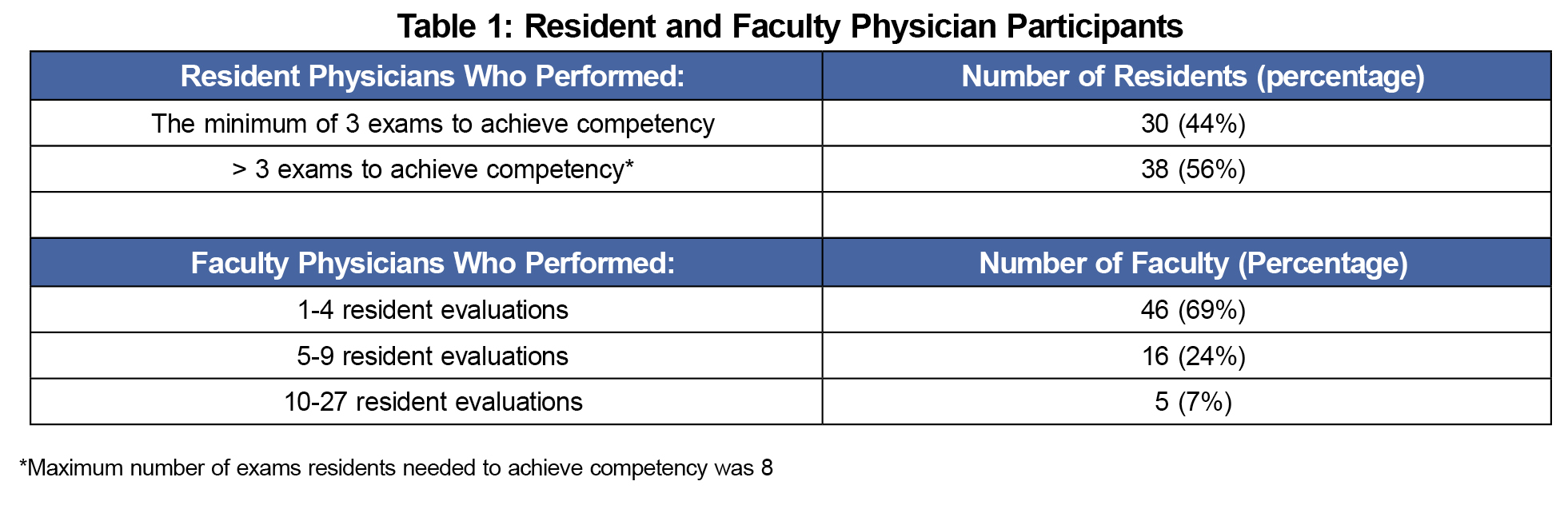

Sixty-eight residents were evaluated by 67 faculty members from 2009 to 2014 in a US family medicine residency program with five continuity clinic sites in urban, suburban, and rural locations. The number of evaluations needed to qualify as competent ranged from three to eight. More than half of the residents (56%) required more than the minimum of three observed pelvic exams (Table 1). The amount of time between the residents’ GTA examinations and the completion of their minimum of three observed pelvic exams with clinic patients ranged from 1 to 12 months. Most of the faculty who performed evaluations (69%) performed between one and four evaluations over a 5-year period (Table 1).

Ready Ratios ranged from 0.43 to 1.0. Their distribution was negatively skewed, and commonly used transformations (eg, square root) could not remedy this.

The Braun and Oswald software tool9 generated 511 possible regression analysis models (2^9 - 1) involving nine microskill predictors (nine being the limit of the tool) with the highest regression weights and indices of model adequacy (incremental R² [change in coefficient of determination], general dominance weights, and relative importance weights).

We included demographic information such as gender and clinic site in possible models because (1) anecdotally we found incoming female residents completed more pelvic exams during medical school, and (2) our residency clinic sites were varied in location and patient population. We included the date that the first pelvic examination was evaluated in clinic because we postulated that an early exam would suggest interest in this skill and/or opportunity to apply what was learned with the GTAs. However, neither the items above nor the residents’ average score given by the GTAs accurately predicted RR.

The checklist items most likely to be missed (and therefore the items that most accurately predicted the RR) were “identifies the cervix” (unstandardized coefficient=0.730 [P<.001, 95% confidence interval {CI}=0.351 to 1.110]), and “properly assembles equipment” (unstandardized coefficient=0.618 [P=.004, 95% CI=0.210 to 1.026]). The model with these two checklist items had the best indices of adequacy as defined by the software tool. This model was 100% sensitive (95% CI=85.9%-100%) but only 36.8% specific (95% CI=22.3%-54.0%) in predicting a high RR. In addition, these two items explained only 8% of the variance in RR. See Table 2 for calculation of sensitivity, specificity, and accuracy of our model.
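For readers less familiar with these measures, the following Python sketch shows how sensitivity, specificity, and accuracy are derived from a 2x2 classification table. The function name `diagnostic_metrics` is hypothetical, and the counts are invented for illustration: they were chosen only so that the point estimates resemble the reported 100% and 36.8%, and they do not reproduce Table 2.

```python
def diagnostic_metrics(tp, fn, tn, fp):
    """Compute sensitivity, specificity, and accuracy from 2x2 table counts.

    tp/fn: residents with the outcome who were flagged / not flagged by the model.
    tn/fp: residents without the outcome who were not flagged / flagged.
    """
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / (tp + fn + tn + fp),
    }


# Invented counts for illustration only; these are NOT the counts in Table 2.
metrics = diagnostic_metrics(tp=10, fn=0, tn=7, fp=12)
```

With these invented counts, no resident with the outcome is missed (sensitivity 10/10), but only 7 of the 19 residents without the outcome are correctly left unflagged (specificity about 36.8%). This is the pattern of a model that flags nearly everyone: it misses no one but discriminates poorly.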

Our model based on early skills assessments was not sufficiently specific to identify residents who would need more extensive training to achieve competency in performing pelvic examination.

The results show that 44% of residents completed all supervised examinations successfully. This observed high pass rate does not coincide with anecdotal stories of former residents struggling with the pelvic exam. There are several possible explanations for this discrepancy. The current residents could be highly skilled, or we may have underestimated the abilities of former residents. Our tool did not assess residents’ self-confidence in performing this exam. More likely, however, the current faculty or the checklist method of evaluation were not sufficiently discriminating. Since many faculty evaluate only a few examinations per year, they may require more training to be able to distinguish competent residents from those who require more training. Additionally, even for residents who were not judged to be competent on any one evaluation, most individual microskill checklist items were noted to be completed successfully. This suggests evaluators were using criteria other than the microskills (and not captured in the current checklist) to determine competency.

Our study was limited by the lack of a control group (ie, residents who did not receive training by GTAs). The specificity of our model would likely be improved by (1) standardizing faculty evaluations through faculty development, (2) conducting qualitative interviews with faculty who perform many evaluations to determine the criteria, not currently on the checklist, that they use to determine competency, and/or (3) using a smaller number of faculty to perform the assessments. Additionally, assessment of an intern’s learning needs versus assessment of competency (later in residency) may require different processes or tools.

A predictive model based on early skills assessments by gynecological teaching assistants and family medicine faculty was not sufficiently specific to identify residents who would need more extensive training to achieve competency in performing pelvic examination. Next steps could include limiting the number of faculty assessors, faculty development to improve discriminatory capacity, and/or creating separate processes for (1) providing feedback and identifying learning needs in new residents, and (2) documenting competency in performance of pelvic exams.

Acknowledgments

The authors thank the Summer Student Research and Clinical Assistantship program of the University of Wisconsin School of Medicine and Public Health, Department of Family Medicine, which provided LW a stipend for her work on this project.

Presentations: Streamlining Assessment of Resident Physician Competency in Performing the Pelvic Examination. Presented at the Wisconsin Research and Education Network (WREN) Convocation, September 2014, Wisconsin Dells, WI.

Financial support: Dr Weyenberg was provided a stipend of $3,000 through the Summer Student Research and Clinical Assistantship program of the University of Wisconsin School of Medicine and Public Health, Department of Family Medicine.

References

- Accreditation Council for Graduate Medical Education. Family Medicine Overview. http://www.acgme.org/Specialties/Overview/pfcatid/8/Family-Medicine. Accessed September 1, 2017.

- Bibbins-Domingo K, Grossman DC, Curry SJ, et al; US Preventive Services Task Force. Screening for gynecologic conditions with pelvic examination. JAMA. 2017;317(9):947-953. https://doi.org/10.1001/jama.2017.0807

- Kleinman DE, Hage ML, Hoole AJ, Kowlowitz V. Pelvic examination instruction and experience: a comparison of laywoman-trained and physician-trained students. Acad Med. 1996;71(11):1239-1243. https://doi.org/10.1097/00001888-199611000-00021

- Carr SE, Carmody D. Outcomes of teaching medical students core skills for women’s health: the pelvic examination educational program. Am J Obstet Gynecol. 2004;190(5):1382-1387. https://doi.org/10.1016/j.ajog.2003.10.697

- Wånggren K, Fianu Jonassen A, Andersson S, Pettersson G, Gemzell-Danielsson K. Teaching pelvic examination technique using professional patients: a controlled study evaluating students’ skills. Acta Obstet Gynecol Scand. 2010;89(10):1298-1303. https://doi.org/10.3109/00016349.2010.501855

- Goldstein CE, Helenius I, Foldes C, McGinn T, Korenstein D. Internists training medical residents in pelvic examination: impact of an educational program. Teach Learn Med. 2005;17(3):274-278. https://doi.org/10.1207/s15328015tlm1703_13

- Watkins RS, Moran WP. Competency-based learning: the impact of targeted resident education and feedback on Pap smear adequacy rates. J Gen Intern Med. 2004;19(5 Pt 2):545-548. https://doi.org/10.1111/j.1525-1497.2004.30150.x

- Evensen A. Checklist for pelvic examination competency (Word document). Retrieved from The STFM Resource Library: https://resourcelibrary.stfm.org/viewdocument/checklist-for-pelvic-examination-co?CommunityKey=2751b51d-483f-45e2-81de-4faced0a290a. Accessed June 2, 2017.

- Braun MT, Oswald FL. Exploratory regression analysis: a tool for selecting models and determining predictor importance. Behav Res Methods. 2011;43(2):331-339. https://doi.org/10.3758/s13428-010-0046-8
