Introduction: There has been a recent transition from the use of “competencies” to “entrustable professional activities” (EPAs) in medical education assessment paradigms. Although this transition proceeds apace, few studies have examined these concepts in a practical context. Our study sought to examine how distinct the concepts of competencies and EPAs were to front-line clinical educators.

Methods: A 20-item survey tool was developed based on the University of Calgary Department of Family Medicine’s publicly available lists of competencies and EPAs. This tool required participants to identify given items as either a competency or an EPA, after reading a description of each. The tool was administered to a convenience sample of consenting clinical educators at 5 of the 14 training sites at the University of Toronto Department of Family and Community Medicine in 2018. We also collected information on years in practice, hours spent supervising per week, and direct involvement in medical education.

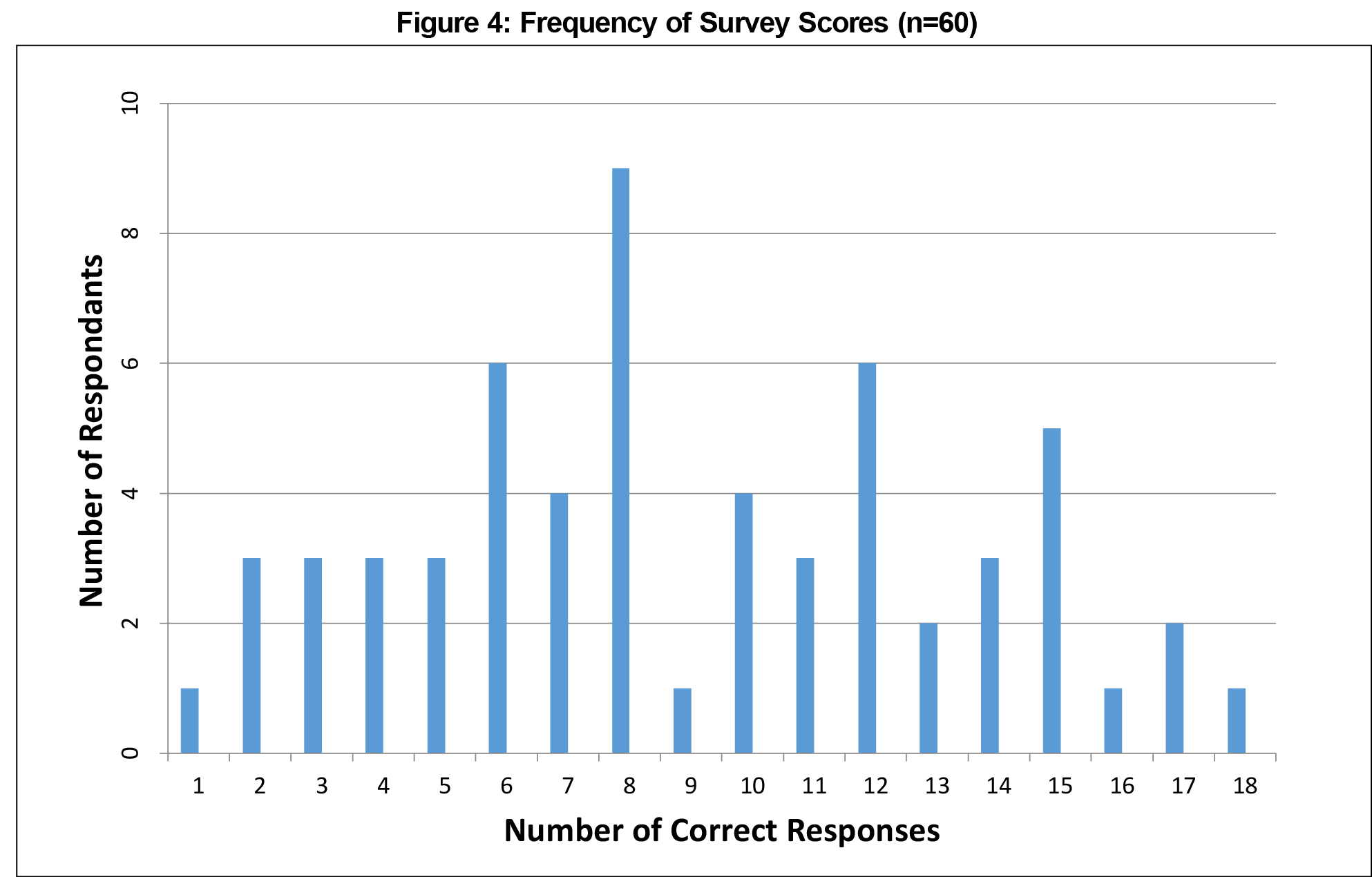

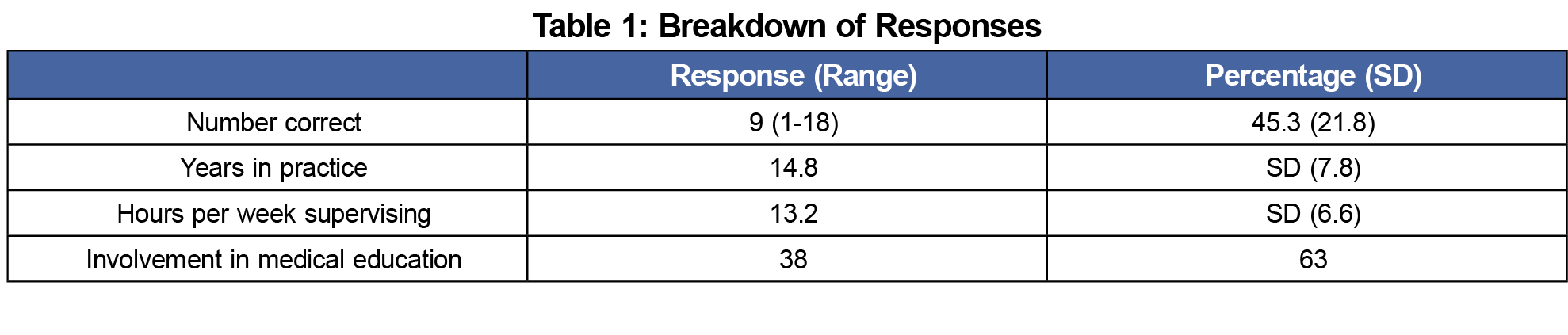

Results: We analyzed a total of 60 surveys. The mean rate of correct responses was 45.3% (SD 21.8%). Subgroup analysis did not reveal any correlation between the secondary characteristics and correct responses.

Conclusion: Clinical educators in our study were not able to distinguish between competencies and EPAs. Further research is recommended prior to intensive curricular changes.

Competency-based medical education (CBME) emerged out of a need to more rigorously evaluate trainees’ readiness to practice. Prior to the CBME era, completion of a prespecified duration of training typically led to licensure, and trainee competence was not assessed using explicit standards; instead, trainees were often assessed via an ambiguous gestalt of “readiness for practice.” There have always been tests as part of the licensure process (in Canada, jointly administered by the Medical Council of Canada through the Licentiate of the Medical Council of Canada [LMCC] and the Canadian College of Family Physicians [CCFP] examinations); however, these assess trainee performance on standardized examinations in simulated environments, rather than in real-world practice. The elements of the CCFP and LMCC examinations that are not written (objective structured clinical examinations [OSCEs], simulated office orals [SOOs], etc) represent a simulation of clinical practice. The elaboration of competencies (skills to be mastered in increasingly proficient ways throughout training) represented a significant step forward, allowing both teachers and learners to clearly articulate what was expected at each stage, and therefore to both teach and assess its acquisition more intentionally.1-3,7,8,11,12 Moreover, these could be, and almost exclusively are, observed in true clinical settings, rather than under test conditions. In that sense, competencies and the forms of assessment that aim to supplement or replace them represent a key parallel to formal licensure examinations. Whereas licensure examinations offer a template for standardization in the simulated setting, competencies provide a similar framework of standardized evaluation in a practice setting.

In recent years there has been increasing focus on a novel assessment approach using entrustable professional activities (EPAs).6 EPAs are defined as “professional activities that together constitute the mass of critical elements that operationally define a profession.” EPAs are not intended to replace competencies, but provide a means to integrate multiple competencies into units of work that are observable, measurable, and independently executed, rendering them ideal for trainee assessment.14 There is also indication that evaluation on the basis of EPAs allows teachers to safely and appropriately decrease direct trainee supervision, a common concern in shorter residency training programs.5

The increasing shift to EPAs has brought controversy. Concerns have been raised regarding the specific content of EPAs, as well as practical implementation processes.9,10 At our institution, clinical educators on the front lines seemed to have trouble distinguishing competencies from EPAs. Unfortunately, few studies have examined such real-world issues with the contrasting frameworks, which limits evidence-based decision-making. Given the effort involved in wholesale curricular change, we sought to inform our transition by formally exploring how practically distinguishable competencies and EPAs are in the minds of clinical teachers.

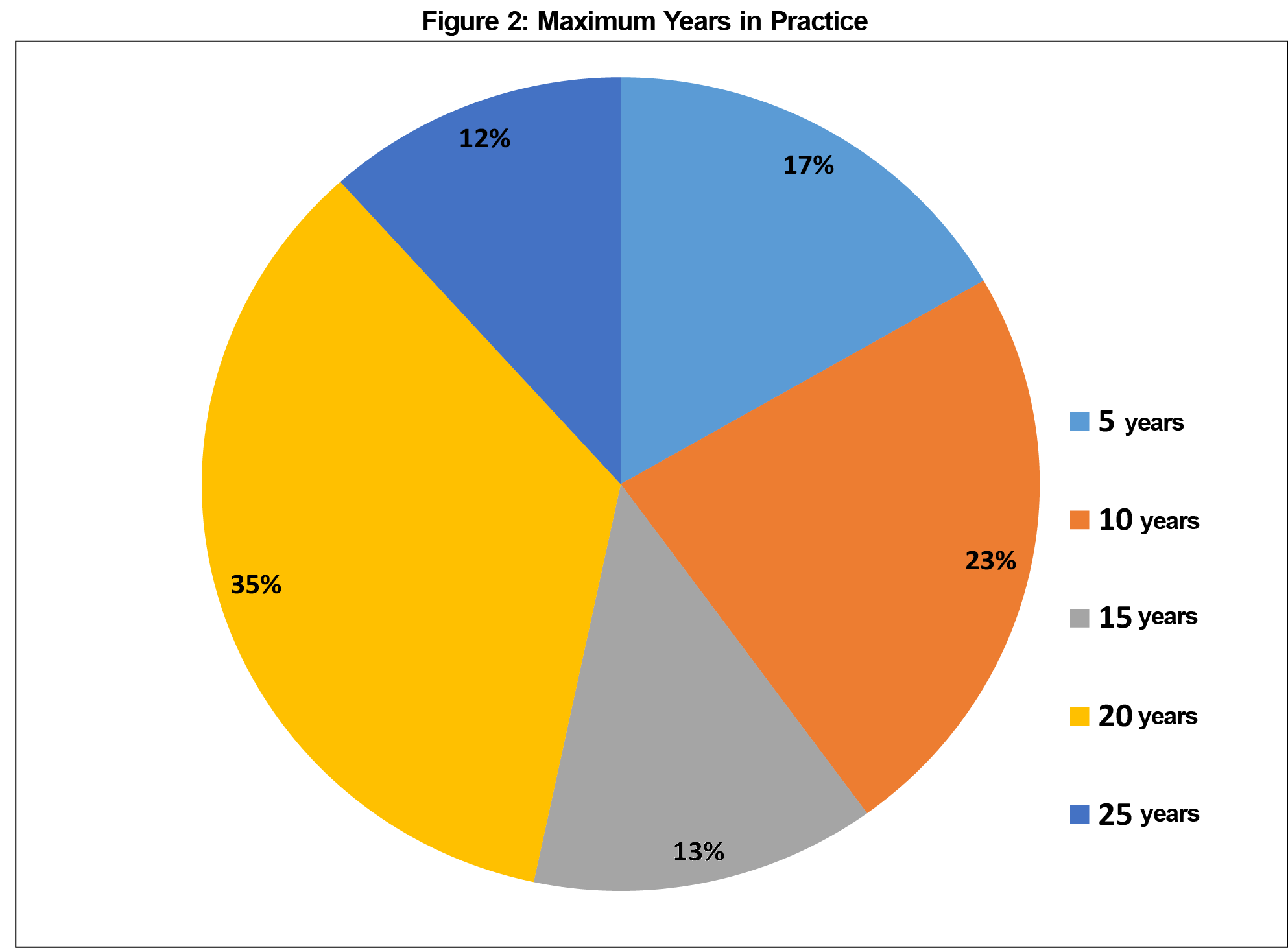

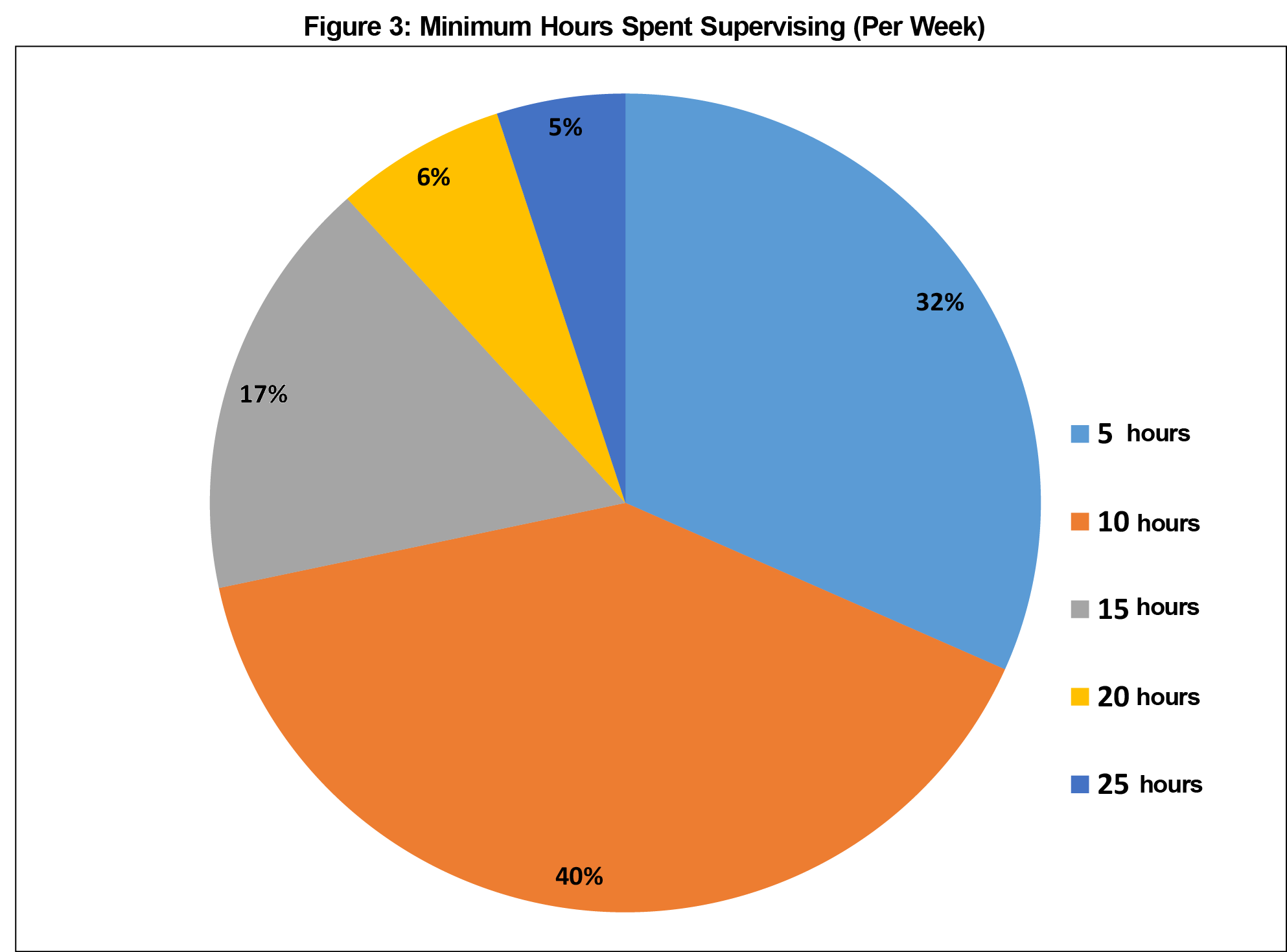

We created a 20-item survey tool (Appendix 1) based on the frameworks developed and shared online by the University of Calgary Department of Family Medicine.4 The tool provided basic definitions drawn from the literature, followed by a list of randomly ordered competencies and EPAs, and then asked participants to identify each item as either a competency or EPA. We consulted several local educational scholars to ensure the tool’s design was adequate. Basic demographic data, including length of time in practice (in 5-year intervals), number of hours spent supervising per week (in 5-hour intervals), and past/present involvement in an educational leadership or scholarship role were collected. Due to concerns regarding the potential identifiability of respondents, data on sex/gender, employment status (full/part time) and ethnicity were not collected. For that reason, length in practice and weekly supervision were collected as a range, rather than a specific number.

We recruited academic family physicians from five of the 14 teaching sites at the University of Toronto’s Department of Family and Community Medicine (Toronto Western Hospital, Mount Sinai Hospital, Women’s College Hospital, St Michael’s Hospital, and Credit Valley Hospital). This selection was felt to appropriately sample the variety of learning environments at our institution (eg, downtown vs suburban locales). These sites were chosen primarily on the basis of convenience. Because the survey tool was to be distributed at a staff meeting, practical considerations eliminated sites that either did not have a staff meeting scheduled within the study period or were unwilling to participate. All sites involved in this research train both medical students and family medicine residents of all levels. Faculty at these sites typically have their own practices and are involved in medical education. Although we did not have access to comprehensive demographic information for all 14 training sites, we believe that these sites are fairly representative of the department as a whole, as the level of experience, requirements for teaching/supervision, academic output, etc required for consideration of a faculty position are fairly standard across all University of Toronto training sites. All full/part-time faculty members were eligible for inclusion, although we did not collect data regarding employment status, as we believe that the number of hours spent supervising is a more accurate reflection of involvement in teaching. There were no formal exclusion criteria for participation.

We distributed the survey tool at faculty meetings to consenting educators over approximately 1 month. All responses were provided anonymously, and subsequently scored manually by two independent, blinded assessors. We discarded illegible or incomplete surveys and neither solicited nor considered any narrative comments. Once collated, we analyzed the data set using tests of statistical significance in Microsoft Excel and R software.

The University of Toronto Research Ethics Board approved this study (Protocol #35393).

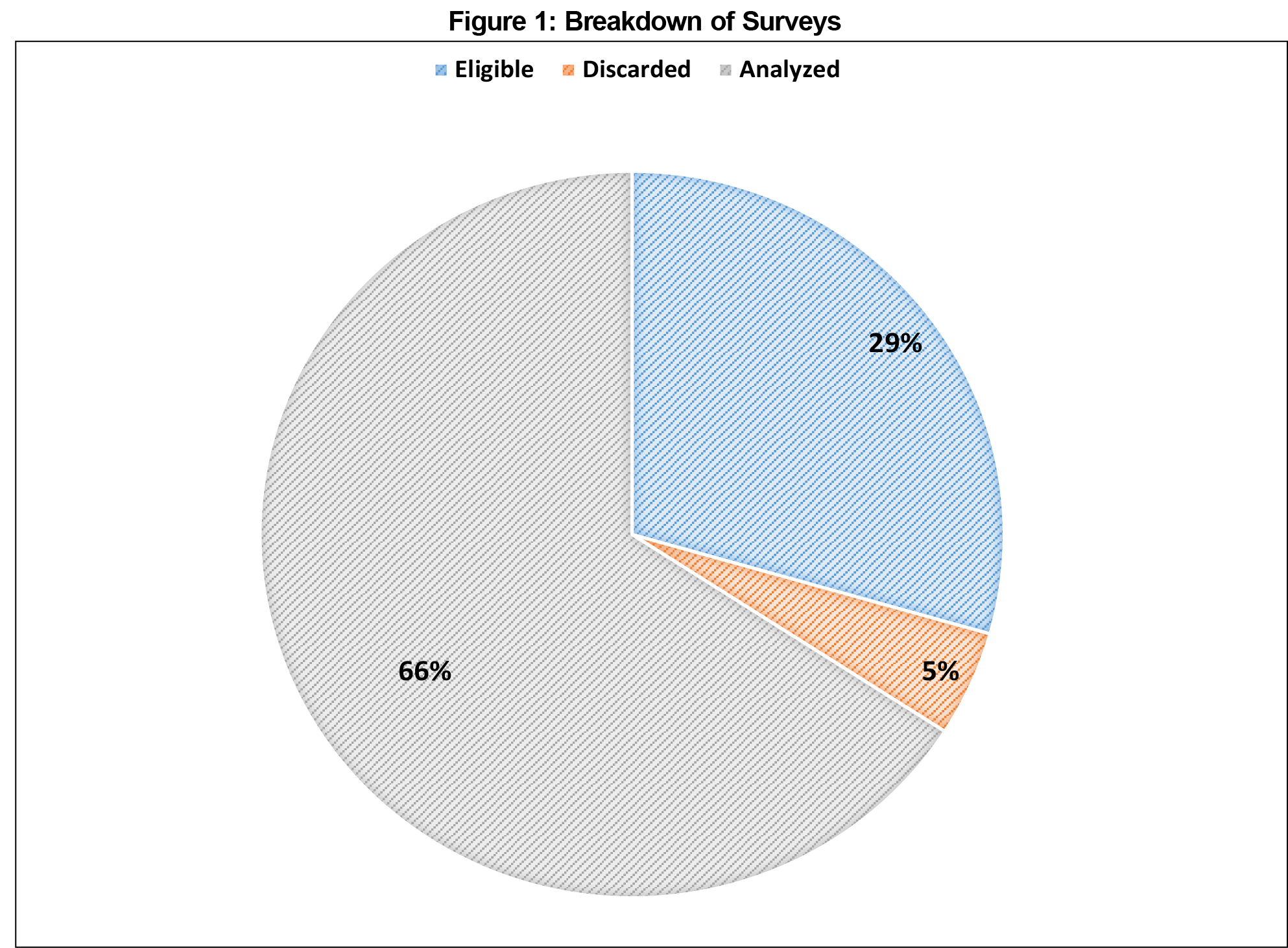

A total of 64 participants returned surveys within the timeframe allocated for collection (Figure 1). A total of 85 physicians were eligible to complete the surveys, representing a response rate of 75%. Four surveys were excluded from analysis due to being incomplete or illegible. There were no other exclusions. The average number of years in practice for participants was 14.8 (SD 7.0, Figure 2), with an average reported 13.2 hours per week spent supervising trainees (SD 6.6, Figure 3). Thirty-eight participants (63%) reported formal involvement in medical education. Averages for values reported as a range were calculated assuming the midpoint of the range for each respondent (ie, a response of 0-5 would count as a 2.5 for the purposes of determining the average for the entire sample).
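The midpoint convention described above can be illustrated with a short Python sketch. The range labels in `years_in_practice` are hypothetical and are not study data, and the helper name `range_midpoint` is introduced here only for illustration; the response-rate figures (64 of 85) are those reported in the text.

```python
from statistics import mean

def range_midpoint(label: str) -> float:
    """Return the midpoint of a range label such as '0-5'."""
    low, high = (float(part) for part in label.split("-"))
    return (low + high) / 2

# Hypothetical responses for illustration only (not study data).
years_in_practice = ["0-5", "10-15", "15-20", "10-15"]
midpoints = [range_midpoint(label) for label in years_in_practice]
print(midpoints)        # [2.5, 12.5, 17.5, 12.5]
print(mean(midpoints))  # 11.25

# Response rate from the figures reported in the text.
returned, eligible = 64, 85
response_rate = returned / eligible
print(f"{response_rate:.1%}")  # 75.3%
```

Using the midpoint treats every respondent in a range as sitting at its center, so the resulting averages and standard deviations are approximations rather than exact values.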

The mean number of correct responses to survey items was nine of 20 (range 1-18), translating to an overall score of 45.3% (SD 21.8%; Figure 4 and Table 1). The median and mode values were both eight. None of these values differed from the results expected by random chance at the 5% significance level.
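As a rough check on the comparison with chance, the following Python sketch works from the summary statistics reported above (60 surveys, mean 45.3%, SD 21.8%). It assumes that guessing on each of the 20 two-option items is equivalent to a fair coin flip, and it uses a one-sample t statistic against 50%; this is an illustrative assumption and not necessarily the test the authors ran. The critical value of about 2.00 for 59 degrees of freedom is likewise an approximation.

```python
from math import comb, sqrt

N_ITEMS = 20    # items on the survey tool
P_GUESS = 0.5   # assumed chance of a correct guess on a two-option item

def binom_pmf(k: int, n: int = N_ITEMS, p: float = P_GUESS) -> float:
    """Probability of exactly k correct answers out of n under pure guessing."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

# Expected score and spread for a single respondent who guesses every item.
expected_correct = N_ITEMS * P_GUESS                    # 10.0 items
sd_correct = sqrt(N_ITEMS * P_GUESS * (1 - P_GUESS))    # about 2.24 items

# One-sample t statistic from the reported summary statistics (assumed test).
n_surveys = 60
mean_pct, sd_pct = 45.3, 21.8
standard_error = sd_pct / sqrt(n_surveys)
t_stat = (mean_pct - 50.0) / standard_error
T_CRITICAL = 2.00  # approximate two-sided 5% critical value for 59 df

print(round(t_stat, 2))          # -1.67
print(abs(t_stat) < T_CRITICAL)  # True
```

Under these assumptions the observed mean falls within the range expected from guessing, which is consistent with the result stated in the text.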

Using a one-way analysis of variance, none of the other variables examined (years in practice, hours spent supervising each week, or formal educational involvement) was predictive of a statistically significant improvement in response accuracy.
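For readers unfamiliar with the technique, a one-way analysis of variance compares the variation in scores between subgroups with the variation within them. The Python sketch below computes the F statistic by hand; the function name `one_way_anova_f` and the three score groups are hypothetical and do not reproduce the study data or the authors' R code.

```python
from statistics import mean

def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of groups of scores."""
    all_scores = [score for group in groups for score in group]
    grand_mean = mean(all_scores)
    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual scores around their own group mean.
    ss_within = sum((score - mean(g)) ** 2 for g in groups for score in g)
    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Hypothetical numbers of correct responses, grouped by years in practice.
scores_by_group = [[8, 9, 10], [9, 10, 11], [7, 9, 11]]
f_stat, df_between, df_within = one_way_anova_f(scores_by_group)
print(f_stat, df_between, df_within)  # F is approximately 0.5 with 2 and 6 df
```

An F statistic near or below 1 indicates that the subgroup means differ no more than would be expected from the spread of scores within each subgroup.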

This study was adequately powered (calculated using the PowerUpR package for R). The power analysis used a frequentist, rather than Bayesian, approach and was designed to detect a difference of 20 percentage points from the expected result. The expected result was 50% correct, so the study was designed to capture a rate of discrimination of less than 30% or more than 70%. This threshold was not met in any subgroup analysis.

This study suggests that clinical educators are not able to reliably distinguish between competencies and EPAs. Furthermore, duration in practice, time spent directly supervising trainees, and formal involvement in medical education did not make them any more likely to do so.

There are several limitations to this study. As previously observed,13 the ways in which EPAs are specified may fall short of the original defining parameters put forth by ten Cate et al.15 They may fail to outline specific tasks to be entrusted, or list educational objectives rather than entrustable tasks. This would represent an error at the point of elaboration that could be addressed by a more rigorous development process.

Another possible issue is that our educators do not possess a sufficiently sophisticated understanding of competencies and EPAs. We are not convinced that this is a major explanatory factor, in part because teaching experience and involvement in medical education were not predictive of improved discernment. Moreover, if exhaustive professional development (ie, above and beyond a simple definitional explanation) is required for these concepts to be understood by clinical educators, their utility will be compromised in the fast-paced world of front-line clinical training.

As it currently stands, many training programs have undertaken (or will undertake) massive efforts to recast their assessment frameworks to bring them in line with the EPA movement. It behooves us all to consider whether we have sufficient evidence and scholarship on record to undertake such efforts.

Overall, our study demonstrates that clinical educators at the University of Toronto Department of Family and Community Medicine are unable to reliably distinguish between competencies and EPAs, raising concerns about the transition from the former to the latter assessment framework. Further research, particularly involving front-line educators, is needed prior to widespread adoption of EPAs.

Acknowledgments

The authors thank the clinical teachers at the University of Toronto who participated in this study by completing the survey.

References

1. Bleker OP, ten Cate J, Holdrinet RS. De algemene competenties van de medisch specialist in de toekomst [General competencies for the medical specialist in the future]. Tijdschr Med Onderwijs. 2004;23(1):4-14. Dutch. https://doi.org/10.1007/BF03056628

2. CanMEDS. CanMEDS 2000: Extract from the CanMEDS 2000 Project Societal Needs Working Group Report. Med Teach. 2000;22(6):549-554. https://doi.org/10.1080/01421590050175505

3. Carraccio C, Wolfsthal SD, Englander R, Ferentz K, Martin C. Shifting paradigms: from Flexner to competencies. Acad Med. 2002;77(5):361-367. https://doi.org/10.1097/00001888-200205000-00003

4. University of Calgary Cumming School of Medicine. Department of Family Medicine residency program entrustable professional activities (“EPA’s”). 2017. https://calgaryfamilymedicine.ca/residency/dox/container/Assessment-FM-EPA-List&Guidance-17-10-24.pdf. Accessed February 12, 2018.

5. Drolet BC, Brower JP, Miller BM. Trainee involvement in patient care: A necessity and reality in teaching hospitals. J Grad Med Educ. 2017;9(2):159-161. https://doi.org/10.4300/JGME-D-16-00232.1

6. El-Haddad C, Damodaran A, McNeil HP, Hu W. The ABCs of entrustable professional activities: an overview of ‘entrustable professional activities’ in medical education. Intern Med J. 2016;46(9):1006-1010. https://doi.org/10.1111/imj.12914

7. General Medical Council. Tomorrow’s doctors: outcomes and standards for undergraduate medical education. London, UK: General Medical Council; 2009.

8. Long DM. Competency-based residency training: the next advance in graduate medical education. Acad Med. 2000;75(12):1178-1183. https://doi.org/10.1097/00001888-200012000-00009

9. Klamen DL, Williams RG, Roberts N, Cianciolo AT. Competencies, milestones, and EPAs - Are those who ignore the past condemned to repeat it? Med Teach. 2016;38(9):904-910. https://doi.org/10.3109/0142159X.2015.1132831

10. Krupat E. Critical thoughts about the core entrustable professional activities in undergraduate medical education. Acad Med. 2018;93(3):371-376. https://doi.org/10.1097/ACM.0000000000001865

11. Pangaro L, ten Cate O. Frameworks for learner assessment in medicine: AMEE Guide No. 78. Med Teach. 2013;35(6):e1197-e1210. https://doi.org/10.3109/0142159X.2013.788789

12. Swing SR. The ACGME outcome project: retrospective and prospective. Med Teach. 2007;29(7):648-654. https://doi.org/10.1080/01421590701392903

13. Tekian A. Are all EPAs really EPAs? Med Teach. 2017;39(3):232-233.

14. ten Cate O. Nuts and bolts of entrustable professional activities. J Grad Med Educ. 2013;5(1):157-158. https://doi.org/10.4300/JGME-D-12-00380.1

15. ten Cate O, Chen HC, Hoff RG, Peters H, Bok H, van der Schaaf M. Curriculum development for the workplace using Entrustable Professional Activities (EPAs): AMEE Guide No. 99. Med Teach. 2015;37(11):983-1002. https://doi.org/10.3109/0142159X.2015.1060308
