Introduction: There is no established baseline for how frequently clinical researchers personally encounter manuscript rejection, making it difficult for faculty to put their own evolving experience in context. The purpose of this study was to determine the feasibility of obtaining personal acceptance per submission (APS) and acceptance per manuscript (APM) rates for individual faculty members.

Methods: We performed a cross-sectional pilot survey of clinical faculty members in two departments (family medicine and pediatrics) at one academic health center during the 2017-2018 academic year. The survey asked participants to report the number of submission attempts required for each journal article they had had accepted in the prior 2 years, as well as any submissions that had not led to publication.

Results: Sixty-eight of 136 eligible faculty (50%) completed the questionnaire. Academic clinicians in the sample eventually published 80% of the manuscripts submitted, with 39% of papers rejected per submission attempt. Associate professors had the highest APS (0.71) and APM (0.88).

Conclusions: In this pilot, we demonstrated the feasibility of retrospectively collecting data that could identify baseline manuscript acceptance rates, and we were able to generate department- and rank-specific averages for manuscript acceptance and rejection. We confirmed that rejection is common among academic clinicians. The APS and APM can be used by academic clinicians to track their own progress from day one of their publishing careers as a method of self-assessment, rather than having to wait for citations to accumulate.

Manuscript rejection is a common barrier to dissemination of scholarly output.1-4 Despite the awareness that many journals accept far fewer papers than they reject,5-8 there are no manuscript rejection rate baselines for clinical researchers, and academic clinicians often meet rejection with negative emotional responses including discouragement, disillusionment, alienation, and damaged egos.1,3,9 These predictable reactions to rejection can contribute to decreased professional satisfaction and abandonment of the manuscript, and may even dissuade the author from future manuscript preparation.1,3-5,9,10 Further, negative responses to rejection have led promising scholars to abandon careers in academic medicine.10

As many departments regard the number of articles published by faculty as a metric of success, it is an expectation for faculty to overcome the challenges of rejection. Despite this expectation, the metrics typically used by academic review committees only provide information about eventual success (ie, publications, citations),11 and accordingly, may fail to provide information that could be useful for tailored mentoring and self-assessment.12,13 For example, a common metric adopted by academic review committees is an author’s h-index, introduced by Jorge Hirsch to give a better estimate of the significance and impact of a scientist’s cumulative research contribution.11 As this index requires time for citations to accumulate, and can only increase over time, it provides no real-time feedback and does little to assess academic production for early-career researchers.12,13

Because there are no manuscript rejection rate norms for clinicians at different career stages, and individual metrics such as the h-index track only an author’s ultimate success in publication, little help is available for faculty to put their own evolving experience with rejection into context. However, if authors were to calculate their own personal acceptance rates per submission and eventual acceptance rates of their manuscripts, tracking these dynamic rates might provide meaningful context when compared to peers at various career stages. We wished to determine the feasibility and potential utility of calculating these metrics among primary care faculty at an academic health center.

Faculty deal with two types of rejection regarding manuscript submissions: the per-submission decision (ie, the editorial decision after an author submits a particular manuscript on a given attempt), and the eventual acceptance or rejection of a given paper (ie, whether that paper is eventually published somewhere). We propose the following metrics:

- Acceptance per submission (APS)=acceptances/submissions: the proportion of submissions to a unique journal that result in acceptance.

- An APS of 1 indicates that every manuscript submitted is accepted for publication by the first journal it was submitted to.

- Acceptance per manuscript (APM)=acceptances/manuscripts: the proportion of submitted manuscripts that are eventually accepted by a journal.

- An APM of 1 indicates that every manuscript is eventually accepted for publication.
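
As a minimal illustration of how the two metrics defined above could be computed from a personal submission log, the following Python sketch may be helpful. The `Manuscript` record, the `acceptance_rates` function, and the example portfolio are hypothetical and introduced only for illustration; they are not part of the study's tooling. The sketch assumes that each manuscript is accepted at most once and that a manuscript still awaiting a decision counts as not accepted.

```python
from dataclasses import dataclass


@dataclass
class Manuscript:
    title: str
    submissions: int  # number of journals the manuscript has been sent to so far
    accepted: bool    # True once any journal has accepted it


def acceptance_rates(manuscripts):
    """Return (APS, APM) for a list of Manuscript records."""
    total_submissions = sum(m.submissions for m in manuscripts)
    if not manuscripts or total_submissions == 0:
        raise ValueError("at least one submitted manuscript is required")
    acceptances = sum(1 for m in manuscripts if m.accepted)
    aps = acceptances / total_submissions  # acceptances per submission
    apm = acceptances / len(manuscripts)   # acceptances per manuscript
    return aps, apm


# Hypothetical portfolio (illustrative values, not study data)
portfolio = [
    Manuscript("Paper A", submissions=1, accepted=True),
    Manuscript("Paper B", submissions=3, accepted=True),
    Manuscript("Paper C", submissions=4, accepted=False),
    Manuscript("Paper D", submissions=2, accepted=True),
]
aps, apm = acceptance_rates(portfolio)
print(f"APS = {aps:.2f}, APM = {apm:.2f}")
```

In this hypothetical portfolio, three acceptances over ten submissions give an APS of 0.30, and three acceptances over four manuscripts give an APM of 0.75.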

The purpose of this study was to determine the feasibility of obtaining personal acceptance per submission (APS) and acceptance per manuscript (APM) for individual faculty members and whether they differ by academic title.

We developed a voluntary cross-sectional pilot survey to determine the feasibility of obtaining these novel metrics among clinical faculty in two departments (family medicine and pediatrics). We administered the survey in 2017 after the University of Minnesota Institutional Review Board determined that the study met the criteria for exemption from IRB review. Participants included departmental clinical faculty and did not include adjunct faculty or clinical professors.

Research staff created a list of publications for each individual faculty using Manifold, a web-accessible interface that generates reports of scholarly output for faculty in the University of Minnesota Medical School. Research staff downloaded article data from Scopus (www.scopus.com, a large abstract and citation database of peer-reviewed literature), to verify that the faculty were from our institution and to assess the type of article published.

We provided faculty members with an individualized link to an internet-based survey (Qualtrics, Provo, UT) that included demographics (gender identity, academic rank, professional designation, percent clinical time) and a list of their published manuscript titles listed in the Scopus database from the prior 2 years. For each listed article, faculty indicated the number of journals to which each manuscript was submitted prior to acceptance. We also asked participants to list any manuscripts from the last 2 years not indexed in Scopus and provide the number of journals those manuscripts were submitted to. Finally, participants were asked to report manuscripts that had been submitted in the last 2 years but not yet accepted by a journal, and to report the number of submissions for each.

A research staff member not involved in academic advancement decision making in either department (D.F.) linked the survey responses to the publication statistics available in Scopus. Only this research team member had access to the link to identify responders. Prior to data analysis, we reviewed the survey responses for outliers, duplicate records, and inconsistent responses. We included all responses where there were multiple authors within our institution because the goal was to calculate the APS and APM for each faculty member. Responses of “I have no idea” regarding the number of submissions before publication were replaced with the number of submissions reported by coauthors when those data were available. If that information was not available from the coauthors’ responses, the manuscript was removed from the calculation of APS and APM. Faculty who indicated “I have no idea” were included in a subanalysis to determine if author position or number of authors on the paper correlated with the author being unaware of manuscript submission decisions.
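
The handling of “I have no idea” responses described above could be sketched as follows. This is an illustrative Python sketch, not the procedure actually used in the study: the function name, the tuple layout, and the identifiers are hypothetical, and `None` stands in for an “I have no idea” response. Because the text does not say how disagreeing coauthor reports were reconciled, the sketch simply assumes the first known count for a manuscript is used.

```python
def resolve_submission_counts(responses):
    """Fill unknown submission counts from coauthor responses.

    responses: list of (faculty_id, manuscript_id, count), where count is
    None for an "I have no idea" response.
    Returns (resolved, dropped): resolved rows carry a usable count;
    dropped rows had no coauthor-reported count and are excluded.
    """
    # Assumption: the first known count reported for a manuscript is used.
    known = {}
    for _faculty, manuscript, count in responses:
        if count is not None:
            known.setdefault(manuscript, count)

    resolved, dropped = [], []
    for faculty, manuscript, count in responses:
        if count is None:
            count = known.get(manuscript)  # borrow a coauthor's report if any
        if count is None:
            dropped.append((faculty, manuscript))
        else:
            resolved.append((faculty, manuscript, count))
    return resolved, dropped


# Hypothetical responses (illustrative only)
responses = [
    ("f1", "m1", 2),
    ("f2", "m1", None),  # resolved from f1's report
    ("f2", "m2", None),  # no coauthor report, so dropped
    ("f3", "m3", 1),
]
resolved, dropped = resolve_submission_counts(responses)
print(resolved)
print(dropped)
```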

The data were then provided to authors A.S. and M.P. without direct identifiers for analysis. We excluded book chapters, notes, letters to the editor, editorials, newsletter publications, blog posts, and any other similar publications from the analysis. Individuals who had 0% clinical time and those who had not submitted any manuscripts in the 2 years prior to the survey were also excluded, as we were specifically focused on the experience of clinical faculty with regard to their manuscript acceptances.

We calculated the APS and APM for each faculty member, summarizing with descriptive statistics, assessing differences in demographics using t tests (continuous and ordinal variables) and Fisher exact tests (categorical variables).
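
The descriptive summary by academic rank could be sketched along the following lines. The per-faculty records are invented placeholder values, not study data, and `summarize_by_rank` is a hypothetical helper. The sketch covers only the descriptive step; the t tests and Fisher exact tests are omitted because they would require a dedicated statistics package.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical per-faculty records: (rank, APS, APM). Not study data.
faculty = [
    ("assistant", 0.50, 0.70),
    ("assistant", 0.60, 0.80),
    ("associate", 0.70, 0.90),
    ("associate", 0.80, 1.00),
    ("professor", 0.60, 0.80),
]


def summarize_by_rank(records):
    """Group faculty by rank and report n, mean APS, and mean APM."""
    groups = defaultdict(list)
    for rank, aps, apm in records:
        groups[rank].append((aps, apm))
    return {
        rank: {
            "n": len(values),
            "mean_aps": mean(v[0] for v in values),
            "mean_apm": mean(v[1] for v in values),
        }
        for rank, values in groups.items()
    }


summary = summarize_by_rank(faculty)
for rank, stats in summary.items():
    print(f"{rank}: n={stats['n']}, "
          f"APS={stats['mean_aps']:.2f}, APM={stats['mean_apm']:.2f}")
```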

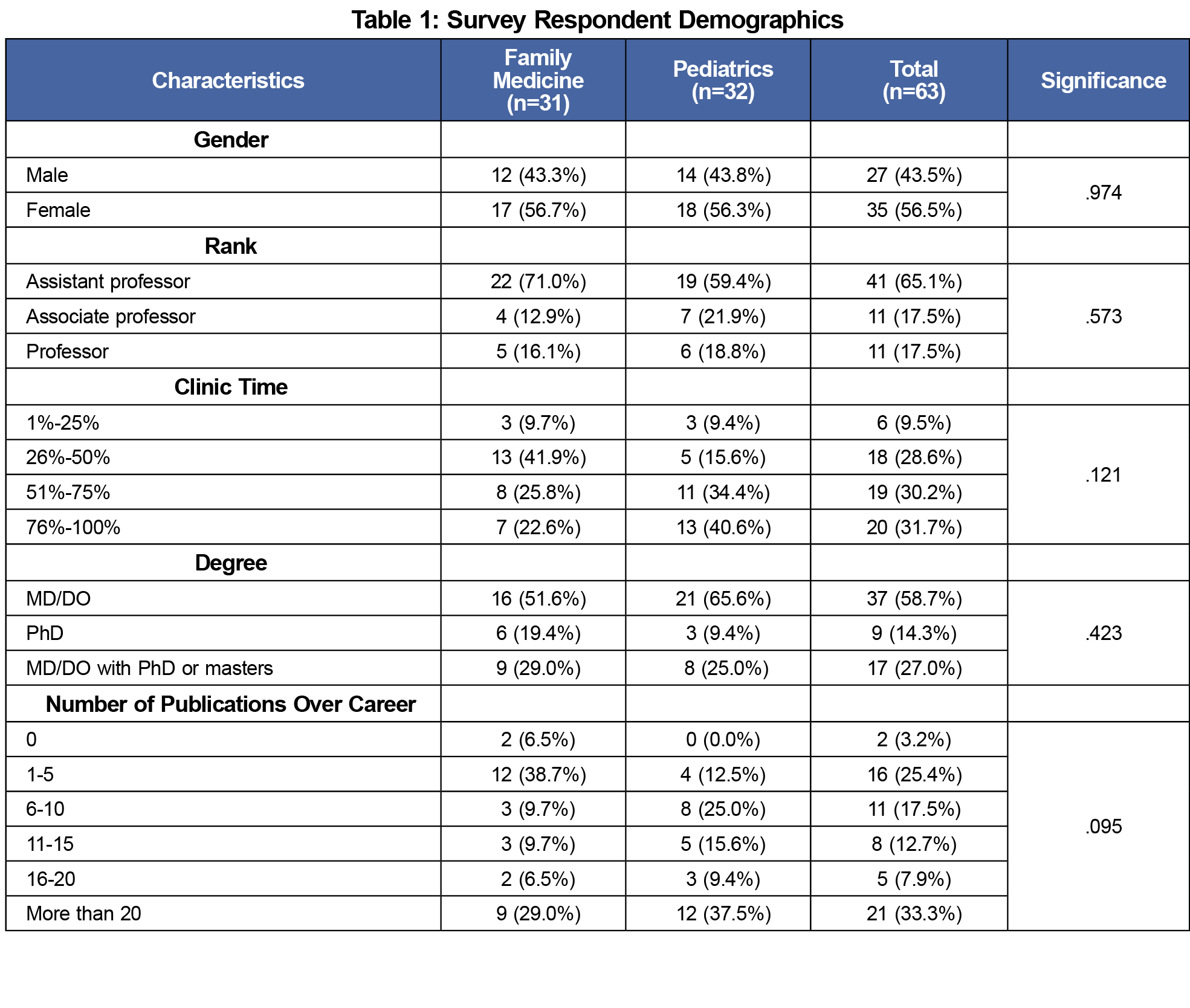

Sixty-eight of 136 eligible faculty (50%) completed the questionnaire, with respondents equally distributed by department. Sixty-three faculty members met inclusion criteria, with demographic breakdowns similar to the makeup of the departments (Table 1).

Table 2 summarizes the experience with rejection and acceptance. Academic clinicians in the sample eventually published 80% of the manuscripts submitted, yet manuscript rejection (1-APS) was common, with 39% of papers rejected per submission attempt. Associate professors had the highest APS (0.71) and APM (0.88). Associate professors also had the highest average number of submissions (11.3) and published manuscripts (9.6) in the 2-year period, followed by professors (9.1 submissions; 8.1 published manuscripts) and assistant professors (4.9 submissions; 3.8 published manuscripts). The differences were significant for both the average number of manuscript submissions and number of published manuscripts (P=.001). The APS and APM were similar among clinical psychologists with a PhD, physicians with additional graduate training (PhD or master’s degree), and physicians without additional training. Manuscripts that included both physicians and clinical psychologists had APS and APM similar to those of manuscripts with only physicians or only PhDs.

A total of 402 manuscripts were submitted; 214 (53.2%) were uploaded by study staff prior to survey distribution. Sixty-three (15.7%) published articles and 50 (12.4%) in-press articles were added by survey respondents. Seventy-five (18.7%) manuscripts that were submitted but not accepted by a journal to date were also added by survey respondents. Thirty-five (9%) of the manuscripts did not have a response by the faculty when asked “How many submissions did this manuscript have prior to acceptance?” and were removed from the analysis of APS and APM.
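
The breakdown above can be checked with a short calculation. The Python sketch below uses only the counts reported in this paragraph; the category labels and variable names are ours, chosen for illustration.

```python
# Manuscript counts as reported in the text, by how each entered the data set
counts = {
    "uploaded by study staff": 214,
    "published, added by respondents": 63,
    "in press, added by respondents": 50,
    "submitted but not yet accepted": 75,
}

total = sum(counts.values())
shares = {label: round(100 * n / total, 1) for label, n in counts.items()}

# Manuscripts with no reported submission count, removed from APS and APM
no_response = 35
no_response_share = round(100 * no_response / total, 1)

print(total)
for label, pct in shares.items():
    print(f"{label}: {pct}%")
print(f"no submission count reported: {no_response_share}%")
```

The four categories sum to the 402 manuscripts reported, and the 35 manuscripts without a submission count correspond to 8.7% of the total, which the text rounds to 9%.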

Subanalysis was done on the 13 faculty who answered “I have no idea” for the number of journal submissions on at least one of their manuscripts. In all, this subgroup submitted 93 manuscripts and did not know the number of journals to which their manuscripts were submitted 38% (n=35) of the time. When asked their author position on those manuscripts, faculty reported being a middle author or not knowing their author position for 80% (n=28). Having more than five authors on a manuscript was associated with a small, nonsignificant difference in whether the faculty knew the number of journals their manuscript was submitted to (63% vs 59%).

We proposed two novel metrics, the APS and APM, that may help academics track their progress from day one of their publishing careers, rather than having to wait for citations to accumulate. We generated department- and rank-specific averages for manuscript acceptance and rejection, and confirmed that rejection is common, yet surmountable among academic clinicians.

There is growing awareness that the overall health of the United States could benefit from increased primary care research, yet such research has been underdeveloped in part due to limited and concentrated federal funding.14-19 Despite these barriers, the percentage of academic faculty who have published manuscripts has increased, often as the result of publications of research presented at major conferences.20,21 The primary care research enterprise is positioned to build on that past work and capitalize on emerging opportunities through the contributions and initiatives of national organizations.22-24 Academic departments are vital to this process and best practices for departments that do this well include faculty who are supported by mentorship and resources.23,25,26 The manuscript metrics APS and APM provide data previously unavailable and have the potential to enhance discussions with the individual faculty member regarding their evolving experience with manuscript acceptance and rejection. By comparing these metrics to peers at various career stages, departments will be able to provide feedback, mentoring, and appropriate resources to clinical faculty. In contrast to previously used metrics that relied on manuscript publication numbers and citation counts, these dynamic metrics could enhance ongoing faculty development efforts aimed at increasing scholarly productivity and have the added benefit of destigmatizing rejection.

In this pilot, we analyzed two departments within a single academic center, which limits the generalizability of our APS and APM norms. Although we have presented benchmark data in this pilot, broader collection of these metrics would allow for the establishment of norms at various career stages within an academic center or medical specialty. Additionally, further qualitative research is warranted to determine how faculty decide where to submit their manuscripts and what specific actions and behaviors researchers use to overcome manuscript rejection. This is especially important considering that in our sample additional graduate training (PhD, MD/DO plus PhD or masters) did not affect the outcome of a manuscript, but academic rank did.

The retrospective nature of data reporting in this pilot is also a limitation, as evidenced by 13 of the 63 faculty answering “I have no idea” when asked the number of journals to which at least one of their manuscripts was submitted; these responses affected 9% of the manuscripts in our analysis. This gap in awareness was most evident in manuscripts where faculty were not the first or last author. Further, our data include responses from only half of the faculty who received the survey, and those who did not respond may have had different experiences with their manuscript acceptances. Collecting data prospectively rather than retrospectively is one method that could decrease the response bias inherent in our methods. We are working to create a platform to enable personal tracking of manuscript fates at the time of submission. We have already demonstrated that such prospective reporting is feasible on a web-based platform, as part of work we have done to bring gamification to academic pursuits where participants log their submissions and acceptances in real time.27,28 Even before institutional infrastructure is in place, however, individuals can begin tracking their manuscript submissions and calculate their personal manuscript acceptance rates as a method of self-assessment.

Acknowledgments

The authors acknowledge the efforts of the research services staff at the University of Minnesota Department of Family Medicine and Community Health. They also praise the dedication and grace of the late Ellen Dodds who was instrumental in moving this project from a concept into reality.

Presentations:

“Personal Rejection Rate: A Novel Metric for Self-Assessment in Scholarship” included portions of the material in this manuscript and was presented by Andrew H. Slattengren, DO, at the Minnesota Academy of Family Physicians Innovation and Research Forum on March 3, 2018 in Bloomington, Minnesota.

“Personal Manuscript Acceptance Rates—Novel Metrics for Self-Assessment in Scholarship” included portions of the material in this manuscript and was presented by Andrew H. Slattengren, DO at the Society of Teachers of Family Medicine Annual Spring Conference on April 28, 2019 in Toronto, Canada.

“Normalizing Rejection via Personal Manuscript Acceptance Rates—Novel Metrics for Self-Assessment in Scholarship” included portions of the material in this manuscript and was presented by Michael B. Pitt, MD at the Pediatric Academic Societies Annual Meeting on April 30, 2019 in Baltimore, Maryland.

References

- Woolley KL, Barron JP. Handling manuscript rejection: insights from evidence and experience. Chest. 2009;135(2):573-577. https://doi.org/10.1378/chest.08-2007

- Campanario JM, Acedo E. Rejecting highly cited papers: the views of scientists who encounter resistance to their discoveries from other scientists. J Am Soc Inf Sci Technol. 2007;58(5):734-743. https://doi.org/10.1002/asi.20556

- Day NE. The silent majority: manuscript rejection and its impact on scholars. Acad Manag Learn Educ. 2011;10(4):704-718. https://doi.org/10.5465/amle.2010.0027

- Venketasubramanian N, Hennerici MG. How to handle a rejection. Teaching course presentation at the 21st European Stroke Conference, Lisboa, May 2012. Cerebrovasc Dis. 2013;35(3):209-212. https://doi.org/10.1159/000347106

- Ray J, Berkwits M, Davidoff F. The fate of manuscripts rejected by a general medical journal. Am J Med. 2000;109(2):131-135. https://doi.org/10.1016/S0002-9343(00)00450-2

- Green R, Del Mar C. The fate of papers rejected by Australian Family Physician. Aust Fam Physician. 2006;35(8):655-656. http://www.ncbi.nlm.nih.gov/pubmed/16915616. Accessed June 7, 2018.

- Earnshaw CH, Edwin C, Bhat J, et al. An analysis of the fate of 917 manuscripts rejected from Clinical Otolaryngology. Clin Otolaryngol. 2017;42(3):709-714. https://doi.org/10.1111/coa.12820

- Hall SA, Wilcox AJ. The fate of epidemiologic manuscripts: a study of papers submitted to epidemiology. Epidemiology. 2007;18(2):262-265. https://doi.org/10.1097/01.ede.0000254668.63378.32

- Whitman N, Eyre S. The pattern of publishing previously rejected articles in selected journals. Fam Med. 1985;17(1):26-28.

- DeCastro R, Sambuco D, Ubel PA, Stewart A, Jagsi R. Batting 300 is good: perspectives of faculty researchers and their mentors on rejection, resilience, and persistence in academic medical careers. Acad Med. 2013;88(4):497-504. https://doi.org/10.1097/ACM.0b013e318285f3c0

- Hirsch JE. An index to quantify an individual’s scientific research output. Proc Natl Acad Sci USA. 2005;102(46):16569-16572. https://doi.org/10.1073/pnas.0507655102

- Carpenter CR, Cone DC, Sarli CC. Using publication metrics to highlight academic productivity and research impact. Acad Emerg Med. 2014;21(10):1160-1172. https://doi.org/10.1111/acem.12482

- Kelly CD, Jennions MD. H-index: age and sex make it unreliable. Nature. 2007;449(7161):403. https://doi.org/10.1038/449403c

- Bowman MA, Lucan SC, Rosenthal TC, Mainous AG III, James PA. Family Medicine Research in the United States From the late 1960s Into the Future. Fam Med. 2017;49(4):289-295.

- Cameron BJ, Bazemore AW, Morley CP. Federal Research Funding for Family Medicine: Highly Concentrated, with Decreasing New Investigator Awards. J Am Board Fam Med. 2016;29(5):531-532. https://doi.org/10.3122/jabfm.2016.05.160076

- Cameron BJ, Bazemore AW, Morley CP. Lost in translation: NIH funding for family medicine research remains limited. J Am Board Fam Med. 2016;29(5):528-530. https://doi.org/10.3122/jabfm.2016.05.160063

- Morley CP, Cameron BJ, Bazemore AW. The impact of administrative academic units (AAU) grants on the family medicine research enterprise in the United States. Fam Med. 2016;48(6):452-458.

- Lucan SC, Bazemore AW, Xierali I, Phillips RL Jr, Petterson SM, Teevan B. Greater NIH investment in family medicine would help both achieve their missions. Am Fam Physician. 2010;81(6):704.

- Lucan SC, Barg FK, Bazemore AW, Phillips RL Jr. Family medicine, the NIH, and the medical-research roadmap: perspectives from inside the NIH. Fam Med. 2009;41(3):188-196.

- Post RE, Weese TJ, Mainous AG III, Weiss BD. Publication productivity by family medicine faculty: 1999 to 2009. Fam Med. 2012;44(5):312-317.

- Post RE, Mainous AG III, O’Hare KE, King DE, Maffei MS. Publication of research presented at STFM and NAPCRG conferences. Ann Fam Med. 2013;11(3):258-261. https://doi.org/10.1370/afm.1503

- deGruy FV III, Ewigman B, DeVoe JE, et al. A plan for useful and timely family medicine and primary care research. Fam Med. 2015;47(8):636-642.

- Ewigman B, Davis A, Vansaghi T, et al. Building research & scholarship capacity in departments of family medicine: a new joint ADFM-NAPCRG initiative. Ann Fam Med. 2016;14(1):82-83. https://doi.org/10.1370/afm.1901

- Hester CM, Jiang V, Bartlett-Esquilant G, et al. Supporting family medicine research capacity: the critical role and current contributions of US family medicine organizations. Fam Med. 2019;51(2):120-128. https://doi.org/10.22454/FamMed.2019.318583

- Liaw W, Petterson S, Jiang V, et al. The scholarly output of faculty in family medicine departments. Fam Med. 2019;51(2):103-111. https://doi.org/10.22454/FamMed.2019.536135

- Liaw W, Eden A, Coffman M, Nagaraj M, Bazemore A. Factors associated with successful research departments: a qualitative analysis of family medicine research bright spots. Fam Med. 2019;51(2):87-102. https://doi.org/10.22454/FamMed.2018.652014

- Pitt MB, Furnival RA, Zhang L, Weber-Main AM, Raymond NC, Jacob AK. Positive peer-pressured productivity (P-QUAD): novel use of increased transparency and a weighted lottery to increase a division’s academic output. Acad Pediatr. 2017;17(2):218-221. https://doi.org/10.1016/j.acap.2016.10.004

- Borman-Shoap EC, Zhang L, Pitt MB. Longitudinal experience with a transparent weighted lottery system to incentivize resident scholarship. J Grad Med Educ. 2018;10(4):455-458. https://doi.org/10.4300/JGME-D-18-00036.1
