Background and Objectives: Social distancing and quarantine requirements imposed during the COVID-19 pandemic necessitated remote training in many learning situations that formerly focused on traditional in-person training. In this context, we developed an adaptive approach to teaching laceration repair remotely while allowing for synchronous instruction and feedback.

Methods: In April 2020, 35 family medicine residents from four programs in the Midwest United States participated in a real-time, remotely delivered, 2-hour virtual workshop on suture techniques for laceration repair. Paired-samples t tests compared learner self-confidence scores obtained on pre- and posttests. We interpreted short-answer responses with a mixed-methods analysis. Residents submitted photos and videos of suture techniques and received formative feedback based on a predefined rubric.

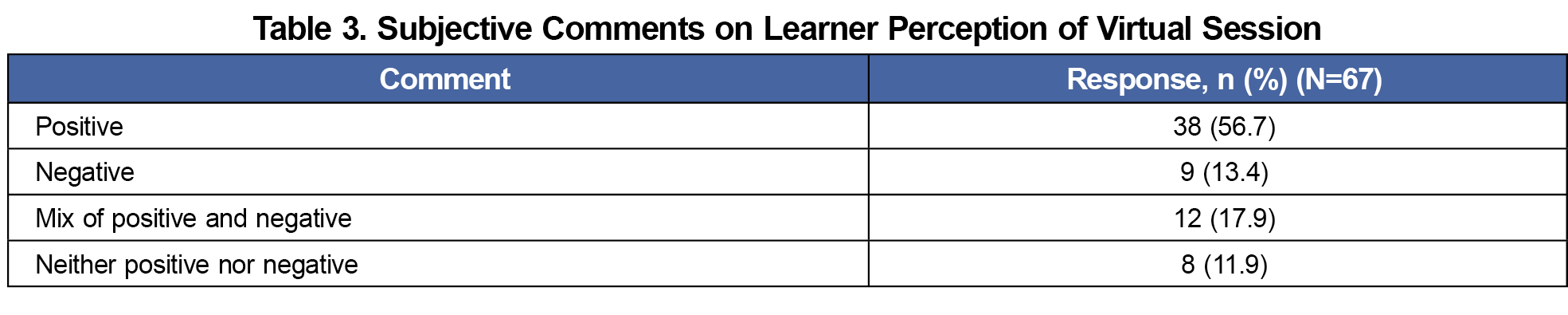

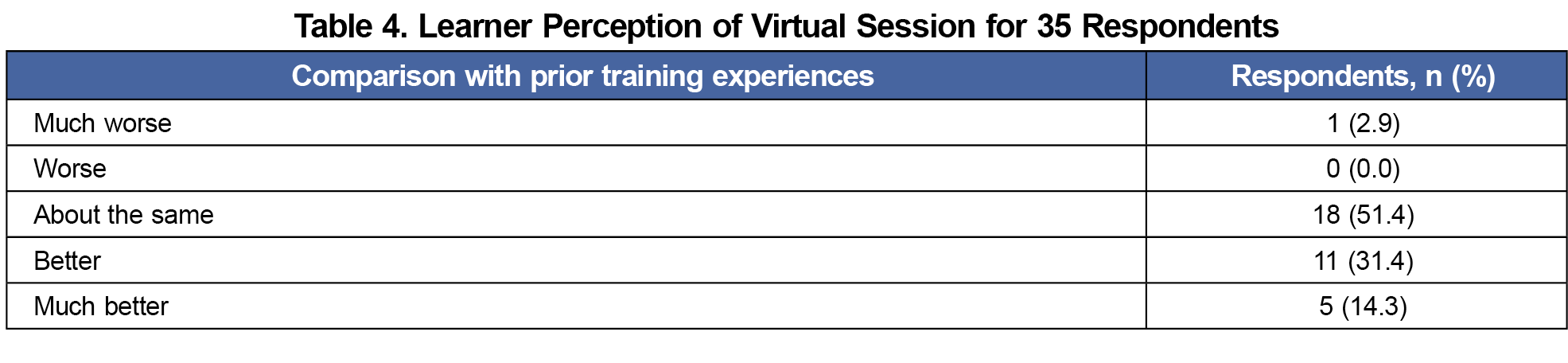

Results: All residents completed the pre- and posttests. The posttest scores for self-confidence across the participants showed significant improvement for all suture techniques. Of the 67 short-answer responses, 38 responses (56.7%) were positive; 9 (13.4%) negative; 8 (11.9%) neutral; and 12 (17.9%) a combination of positive and negative. The workshop was rated by 34 residents (97.1%) as either “about the same as prior training experiences,” “better than prior training experiences,” or “much better than prior training experiences.”

Conclusion: Learners reported that a remotely delivered, real-time, synchronous suture technique workshop was a valuable experience. Further research is needed to establish the efficacy of this platform to promote procedural competence.

COVID-19 fostered shifts in training of resident physicians. During the initial outbreak, social distancing and isolation requirements by hospitals and the Centers for Disease Control and Prevention (CDC) limited in-person gatherings. This forced training institutions to abandon traditional in-person teaching methods. Asynchronous online didactics have been considered comparable to in-person teaching, though traditional procedural training involves direct supervision.1 Procedural self-study and blended virtual courses with faculty facilitation have been described as methods for learning procedures remotely.2 Remote simulation teaching of procedural skills has shown efficacy and favorability in surgical residencies.3 Synchronous remote teaching of procedural skills for primary care has not been widely reported.4,5

There are no widely adopted best practices for virtual learning in family medicine. In a review of 24 articles about health science e-learning, Regmi and Jones identified enablers and barriers to teaching cognitive content and procedural skills.6 Videorecording of suture and knot-tying skills has been used to remotely and asynchronously assess performance and provide feedback.7 Teaching surgical skills with internet-based video for obstetric interns has been demonstrated as an effective and well received method.8 While studies of e-learning have shown live sessions to be more highly rated than static prerecorded sessions, essential components and techniques for teaching procedural skills with virtual remote technology have not been identified.4,9,10

Our primary objective was to assess how learners would compare remote procedural skills training with traditional, in-person instruction. We focused on laceration repair as an essential primary care skill. Because of social distancing recommendations from the CDC, we were unable to include a comparison group.11

Setting and Participants

A total of 35 resident physicians from 4 family medicine programs in the Midwest United States participated in a 2-hour workshop designed for synchronous remote instruction of laceration repair techniques. The Mayo Clinic Institutional Review Board deemed the study exempt from approval.12

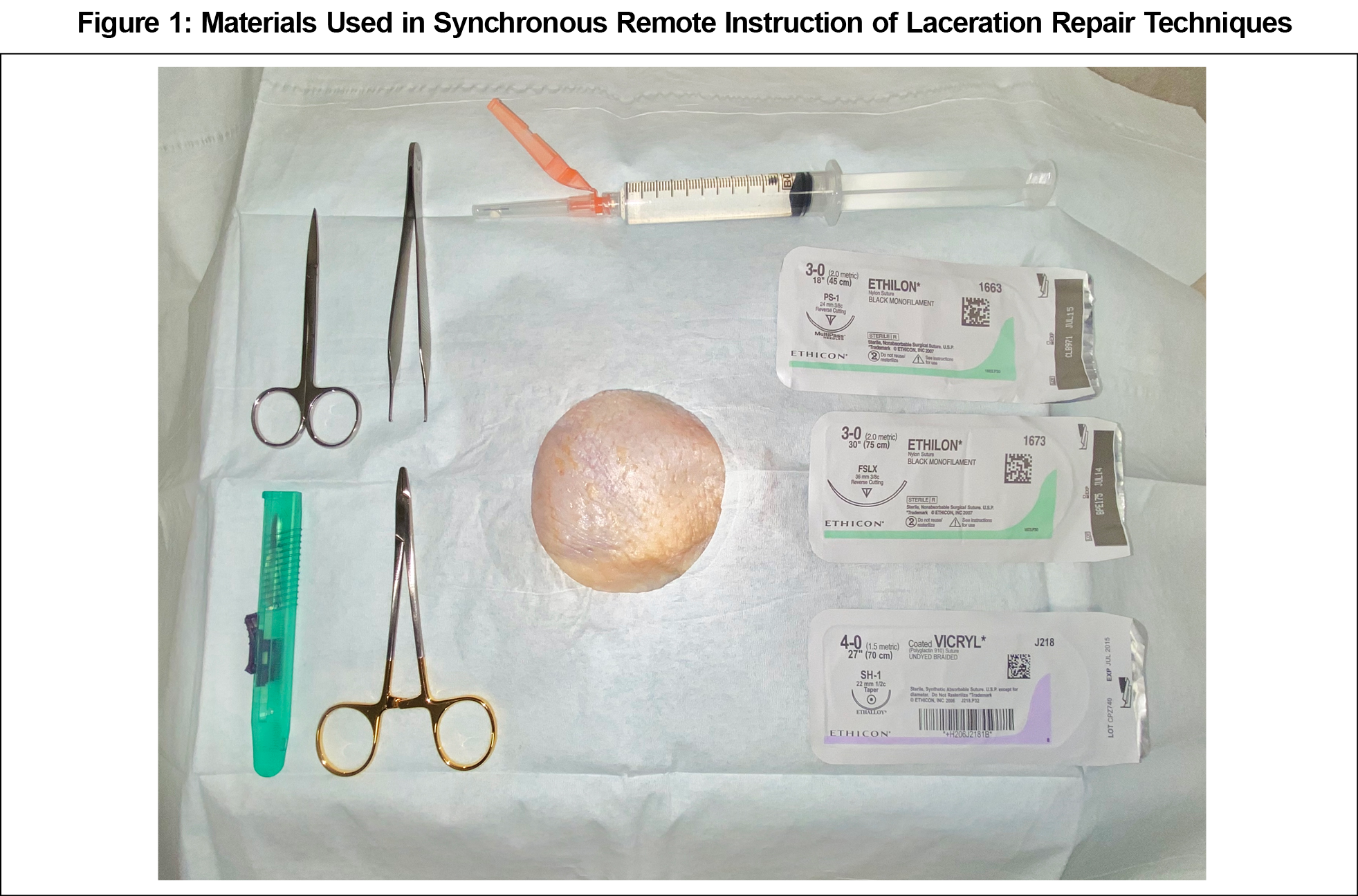

Participants prepared by reviewing articles about laceration repair and wound care.13,14 Participants completed a pretest that assessed cognitive understanding of key points. They also rated self-confidence regarding knowledge of indications and performance of techniques for laceration repair on a 10-point scale. An uncooked chicken model was chosen for reasonable fidelity, availability, and low cost (Figure 1).15 Faculty at each site were responsible for providing supplies and ensuring video was set up appropriately.

The online session was delivered via Zoom. A faculty physician presented critical learning points interspersed with explanations and demonstrations of instrument handling for five suturing techniques (simple interrupted, vertical mattress, horizontal mattress, subcuticular, and corner suture), including instrument ties. Another faculty member assisted the presenter to ensure video quality and smooth screen sharing. The live stream was captured with a tablet computer camera positioned horizontally on a platform above the model.

Participants set up practice stations remotely. They practiced each technique along with the demonstration and had the opportunity to ask questions in real time. Residents were instructed to provide a photo or video of their work for review. Faculty provided formative feedback to the residents using a grading rubric that was created for this activity.16 Rated characteristics included edge approximation, pattern, symmetry, spacing, suture tension, and adequacy of ties. If a video was submitted, handling of tissue and instruments and execution of suture patterns were also evaluated.

The session concluded with the posttest, which included participant feedback. Learners were asked to rate the overall learning experience on a 10-point scale. They compared the workshop with previous laceration repair educational experiences using a 6-point rating scale with anchor descriptions. Learners had the opportunity to provide reasons for their answers to each question.

Analyses

Methods to assess the value of the workshop included comparison of pretest/posttest responses, feedback from participants, and analysis of submitted images. Pre/posttest scores and self-confidence ratings were compared using paired-samples t tests assuming unequal variance. We interpreted short-answer responses with a mixed-methods analysis.
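As a rough illustration of the pre/post comparison described above, the following sketch computes a paired-samples t statistic from per-learner score differences. It is a minimal example, not the analysis code used in this study: the function name `paired_t_statistic` and the five score pairs are hypothetical, and a P value would still need to be obtained from a t distribution with the returned degrees of freedom.

```python
import math
import statistics


def paired_t_statistic(pre, post):
    """Return (t, degrees_of_freedom) for a paired-samples t test.

    The test is computed on within-learner differences (post minus pre).
    """
    if len(pre) != len(post):
        raise ValueError("pre and post must contain the same number of scores")
    if len(pre) < 2:
        raise ValueError("at least two score pairs are required")

    diffs = [after - before for before, after in zip(pre, post)]
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    if sd_diff == 0:
        raise ValueError("all differences are identical; t is undefined")

    n = len(diffs)
    standard_error = sd_diff / math.sqrt(n)
    return mean_diff / standard_error, n - 1


# Hypothetical scores for five learners (NOT data from this study).
pre_scores = [8, 9, 10, 7, 11]
post_scores = [10, 11, 11, 10, 12]

t_stat, dof = paired_t_statistic(pre_scores, post_scores)
print(f"t = {t_stat:.2f}, df = {dof}")
```

Because each learner serves as his or her own control, the calculation depends only on the spread of the differences rather than on the separate spreads of the pretest and posttest scores.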

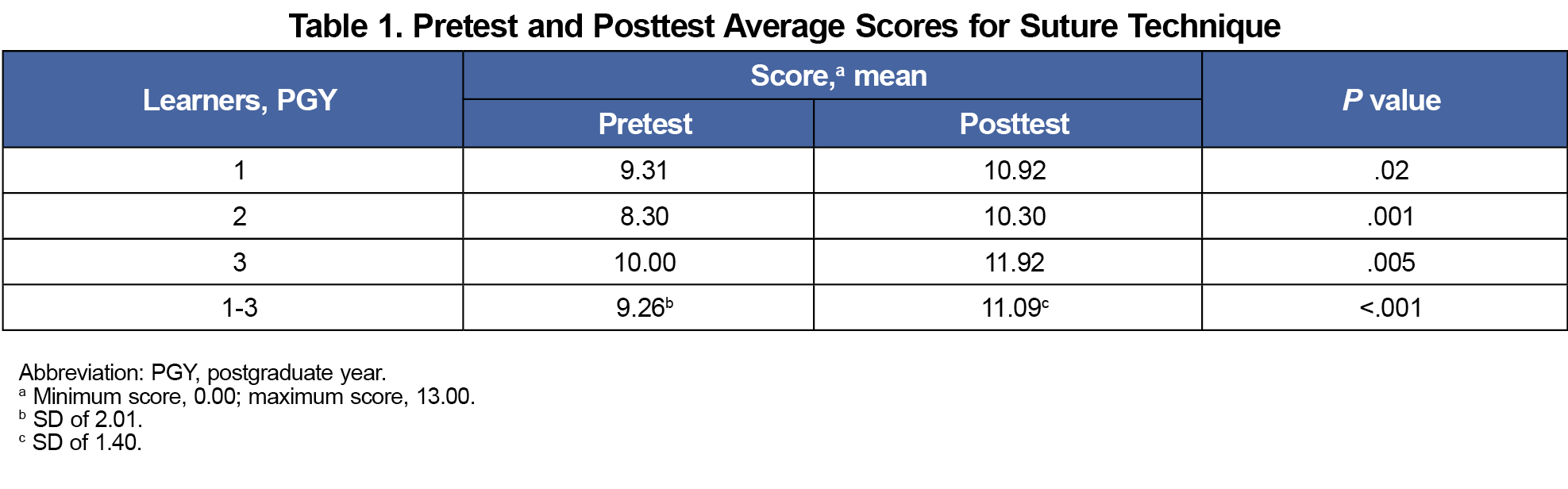

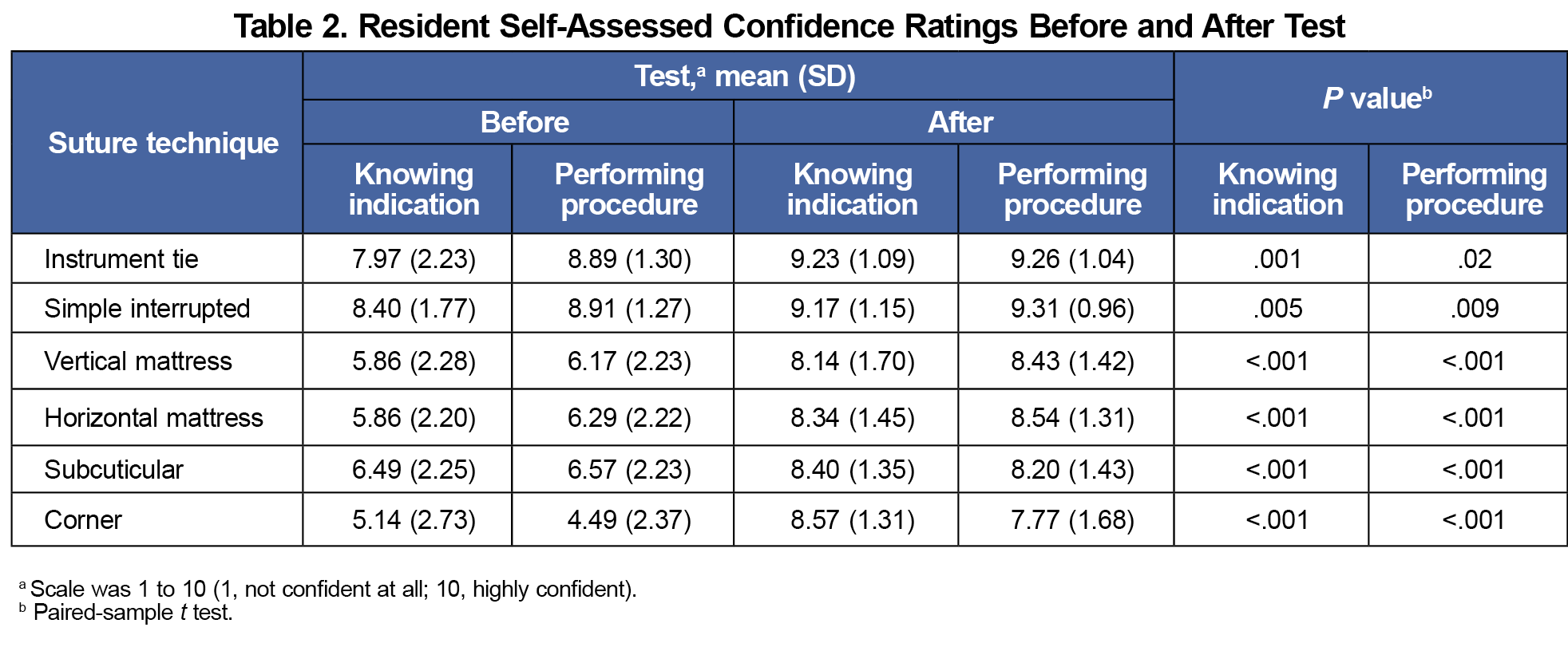

Thirteen postgraduate year (PGY)-1, 10 PGY-2, and 12 PGY-3 family medicine residents completed both the pretest and posttest. Posttest score for all (mean [SD], 11.09 [1.40]) was significantly higher than pretest score (mean [SD], 9.26 [2.01]; P<.001; Table 1). Pre/post self-confidence ratings showed statistically significant improvement for every question on posttest (Table 2).

We completed a mixed-methods analysis to assess the learners’ perception of the workshop. We categorized comments as positive, negative, neutral, or a combination. Of the 67 responses, 38 (56.7%) were positive; 9 (13.4%) negative; 8 (11.9%) neutral; and 12 (17.9%) a combination (Table 3). When comparing the session with previous education, 5 of 35 learners (14.3%) rated it “much better than prior training experiences”; 11 (31.4%) rated it “better than prior”; 18 (51.4%) rated it “about the same as prior”; no learner rated it “worse than prior”; and 1 of 35 (2.9%) rated it “much worse than prior” (Table 4). Overall satisfaction with the course was higher for PGY-2 (80.0%) and PGY-3 (61.9%) participants than for PGY-1 (34.6%), but differences between classes were not statistically significant.
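The percentages reported above follow directly from the category counts. The short sketch below reproduces that arithmetic using the counts stated in the text; the helper name `percent_breakdown` and the dictionary labels are illustrative choices, and only the five comparison categories listed in the text are included.

```python
def percent_breakdown(counts):
    """Return each category's share of the total as a percentage (1 decimal)."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no responses to tabulate")
    return {label: round(100 * n / total, 1) for label, n in counts.items()}


# Counts as reported in the text (67 short-answer responses, 35 learners).
comment_counts = {"positive": 38, "negative": 9, "neutral": 8, "combination": 12}
rating_counts = {
    "much better": 5,
    "better": 11,
    "about the same": 18,
    "worse": 0,
    "much worse": 1,
}

comment_pct = percent_breakdown(comment_counts)
rating_pct = percent_breakdown(rating_counts)

# Learners who rated the workshop about the same as or better than prior training.
same_or_better = sum(
    rating_counts[label] for label in ("much better", "better", "about the same")
)
same_or_better_pct = round(100 * same_or_better / sum(rating_counts.values()), 1)

print(comment_pct)
print(rating_pct)
print(same_or_better, same_or_better_pct)
```

The combined "about the same or better" figure matches the 34 residents (97.1%) reported in the abstract.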

Limitations of the video platform prevented faculty from observing all the learners in real time, so we asked participants to upload photos or videos for evaluation and feedback. Fifteen learners submitted media for review. Using the grading rubric we developed to assess learner skill, a faculty member provided individualized formative feedback to learners based on their observed strengths and deficiencies. The rubric evaluated learners on individual suture skills such as instrument handling, respect for tissue, and characteristics of the sutures themselves.16 Satisfactory performance on all domains earned a maximum of 24 points. The average score was 95%. The media were stored on a secure server with access limited to investigators.

The main objective of this study was to evaluate learner perception of remote procedural training. We did not use an objective measure of learning performance, but instead relied on learner self-assessment. It is widely reported that self-assessments often fail to accurately reflect true learning.17,18 Due to the social distancing requirement, we were unable to have a comparison group for study. We attempted to overcome these limitations by asking learners to directly compare their experience with remote training to their experiences of prior in-person training. Additionally, qualitative evaluation of free-text comments provided insight into learner attitudes regarding remote training. While we were able to gather learner performance data following the training, we cannot draw conclusions about how learners would have performed in this training compared with different circumstances.

Now that social distancing guidelines have been relaxed, further investigation into remote procedure training should include direct comparison between remote vs in-person methods. Although the grading rubric appeared useful for providing formative feedback, this study was not designed to provide validity evidence for this tool.19 We suggest further investigation into the utility and validity of this rubric, which could be promising as a tool for formative assessment of laceration repair.

COVID-19 social distancing guidelines promoted development of instruction techniques that will engage, challenge, and increase competency for resident physicians. Research on virtual procedural training demonstrates the potential for this to be an effective teaching method. Most residents reported this workshop was a valuable, positive experience. Our results support use of virtual procedural education to augment skill for laceration repair in primary care.

Acknowledgments

Scientific publications staff at Mayo Clinic provided proofreading, administrative, and clerical support.

Disclaimer

Mayo Clinic does not endorse specific products included in this article.

References

- McCutcheon K, Lohan M, Traynor M, Martin D. A systematic review evaluating the impact of online or blended learning vs. face-to-face learning of clinical skills in undergraduate nurse education. J Adv Nurs. 2015;71(2):255-270. doi:10.1111/jan.12509

- Deffenbacher B, Langner S, Khodaee M. Are self-study procedural teaching methods effective? A pilot study of a family medicine residency program. Fam Med. 2017;49(10):789-795.

- Dickson EA, Jones KI, Lund JN. Skype™: a platform for remote, interactive skills instruction. Med Educ. 2016;50(11):1151-1152. doi:10.1111/medu.13200

- Maertens H, Madani A, Landry T, Vermassen F, Van Herzeele I, Aggarwal R. Systematic review of e-learning for surgical training. Br J Surg. 2016;103(11):1428-1437. doi:10.1002/bjs.10236

- Ikeyama T, Shimizu N, Ohta K. Low-cost and ready-to-go remote-facilitated simulation-based learning. Simul Healthc. 2012;7(1):35-39. doi:10.1097/SIH.0b013e31822eacae

- Regmi K, Jones L. A systematic review of the factors - enablers and barriers - affecting e-learning in health sciences education. BMC Med Educ. 2020;20(1):91. doi:10.1186/s12909-020-02007-6

- Hu Y, Tiemann D, Michael Brunt L. Video self-assessment of basic suturing and knot tying skills by novice trainees. J Surg Educ. 2013;70(2):279-283. doi:10.1016/j.jsurg.2012.10.003

- Autry AM, Knight S, Lester F, et al. Teaching surgical skills using video internet communication in a resource-limited setting. Obstet Gynecol. 2013;122(1):127-131. doi:10.1097/AOG.0b013e3182964b8c

- Williamson JA, Farrell R, Skowron C, et al. Evaluation of a method to assess digitally recorded surgical skills of novice veterinary students. Vet Surg. 2018;47(3):378-384. doi:10.1111/vsu.12772

- McLeod RS, MacRae HM, McKenzie ME, Victor JC, Brasel KJ; Evidence Based Reviews in Surgery Steering Committee. A moderated journal club is more effective than an Internet journal club in teaching critical appraisal skills: results of a multicenter randomized controlled trial. J Am Coll Surg. 2010;211(6):769-776. doi:10.1016/j.jamcollsurg.2010.08.016

- COVID-19 and Your Health. Centers for Disease Control and Prevention. Published June 11, 2021. Accessed October 13, 2021. https://www.cdc.gov/coronavirus/2019-ncov/prevent-getting-sick/social-distancing.html

- Exemptions (2018 Requirements). HHS.gov. Published March 8, 2021. Accessed October 13, 2021. https://www.hhs.gov/ohrp/regulations-and-policy/regulations/45-cfr-46/common-rule-subpart-a-46104/index.html

- Forsch RT, Little SH, Williams C. Laceration repair: a practical approach. Am Fam Physician. 2017;95(10):628-636.

- Worster B, Zawora MQ, Hsieh C. Common questions about wound care. Am Fam Physician. 2015;91(2):86-92.

- Denadai R, Saad-Hossne R, Martinhão Souto LR. Simulation-based cutaneous surgical-skill training on a chicken-skin bench model in a medical undergraduate program. Indian J Dermatol. 2013;58(3):200-207. doi:10.4103/0019-5154.110829

- Cowan C, Stacey S. Laceration repair assessment rubric. 2023. STFM Resource Library. https://resourcelibrary.stfm.org/viewdocument/laceration-repair-assessment-rubric

- Colthart I, Bagnall G, Evans A, et al. The effectiveness of self-assessment on the identification of learner needs, learner activity, and impact on clinical practice: BEME Guide no. 10. Med Teach. 2008;30(2):124-145. doi:10.1080/01421590701881699

- Gabbard T, Romanelli F. The accuracy of health professions students’ self-assessments compared to objective measures of competence. Am J Pharm Educ. 2021;85(4):8405. doi:10.5688/ajpe8405

- Cook DA, Zendejas B, Hamstra SJ, Hatala R, Brydges R. What counts as validity evidence? Examples and prevalence in a systematic review of simulation-based assessment. Adv Health Sci Educ Theory Pract. 2014;19(2):233-250. doi:10.1007/s10459-013-9458-4
