There are multiple guidelines from publishers and organizations on the use of artificial intelligence (AI) in publishing.1-5 However, none are specific to family medicine. Most journals have some basic AI use recommendations for authors, but more explicit direction is needed, as not all AI tools are the same.

As family medicine journal editors, we want to provide a unified statement about AI in academic publishing for authors, editors, publishers, and peer reviewers based on our current understanding of the field. The technology is advancing rapidly. While text generated from early large language models (LLMs) was relatively easy to identify, text generated from newer versions is getting progressively better at imitating human language and more challenging to detect. Our goal is to develop a unified framework for managing AI in family medicine journals. As this is a rapidly evolving environment, we acknowledge that any such framework will need to continue to evolve. However, we also feel it is important to provide some guidance for where we are today.

Artificial intelligence (AI) is a broad field where computers perform tasks that have historically been thought to require human intelligence. LLMs are a recent breakthrough in AI that allow computers to generate text that seems like it comes from a human. LLMs deal with language generation, while the broader term “generative AI” can also include AI-generated images or figures. ChatGPT is one of the earliest and widely used LLM models, but other companies have developed similar products. LLMs “learn” to do a multifaceted analysis of word sequences in a massive text training database and generate new sequences of words using a complex probability model. The model has a random component, so responses to the exact same prompt submitted multiple times will not be identical. LLMs can generate text that looks like a medical journal article in response to a prompt, but the article’s content may or may not be accurate. LLMs may “confabulate” generating convincing text that includes false information.6-8 LLMs do not search the internet for answers to questions. However, they have been paired with search engines in increasingly sophisticated ways. For the rest of this editorial, we will use the broad term “AI” synonymously with LLMs.

Role of Large Language Models in Academic Writing and Research

As LLM tools are updated and authors and researchers become familiar with them, they will undoubtedly become more functional in assisting the research and writing process by improving efficiency and consistency. However, current research on the best use of these tools in publication is still lacking. A systematic review exploring the role of ChatGPT in literature searches found that most articles on the topic are commentaries, blog posts, and editorials, with little peer-reviewed research.9 Some studies have demonstrated benefit in narrowing the scope of literature review when AI tools were applied to large data sets of studies and prompted to evaluate them for inclusion based on the title and abstract. Another paper reported that AI had 70% accuracy in appropriately identifying relevant studies compared to human researchers and may reduce time and provide a less subjective approach to literature review.10-12 When used to assist with writing background sections, LLMs’ writing was rated the same if not better than human researchers, but the citations were consistently false in another study.13 LLM models are frequently deficient in providing “real” papers and correctly matching authors to their own papers when generating citations and therefore are at risk of creating fictious citations that appear convincing despite incorrect information including DOI numbers.6,14

Studies evaluating the perceptions of AI use in academic journals and evaluating the strengths and weaknesses of the tools revealed no agreement on how to report the use of AI tools.15 There are many tools; for example, some are used to improve grammar, and others generate content, yet parameters on substantive use versus nonsubstantive use are lacking. Furthermore, current AI detection tools cannot adequately distinguish use types.15 Reported benefits include reducing workload and the ability to summarize data efficiently, whereas weaknesses include variable accuracy, plagiarism, and deficient application of evidence-based medicine standards.7,16

Guidelines on appropriate AI use exist, such as the “Living Guidelines on the Responsible Use of Generative AI in Research” produced by the European Commission.17 These guidelines include steps for researchers, organizations, and funders. The fundamental principles for researchers are to maintain ultimate responsibility for content; apply AI tools transparently; ensure careful evaluation of privacy, intellectual property, and applicable legislation; continuously learn how best to use AI tools; and refrain from using tools on activities that directly affect other researchers and groups.17 While these are helpful starting points, family medicine publishers can collaborate on best practices for using AI tools and help define substantive reportable use while acknowledging the current limitations of various tools and understanding that they will continue to evolve. Family medicine journals do not have unique AI needs as compared to other journals, but the effort of all the editors to jointly present principles related to AI is a unique model.

Guidance for Use of LLMs/AI in Family Medicine Publications

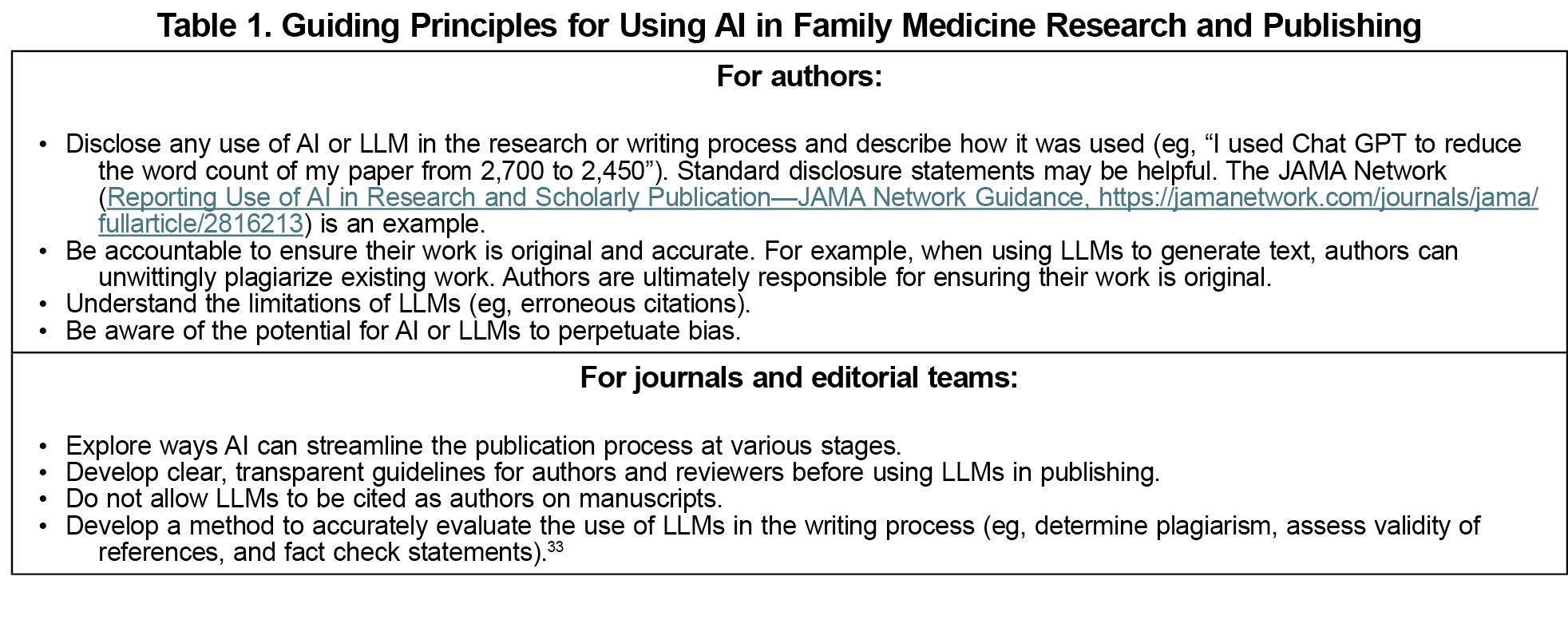

The core principles of scientific publishing will remain essentially unchanged by AI. For example, the criteria for authorship will remain the same. Authors will still be required to be active participants in conceptualizing and producing scientific work; writers and editors of manuscripts will be held accountable for the product (Table 1).

Authors must still cite others’ work appropriately when creating their current scientific research. Citing works will likely change over time as AI use in publishing matures. It is impossible to accurately list all sources used to train a given AI product. However, it would be possible to cite where a fact came from or who originated a particular idea. Similarly, authors will still need to ensure that their final draft is sufficiently original that they have not inadvertently plagiarized others’ works.1,18 Authors must be well versed in the existing literature of a given field.

Since LLMs model text generation on a training data set, there is an inherent concern that they will discover biased arguments and then repeat them, thereby compounding bias.19 Because LLMs mimic human-created content and there is a preponderance of biased, sexist, racist, and other discriminatory content on the internet, this is a significant risk.20 Some companies now work in the LLM/AI space to eliminate biases from these models, but they are in their infancy. Equality AI, for example, is developing “responsible AI to solve healthcare’s most challenging problems: inequity, bias and unfairness.”21 More investment is necessary to further remove bias from LLM/AI models. While authors have touted AI and LLMs as bias elimination tools, the fact that the results of bias elimination tools are not reproducible with any consistency has scholars questioning their utility. Successful deployment of an unbiased LLM/AI tool will depend on carefully examining and revising existing algorithms and the data used to train them.22 Excellent, unbiased algorithms have not been developed but might be in the future.23 AI tools can be used as a de facto editorial assistant which may help globalize the publication process by helping non-native English speakers publish in English-language journals.

The use of LLMs and broader AI tools is expanding rapidly. There are opportunities at all levels of research, writing, and publishing to use AI to enhance our work. A key goal for all family medicine journals is to require authors to identify the use of LLMs and assure that the LLMs used provide highly accurate information and mitigate the frequency of confabulation. Research is ongoing to develop methods to determine the accuracy of LLMs output.24 Editors and publishers must continue to advocate for accurate tools to validate the work of LLMs. Researchers should assess the performance of tools that are used in the writing process. For example, they should study the extent to which LLMs plagiarize, provide false citations, or generate false statements. They should also study tools that detect these events.

AI tools are already being used by some publishers and editors to do initial screens of manuscripts and to match potential reviewers with submitted papers. The complex interplay between AI tools and humans is evolving.25 While AI will likely not replace human researchers, authors, reviewers or editors, it continues to contribute to the publication process in myriad ways. We want to know more: “How can LLMs contribute to the publication process?” “Can authors ask LLMs to do literature searches or draft a paper?” “Can we train AI to contribute to a revision of a or review a paper?” Probably yes, but we must scrutinize any AI-generated references and we likely cannot train AI to evaluate conclusions or determine impact of a specific paper in the field. Family medicine journals are publishing important papers on AI —not only about its use in research and publishing but also about its use in clinical practice26-32 and this editorial is a call for more scholarship in this area.

Acknowledgments

The authors acknowledge Dan Parente, Steven Lin, Winston Liaw, Renee Crichlow, Octavia Amaechi, Brandi White, and Sam Grammer for their helpful suggestions.

Publication Note: This article is being published simultaneously in Family Medicine, JABFM, AFP, Annals of Family Medicine, FPIN, Canadian Family Physician, PRiMER, Family Medicine and Community Health, FP Essentials, and FPM.

References

-

Zielinski C, Winker MA, Aggarwal R, et al. Chatbots, generative AI, and scholarly manuscripts: WAME recommendations on chatbots and generative artificial intelligence in relation to scholarly publications. World Association of Medical Editors. January 20, 2023. Revised May 31, 2023. Accessed October 3, 2024.

https://wame.org/page3.php?id=106 doi:10.3889/oamjms.2023.11723

-

Recommendations for the conduct, reporting, editing, and publication of scholarly work in medical journals. International Committee of Medical Journal Editors. Updated January 2024. Accessed October 3, 2024.

https://www.icmje.org/icmje-recommendations.pdf

-

Adams L, Fontaine E, Lin S, Crowell T, Chung VCH, Gonzalez AA, eds.

Artificial Intelligence in Health, Health Care and Biomedical Science: An AI Code of Conduct Framework Principles and Commitments Discussion Draft. NAM Perspectives; April 8, 2024,

doi:10.31478/202403a

-

-

-

Haider J, Soderstron KR, Ekstrom B, Rodl M. GPT-fabricated scientific papers on Google Scholar: key features, spread, and implications for preempting evidence manipulation.

Harvard Misinformation Review. 2024;5(5).

doi:10.37016/mr-2020-156

-

Ramoni D, Sgura C, Liberale L, Montecucco F, Ioannidis JPA, Carbone F. Artificial intelligence in scientific medical writing: legitimate and deceptive uses and ethical concerns.

Eur J Intern Med. 2024;127:31-35.

doi:10.1016/j.ejim.2024.07.012

-

-

-

Zimmermann R, Staab M, Nasseri M, Brandtner P. Leveraging large language models for literature review tasks - a case study using chatgpt. In: Guarda T, Portela F, Diaz-Nafria JM, eds.

Advanced Research in Technologies, Information, Innovation and Sustainability. ARTIIS 2023. Communications in Computer and Information Science. Vol 1935. Springer; 2024,

doi:10.1007/978-3-031-48858-0_25

-

Dennstädt F, Zink J, Putora PM, Hastings J, Cihoric N. Title and abstract screening for literature reviews using large language models: an exploratory study in the biomedical domain.

Syst Rev. 2024;13(1):158.

doi:10.1186/s13643-024-02575-4

-

-

Huespe IA, Echeverri J, Khalid A, et al. Clinical research with large language models generated writing-clinical research with AI-assisted writing (CRAW) Study.

Crit Care Explor. 2023;5(10):e0975.

doi:10.1097/CCE.0000000000000975

-

Byun C, Vasicek P, Seppi K. This reference does not exist: an exploration of LLM citation accuracy and relevance. In: Proceedings of the Third Workshop on Bridging Human--Computer Interaction and Natural Language Processing. Association for Computational Linguistics; 2024:28–39.

-

Chemaya N, Martin D. Perceptions and detection of AI use in manuscript preparation for academic journals.

PLoS One. 2024;19(7):e0304807.

doi:10.1371/journal.pone.0304807

-

-

-

Kaebnick GE, Magnus DC, Kao A, et al. Editors’ statement on the responsible use of generative AI technologies in scholarly journal publishing.

Med Health Care Philos. 2023;26(4):499-503.

doi:10.1007/s11019-023-10176-6

-

Saguil A. Chatbots and large language models in family medicine. Am Fam Physician. 2024;109(6):501-502.

-

Andrew A. Potential applications and implications of large language models in primary care.

Fam Med Community Health. 2024;12(suppl 1):e002602.

doi:10.1136/fmch-2023-002602

-

-

-

-

Elkhatat AM, Elsaid K, Almeer S. Evaluating the efficacy of AI content detection tools in differentiating between human and AI-generated text.

Int J Educ Integr. 2023;19(1):17.

doi:10.1007/s40979-023-00140-5

-

Ranjbari D, Abbasgholizadeh Rahimi S. Implications of conscious AI in primary healthcare.

Fam Med Community Health. 2024;12(suppl 1):e002625.

doi:10.1136/fmch-2023-002625

-

Parente DJ. Generative artificial intelligence and large language models in primary care medical education. [published August 8, 2024].

Fam Med. 2024;56(9):534-540.

doi:10.22454/FamMed.2024.775525

-

Waldren SE. The promise and pitfalls of AI in primary care. Fam Pract Manag. 2024;31(2):27-31.

-

Hanna K, Chartash D, Liaw W, et al. Family medicine must prepare for artificial intelligence.

J Am Board Fam Med. 2024;37(4):520-524.

doi:10.3122/jabfm.2023.230360R1

-

Hanna K. exploring the applications of chatgpt in family medicine education: five innovative ways for faculty integration.

PRiMER Peer-Rev Rep Med Educ Res. 2023;7:26.

doi:10.22454/PRiMER.2023.985351

-

Kueper JK, Terry AL, Zwarenstein M, Lizotte DJ. Artificial intelligence and primary care research: a scoping review.

Ann Fam Med. 2020;18(3):250-258.

doi:10.1370/afm.2518

-

Hake J, Crowley M, Coy A, et al. Quality, accuracy, and bias in ChatGPT-based summarization of medical abstracts.

Ann Fam Med. 2024;22(2):113-120.

doi:10.1370/afm.3075

-

Kueper JK, Emu M, Banbury M, et al. Artificial intelligence for family medicine research in Canada: current state and future directions: Report of the CFPC AI Working Group.

Can Fam Physician. 2024;70(3):161-168.

doi:10.46747/cfp.7003161

- Sheng E, Chang K-W, Natarajan P, Peng N. The woman worked as a babysitter: on biases in language generation. In: Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP). Association for Computational Linguistics; 2019: 3407–3412. doi:10.18653/v1/D19-1339

There are no comments for this article.