Background and Objectives: Family medicine residency programs accredited by the Accreditation Council for Graduate Medical Education and the American Osteopathic Association typically require their residents to take the American Board of Family Medicine’s In-Training Examination (ITE) and the American College of Osteopathic Family Physicians’ In-Service Examination (ISE). With implementation of the single accreditation system (SAS), is it necessary to administer both examinations? This pilot study assessed whether the degree of similarity for the construct of family medicine knowledge and clinical decision making as measured by both exams is high enough to be considered equivalent and analyzed resident ability distribution on both exams.

Methods: A repeated measures design was used to determine how similar and how different the rankings of PGY-3s were with regard to their knowledge of family medicine as measured by the ISE and ITE. Eighteen third-year osteopathic residents participated in the analysis, and the response rate was 100%.

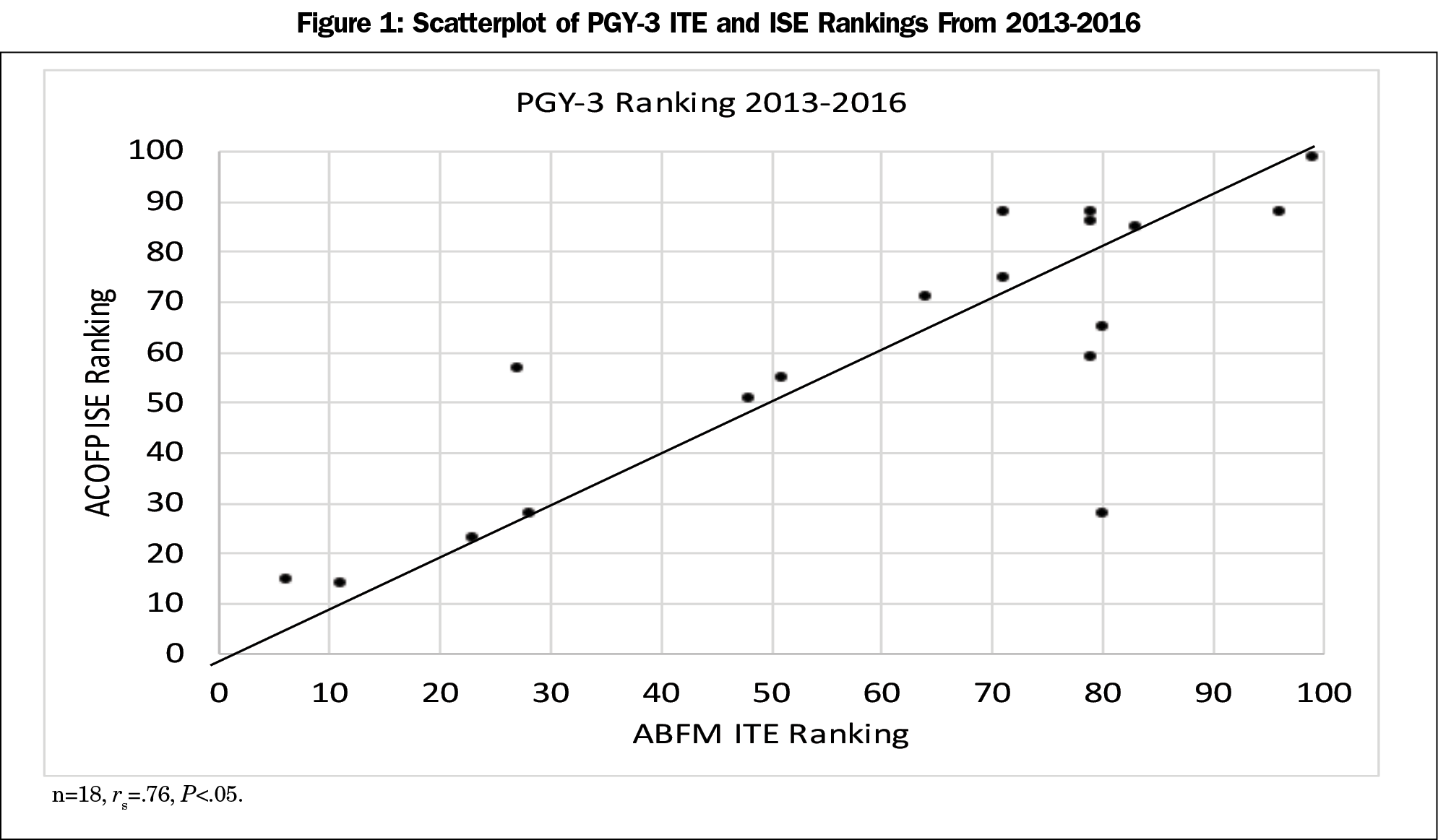

Results: The correlation between ISE and ITE rankings was moderately high and significantly different from zero (rs=.76, P<0.05). A Wilcoxon signed rank test indicated that the median ISE score of 62 was not statistically significantly different than the median ITE score of 71 (Z=-0.74, P=0.46, 2-tailed).

Conclusions: The lack of a statistically significant difference between the ISE scores and the ITE scores of the PGY-3 residents suggests that the cohort of osteopathic residents in family medicine residency programs and the cohort of residents in ACGME-accredited programs seem to be of comparable ability. There is therefore no clear justification for administering both examinations.

With few exceptions, family medicine residency programs accredited by the Accreditation Council for Graduate Medical Education (ACGME) and the American Osteopathic Association (AOA) require their resident physicians to take the American Board of Family Medicine’s (ABFM) In-Training Examination (ITE) and the American College of Osteopathic Family Physicians’ (ACOFP) In-Service Examination (ISE). With the implementation of the single accreditation system (SAS), the question arises, is it necessary to administer both examinations?

In 1964, the American Board of Neurological Surgery developed the first ITE for a medical specialty in an attempt to reduce the high failure rate on their certification examination.1 There are many studies regarding the power of ITEs to predict outcomes on their corresponding certification examination. These include surgery,4-8 internal medicine,9-12 psychiatry and neurology,13-15 radiology,16,17 pediatrics,18,19 obstetrics and gynecology,20,21 anesthesiology,22 orthopedic surgery,23 pathology,24 and family medicine.2,3,25 ITEs are also used by residency programs to meet the ACGME’s Common Program Requirement IV.A.5.b, which reads:

Residents must demonstrate knowledge of established and evolving biomedical, clinical, epidemiological and social-behavioral sciences, as well as the application of this knowledge to patient care.26

The ABFM’s ITE and the ACOFP’s ISE have historically served only their own discipline’s learners—allopathic or osteopathic family physicians. However, the distinctiveness of these groups is quickly disappearing. In 2015, ACGME-accredited family medicine residency programs accepted 2,463 resident physicians, one-third of whom were osteopathic medical school graduates.27 At the same time, a quarter of these programs were dually accredited by both the ACGME and the AOA.28 The AOA will no longer accredit programs after June 2020 and will instead rely on the SAS.29,30

With the implementation of SAS, the burden of administering both exams seems unnecessary. Yet some residencies that teach osteopathic patient care principles and practices (OPP) may wish to have those skills and practices recognized by applying for ACGME Osteopathic Recognition.30,31 ACGME Osteopathic Recognition requires programs to assess resident “knowledge of osteopathic principles and practice in the specialty…through a specialty-specific osteopathic in-service examination or other equivalent formal exam (VB1a).” The ISE clearly satisfies this requirement. But this raises the question of whether administering the ITE to these same learners is redundant.

We developed a pilot study to look at this question. One purpose of this study was to assess whether the degree of similarity for the construct of family medicine knowledge and clinical decision-making as measured by both the ITE and ISE was high enough to be considered equivalent. This study used data from three dually accredited family medicine residency programs to answer this question. If the examinations are substantively and functionally equivalent, then administering both incurs additional costs, removes trainees from clinical experiences, and places additional administrative burdens on programs without providing any additional value. If the examinations are not equivalent, then administering both may be warranted, but the rationale for doing so should be articulated and deemed worth the effort.

The second purpose of the study was to assess how similar the ISE resident ability distribution is to the ITE resident ability distribution. Because all residency programs will be accredited by the ACGME starting in 2020, this comparison would provide an insight for the coming large influx of osteopathic residents into the ACGME system.

The Institutional Review Board at the Medical College of Wisconsin deemed this study exempt, and the Designated Institutional Official (DIO) for graduate medical education at the Medical College of Wisconsin approved the study.

Eighteen third-year osteopathic family medicine residents enrolled in three residency programs accredited by the ACGME and the AOA from 2013 to 2016 in urban or suburban settings in the upper Midwest participated in the study. An administrative assistant not associated with the study deidentified all resident ITE and ISE scores.

The ACOFP’s ISE has approximately 220 scored items. In 2015, 2,565 family medicine residents took the ISE with a likely equal distribution coming from each of the 3 years of training.32 The results are reported to program directors in the form of program year (PGY)-specific percentiles, overall percentiles, and standard scores that have a mean of 500 and a standard deviation of 100.32 The exam is administered by a different organization than the osteopathic family medicine certifying board and the questions on the ISE are not linked to the certifying exam.
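As a rough illustration of the standard-score scale described above, the sketch below converts a score to a z-score using the reported mean of 500 and standard deviation of 100. The percentile step assumes an approximately normal score distribution, which the source does not state. The function names and the example score of 600 are hypothetical, and the percentiles actually reported to program directors may be computed differently.

```python
from statistics import NormalDist

ISE_MEAN = 500  # mean of the ISE standard-score scale, as reported in the text
ISE_SD = 100    # standard deviation of the scale, as reported in the text


def z_from_standard_score(score):
    """Express a standard score as a distance from the mean in SD units."""
    return (score - ISE_MEAN) / ISE_SD


def approx_percentile(score):
    """Approximate percentile for a standard score.

    ASSUMPTION: scores are roughly normally distributed. The source does
    not say this, so treat the result as illustrative only.
    """
    return 100 * NormalDist().cdf(z_from_standard_score(score))


# Hypothetical example score, one standard deviation above the mean.
example_score = 600
print(z_from_standard_score(example_score))
print(round(approx_percentile(example_score), 1))
```

Under the normality assumption, a score one standard deviation above the mean falls at roughly the 84th percentile.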

The ABFM’s ITE contains 240 multiple-choice items and is built to the same specifications as the Family Medicine Certification Examination (FMCE).2 Because the ITE is constructed to similar specifications and equated onto the FMCE scale using the dichotomous Rasch model, the ITE scores are highly correlated with the scores examinees would have earned on the FMCE, had they taken it instead of the ITE.2 Three dually accredited residency programs administered both the ACOFP’s ISE and the ABFM’s ITE to their residents for 8 years prior to the implementation of SAS.

A repeated measures design was used to determine how similar and how different the rankings of third-year residents were with regard to their knowledge of family medicine as measured by the ISE and ITE. This was accomplished by administering both the ITE and the ISE to third-year osteopathic family medicine residents within a span of a few weeks of each other. Each examinee represented a link between the population of examinees taking the ITE and the population of examinees taking the ISE. By comparing the ranks of these connector examinees, correlations and signed rank tests can be used to draw conclusions about the similarities of the two examinations and the differences between these two populations.

A correlation indicates the extent to which the two tests may be measuring the same construct. Even a correlation of 1.0 does not mean that the two populations are the same, because there could be a constant shift that moves the trend line off the identity line while leaving it parallel to it. Because the data were percentile ranks, the Spearman rank-order correlation was used to estimate the similarity between the two sets of ranks. A high correlation would suggest that the two examinations are measuring similar constructs. A signed rank test is used to determine whether two dependent, related, or paired samples were selected from populations having the same distribution.
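The point about a constant shift can be made concrete with a small sketch. The percentile ranks below are invented for illustration and are not the study data. The function names are hypothetical, and the Wilcoxon statistic uses a plain normal approximation without tie or continuity corrections, which may differ from the software the authors used.

```python
import math


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions i..j are 0-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman correlation: the Pearson correlation computed on ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / math.sqrt(var_x * var_y)


def wilcoxon_signed_rank(x, y):
    """Return (W_plus, W_minus, z) for paired samples.

    Zero differences are dropped. z uses the normal approximation with
    no tie or continuity correction (a simplifying assumption).
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    mean_w = n * (n + 1) / 4
    sd_w = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (min(w_plus, w_minus) - mean_w) / sd_w
    return w_plus, w_minus, z


# HYPOTHETICAL percentile ranks for eight examinees (not the study data).
exam_a = [35, 42, 50, 58, 63, 71, 80, 88]
# Same ordering of examinees, but every rank is shifted up by 5 points.
exam_b = [a + 5 for a in exam_a]

rho = spearman_rho(exam_a, exam_b)
w_plus, w_minus, z = wilcoxon_signed_rank(exam_a, exam_b)
print(rho)                 # the orderings are identical, so rho is 1.0
print(w_plus, w_minus, z)  # every difference is negative, so W_plus is 0
```

In this toy case the correlation is perfect because the two exams order the examinees identically, yet the signed rank statistic is at its extreme because every examinee scores lower on the first exam. The two statistics therefore answer different questions, which is why the study reports both.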

The correlation between the ISE and ITE rankings was moderately high and significantly different from zero (rs=.76, P<0.05). Figure 1 illustrates the correlational relationship. If the correlations were disattenuated for the unreliability of the examinations, the correlation would increase to rs=.92.
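For readers unfamiliar with disattenuation, the classical correction for attenuation divides the observed correlation by the geometric mean of the two tests' reliabilities. The reliabilities are not reported in the text, so the symbols r_xx and r_yy below are placeholders, and it is an assumption that this is the correction the authors applied. The implied value on the right is back-calculated only from the two correlations given above.

$$
r_{\text{corrected}} = \frac{r_{\text{observed}}}{\sqrt{r_{xx}\, r_{yy}}}
\qquad\Longrightarrow\qquad
\sqrt{r_{xx}\, r_{yy}} = \frac{r_{\text{observed}}}{r_{\text{corrected}}} = \frac{0.76}{0.92} \approx 0.83
$$

Under that assumption, the product of the two reliabilities would be roughly 0.68.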

A Wilcoxon signed rank test indicated that the median ISE score of 62 was not statistically significantly different than the median ITE score of 71 (Z=-0.74, P=0.46, 2-tailed).

There were two instances in which unexpected variables contributed to a highly discrepant score (Figure 1). Excluding the two outliers (A and B), the Spearman ρ correlation would increase from 0.76 to 0.85. Excluding the outliers did not change the nonsignificant result of the Wilcoxon signed rank test (Z=-0.95, P=0.35, 2-tailed).

Although the family medicine ITE and ISE examinations have been administered for years, there are no previous studies comparing the congruence of resident scores on these examinations. The results of our study demonstrate that among PGY-3 residents the correlation between the percentile ranking on the ITE and the ISE is quite high. The resulting correlation is even higher if the two outliers are dropped. Although there are only 18 linkages noted in the study, these 18 linkages represent the entire group of residents who took both exams during our study period, which is a much higher number. Additionally, a statistical test for signed differences failed to show a difference between the two sets of rankings.

Our findings suggest one of two possibilities: family medicine as a whole embraces many of the osteopathic patient-care principles, or the ISE is significantly more about broad-spectrum family medicine than it is about osteopathic principles. From a policy perspective, one might ask whether the ACGME should require the ACOFP’s ISE for Osteopathic Recognition in family medicine.

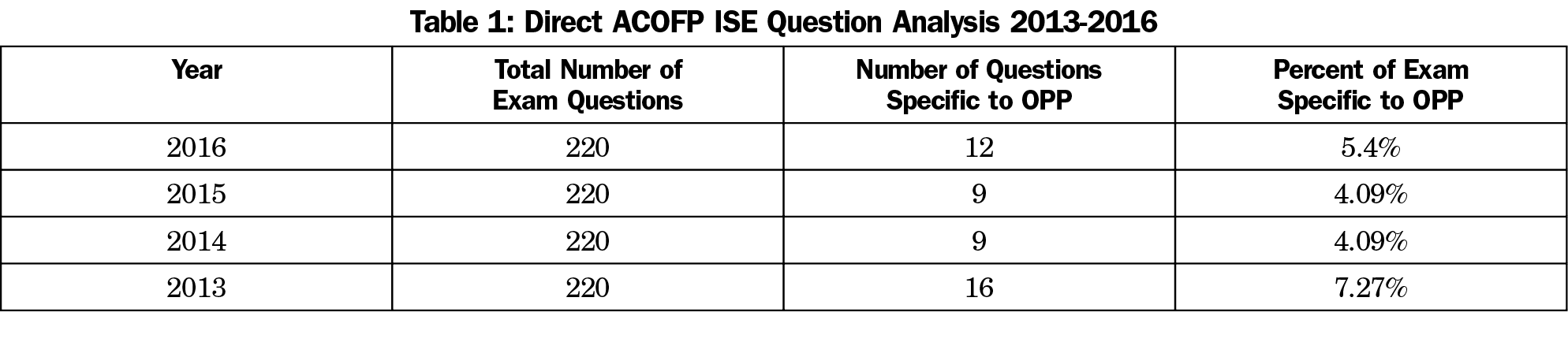

Recent analysis of the ISE has revealed that only 4%-7% of the questions on the exams administered from 2013-2016 were specific to OPP (Table 1). If this assumption is true, then it might follow that the ISE is a poor instrument to use to satisfy the ACGME’s requirements for osteopathic recognition. To better support the intent of the ACGME’s program requirements for osteopathic recognition, ACOFP could realign the content of their examination to be more focused on OPP and less focused on the entire breadth of family medicine.

The main limitations of this study are its small sample size and the limited geographic location of the sample. Having a larger sample size and broader representation of residencies would have increased the generalizability of the results. While this study provides support for the assertion that the ITE and the ISE measure similar constructs, it does not provide a conversion of ITE scores to ISE scores or vice versa. This is not possible because the ITE is a criterion-referenced test that reports interval scale measures while the ISE is a norm-referenced test that reports rankings. There are also no studies comparing the ABFM’s FMCE with the American Osteopathic Board of Family Physicians’ (AOBFP) certification examination. Although there are studies that compare the performance of graduates from osteopathic medical schools and graduates of allopathic medical schools on the ABFM certification examination,33 there are no studies comparing resident performance across the osteopathic and allopathic certification examinations. It would be helpful to the family medicine community if AOBFP and ABFM could collaborate on such a study.

Previously, dual accreditation has enabled family medicine residency programs to recruit both osteopathic and allopathic applicants who are interested in training at sites that will provide training in osteopathic principles. Now, programs can achieve this same aim with SAS and ACGME Osteopathic Recognition.28 The results of our study indicate that the ACOFP’s ISE may not adequately measure osteopathic principles to assure program compliance with osteopathic recognition requirements. Furthermore, the lack of a difference on the Wilcoxon signed rank test between the ISE scores and the ITE scores of the PGY-3 residents in this study suggests that osteopathic residents and allopathic residents have similar knowledge bases. These preliminary findings may help to offset concerns about how well prepared osteopathic residents are for family medicine residency programs, which have primarily recruited allopathic residents.

Acknowledgments

Dr Thomas R. O’Neill is employed by the American Board of Family Medicine and oversees the development and scoring of one of the examinations studied in this report.

References

- Hubbard J, Levit E. The National Board of Medical Examiners: the first seventy years. Philadelphia, PA: National Board of Medical Examiners; 1985.

- O’Neill TR, Li Z, Peabody MR, Lybarger M, Royal K, Puffer JC. The predictive validity of the ABFM’s In-Training Examination. Fam Med. 2015;47(5):349-356.

- O’Neill TR, Peabody MR, Song H. The predictive validity of the National Board of Osteopathic Medical Examiners’ COMLEX-USA Examinations with regard to outcomes on American Board of Family Medicine Examinations. Acad Med. 2016;91(11):1568-1575. https://doi.org/10.1097/ACM.0000000000001254

- Garvin PJ, Kaminski DL. Significance of the in-training examination in a surgical residency program. Surgery. 1984;96(1):109-113.

- Biester TW. A study of the relationship between a medical certification examination and an in-training examination. Chicago, IL: American Educational Research Association; 1985.

- Biester TW. The American Board of Surgery In-Training Examination as a predictor of success on the qualifying examination. Curr Surg. 1987;44(3):194-198.

- Jones AT, Biester TW, Buyske J, Lewis FR, Malangoni MA. Using the American Board of Surgery in-training examination to predict board certification: a cautionary study. J Surg Educ. 2014;71(6):e144-148. https://doi.org/10.1016/j.jsurg.2014.04.004

- Shetler PL. Observations on the American Board of Surgery In-Training examination, board results, and conference attendance. Am J Surg. 1982;144(3):292-294. https://doi.org/10.1016/0002-9610(82)90002-2

- Grossman RS, Fincher R-ME, Layne RD, Seelig CB, Berkowitz LR, Levine MA. Validity of the in-training examination for predicting American Board of Internal Medicine certifying examination scores. J Gen Intern Med. 1992;7(1):63-67. https://doi.org/10.1007/BF02599105

- Waxman H, Braunstein G, Dantzker D, et al. Performance on the internal medicine second-year residency in-training examination predicts the outcome of the ABIM certifying examination. J Gen Intern Med. 1994;9(12):692-694. https://doi.org/10.1007/BF02599012

- Rollins LK, Martindale JR, Edmond M, Manser T, Scheld WM. Predicting pass rates on the American Board of Internal Medicine certifying examination. J Gen Intern Med. 1998;13(6):414-416. https://doi.org/10.1046/j.1525-1497.1998.00122.x

- Babbott SF, Beasley BW, Hinchey KT, Blotzer JW, Holmboe ES. The predictive validity of the internal medicine in-training examination. Am J Med. 2007;120(8):735-740. https://doi.org/10.1016/j.amjmed.2007.05.003

- Webb LC, Juul D, Reynolds CF III, et al. How well does the psychiatry residency in-training examination predict performance on the American Board of Psychiatry and Neurology. Part I. Examination? Am J Psychiatry. 1996;153(6):831-832. https://doi.org/10.1176/ajp.153.6.831

- Goodman JC, Juul D, Westmoreland B, Burns R. RITE performance predicts outcome on the ABPN Part I examination. Neurology. 2002;58(8):1144-1146. https://doi.org/10.1212/WNL.58.8.1144

- Juul D, Schneidman BS, Sexson SB, Fernandez F, Beresin EV, Ebert MH, Winstead DK, Faulkner LR. Relationship between Resident-In-Training Examination in Psychiatry and subsequent examination performances. Acad Psychiatry. 2009;33(5):404-406. https://doi.org/10.1176/appi.ap.33.5.404

- Baumgartner BR, Peterman SB. Relationship between American College of Radiology in-training examination scores and American Board of Radiology written examination scores. Acad Radiol. 1996;3(10):873-878. https://doi.org/10.1016/S1076-6332(96)80281-9

- Baumgartner BR, Peterman SB. 1998 Joseph E. Whitley, MD, Award. Relationship between American College of Radiology in-training examination scores and American Board of Radiology written examination scores. Part 2. Multi-institutional study. Acad Radiol. 1998;5(5):374-379. https://doi.org/10.1016/S1076-6332(98)80156-6

- McCaskill QE, Kirk JJ, Barata DM, Wludyka PS, Zenni EA, Chiu TT. USMLE step 1 scores as a significant predictor of future board passage in pediatrics. Ambul Pediatr. 2007;7(2):192-195. https://doi.org/10.1016/j.ambp.2007.01.002

- Althouse LA, McGuinness GA. The in-training examination: an analysis of its predictive value on performance on the general pediatrics certification examination. J Pediatr. 2008;153(3):425-428. https://doi.org/10.1016/j.jpeds.2008.03.012

- Spellacy WN, Carlan SJ, McCarthy JM, Tsibris JC. Prediction of ABOG written examination performance from the third-year CREOG in-training examination results. J Reprod Med. 2006;51(8):621-622.

- Withiam-Leitch M, Olawaiye A. Resident performance on the in-training and board examinations in obstetrics and gynecology: implications for the ACGME Outcome Project. Teach Learn Med. 2008;20(2):136-142. https://doi.org/10.1080/10401330801991642

- Kearney RA, Sullivan P, Skakun E; American Board of Anesthesiology-American Society of Anesthesiologists. Royal College of Physicians and Surgeons of Canada. Performance on ABA-ASA in-training examination predicts success for RCPSC certification. Can J Anaesth. 2000;47(9):914-918. https://doi.org/10.1007/BF03019676

- Klein GR, Austin MS, Randolph S, Sharkey PF, Hilibrand AS. Passing the Boards: can USMLE and Orthopaedic in-Training Examination scores predict passage of the ABOS Part-I examination? J Bone Joint Surg Am. 2004;86-A(5):1092-1095. https://doi.org/10.2106/00004623-200405000-00032

- Rinder HM, Grimes MM, Wagner J, Bennett BD; RISE Committee, American Society for Clinical Pathology and the American Board of Pathology. Senior pathology resident in-service examination scores correlate with outcomes of the American Board of Pathology certifying examinations. Am J Clin Pathol. 2011;136(4):499-506. https://doi.org/10.1309/AJCPA7O4BBUGLSWW

- Leigh TM, Johnson TP, Pisacano NJ. Predictive validity of the American Board of Family Practice In-Training Examination. Acad Med. 1990;65(7):454-457. https://doi.org/10.1097/00001888-199007000-00009

- Accreditation Council for Graduate Medical Education. ACGME Common Program Requirements. Chicago: ACGME; 2017. https://www.acgme.org/What-We-Do/Accreditation/Common-Program-Requirements. Accessed August 30, 2018.

- Kozakowski KM, Travis A, Bentley A, Fetter G Jr. Entry of US medical school graduates into family medicine residencies: 2015–2016. Fam Med. 2016;48(9):688-695.

- Mims LD, Bressler LC, Wannamaker LR, Carek PJ. The effect of dual accreditation on family medicine residency programs. Fam Med. 2015;47(4):292-297.

- Accreditation Council for Graduate Medical Education. Timeline for AOA-approved programs to apply for ACGME accreditation. Chicago: ACGME; 2014. http://www.acgme.org/acgmeweb/Portals/0/PDFs/Nasca-Community/Timeline.pdf. Accessed June 2017.

- Miller T, Jarvis J, Waterson Z, Clements D, Mitchel K. Osteopathic recognition: when, what, how and why? Ann Fam Med. 2017;15:91.

- Accreditation Council for Graduate Medical Education. Osteopathic Recognition Requirements. Chicago: ACGME; 2015.

- Zipp C. ACOFP In-Service Exam Update and Question Writing. https://www.acofp.org/ACOFPIMIS/Acofporg/PDFs/ACOFP16/Handouts/PD/Weds_am_0830_Zipp_Christoper_Question%20Writing%20In-Service%20Exam.pdf. Accessed April 20, 2017.

- O’Neill TR, Royal KD, Schulte BM, Leigh T. Comparing the performance of allopathically and osteopathically trained physicians on the American Board of Family Medicine’s Certification Examination. ERIC ED506669; Published July 2009. https://eric.ed.gov/?id=ED506669. Accessed April 30, 2010.
