Background and Objectives: Reports of innovations in evidence-based medicine (EBM) training have focused on curriculum design and knowledge gained. Little is known about the educational culture and environment for EBM training and the extent to which those environments exist in family medicine residencies in the United States.

Methods: A literature review on this topic identified a validated EBM environment scale intended for learner use. This scale was adapted for completion by family medicine residency program directors (PDs) and administered through an omnibus survey. Responses to this scale were analyzed descriptively with program and PD demographics. An EBM culture score was calculated for each program and regressed on the demographic variables that were significantly associated with it.

Results: In our adapted survey, family medicine PDs generally rated their residencies high on the EBM culture scale, but admitted to challenges with faculty feedback to residents about EBM skills, ability to protect time for EBM instruction, and clinician skepticism about EBM. In linear regression analysis, the mean summary score on the EBM scale was lower for female PDs and in programs with a higher proportion of international medical school graduates.

Conclusions: To improve the culture for EBM teaching, family medicine residency programs should focus on faculty engagement and support and the allocation of sufficient time for EBM education.

Training in evidence-based medicine (EBM) skills, such as critical appraisal of medical research literature and its judicious, conscientious, and explicit application to clinical practice,1 has been an integral component of family medicine residency education since the early 2000s. Its importance is reflected primarily in the Accreditation Council for Graduate Medical Education’s (ACGME’s) Practice-Based Learning and Improvement competency and related milestones, and in some of the related, newly developed entrustable professional activities (EPAs). The existing educational literature on this topic has mainly focused on describing the variety and content of EBM curricula, and on assessment of knowledge and skills related to the practice of EBM.2-4 Several of this paper’s authors (J.E., J.H., D.W., G.C.), all members of the Society of Teachers of Family Medicine (STFM) Group on Evidence-Based Medicine, reflected on the status of EBM teaching in medical school and in family medicine residency programs. From our collective anecdotal experience, we noted that in many schools and programs this teaching is relegated to a small minority of faculty members who possess expertise and interest in this area.

Research into either specifically designated or hidden curricula in US medical school training reveals that the culture of an educational program can either reinforce or subvert curricular initiatives.5 We believe role modeling and a supportive training environment are essential components in all aspects of medical school education and residency training. However, to date, these aspects of training in EBM have not been well explored in the educational literature. A literature review revealed several cultural factors that could influence the success of EBM training curricula, such as authentic curricular exercises, role modeling, and sufficient resources.3,6-18

The best quality work in this area was a survey study by Mi, et al that examined the EBM training environment from a learner’s perspective.19,20 Extensive survey validation was performed, and the results showed that a supportive EBM learning culture, as perceived by the trainees, was important in EBM education. We recognized the opportunity to complement this research with a nationwide survey of residency program directors in family medicine, using a modified form of the Mi, et al survey, in order to assess the extent to which programs possessed the elements of this supportive EBM culture.

Survey Creation and Data Collection

We adapted the validated survey from Mi, et al containing 36 items in seven subscales for use in the Council of Academic Family Medicine Educational Research Alliance’s (CERA’s) Program Directors Survey, an omnibus survey conducted annually.21 We eliminated items from two subscales of Mi’s EBM scale that focused solely on learner attitudes and accountability. The remaining subscales focused on resource availability, social support, learning support, situational cues, and learning culture. Because of the limitations on survey length in CERA surveys, we trimmed duplicative items from the Mi survey, but retained key questions from each subscale. We incorporated the resulting 13 items into the final CERA survey. These items, which formed the EBM Culture Scale (ECS), asked respondents to rate their agreement with the item statements on a 5-point Likert scale from 1 (strongly disagree) to 5 (strongly agree). The survey was pretested for clarity with family medicine educators who were not part of the target survey population. The American Academy of Family Physicians Institutional Review Board approved the project in January 2015. The survey (deployed using SurveyMonkey22) was sent by email to all US family medicine residency program directors identified by the Association of Family Medicine Residency Directors. Data were collected from January 2015 to March 2015, and two follow-up reminders were sent to enhance the response rate.

Analysis

We analyzed the survey results descriptively for response rate, demographic information of the respondents, and information about the programs. We assessed the internal consistency of the survey items using Cronbach α. We examined the frequencies and means of the responses to the ECS items and created an overall ECS score by summing the scores for the 13 items (possible range 13 to 65). We then fit a linear regression model of the overall ECS score, using as predictors the demographic variables from the larger survey that were significantly associated with the ECS score in a priori testing. All analyses were conducted using R version 3.2.2, with the psych package.23,24
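The scoring and reliability steps described above can be illustrated with a short sketch. The authors ran their analysis in R with the psych package; the Python below is not their code, only a minimal stand-in that assumes complete responses (no missing items) and uses the standard sample-variance form of Cronbach α. The function names `ecs_total` and `cronbach_alpha` and the example ratings are hypothetical.

```python
from statistics import variance

N_ITEMS = 13          # number of ECS items reported in the paper
SCALE = range(1, 6)   # 5-point Likert scale, 1 (strongly disagree) to 5 (strongly agree)


def ecs_total(ratings):
    """Sum one respondent's item ratings into an overall ECS score (13 to 65)."""
    if len(ratings) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} ratings, got {len(ratings)}")
    if any(r not in SCALE for r in ratings):
        raise ValueError("ratings must be integers from 1 to 5")
    return sum(ratings)


def cronbach_alpha(rows):
    """Cronbach's alpha for complete data: rows are respondents, columns are items.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(row totals))
    """
    k = len(rows[0])
    if k < 2 or len(rows) < 2:
        raise ValueError("need at least two items and two respondents")
    item_vars = [variance([row[i] for row in rows]) for i in range(k)]
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical ratings from four program directors (illustration only, not study data).
responses = [
    [5, 5, 4, 4, 5, 4, 3, 4, 5, 4, 4, 3, 5],
    [4, 4, 4, 3, 4, 4, 3, 3, 4, 4, 3, 3, 4],
    [5, 4, 5, 4, 5, 5, 4, 4, 5, 5, 4, 4, 5],
    [3, 3, 3, 2, 4, 3, 2, 3, 4, 3, 3, 2, 4],
]
totals = [ecs_total(r) for r in responses]
print("ECS totals:", totals)
print("Cronbach alpha:", round(cronbach_alpha(responses), 2))
```

A real analysis would also need a rule for respondents who skipped items, which this sketch deliberately leaves out.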

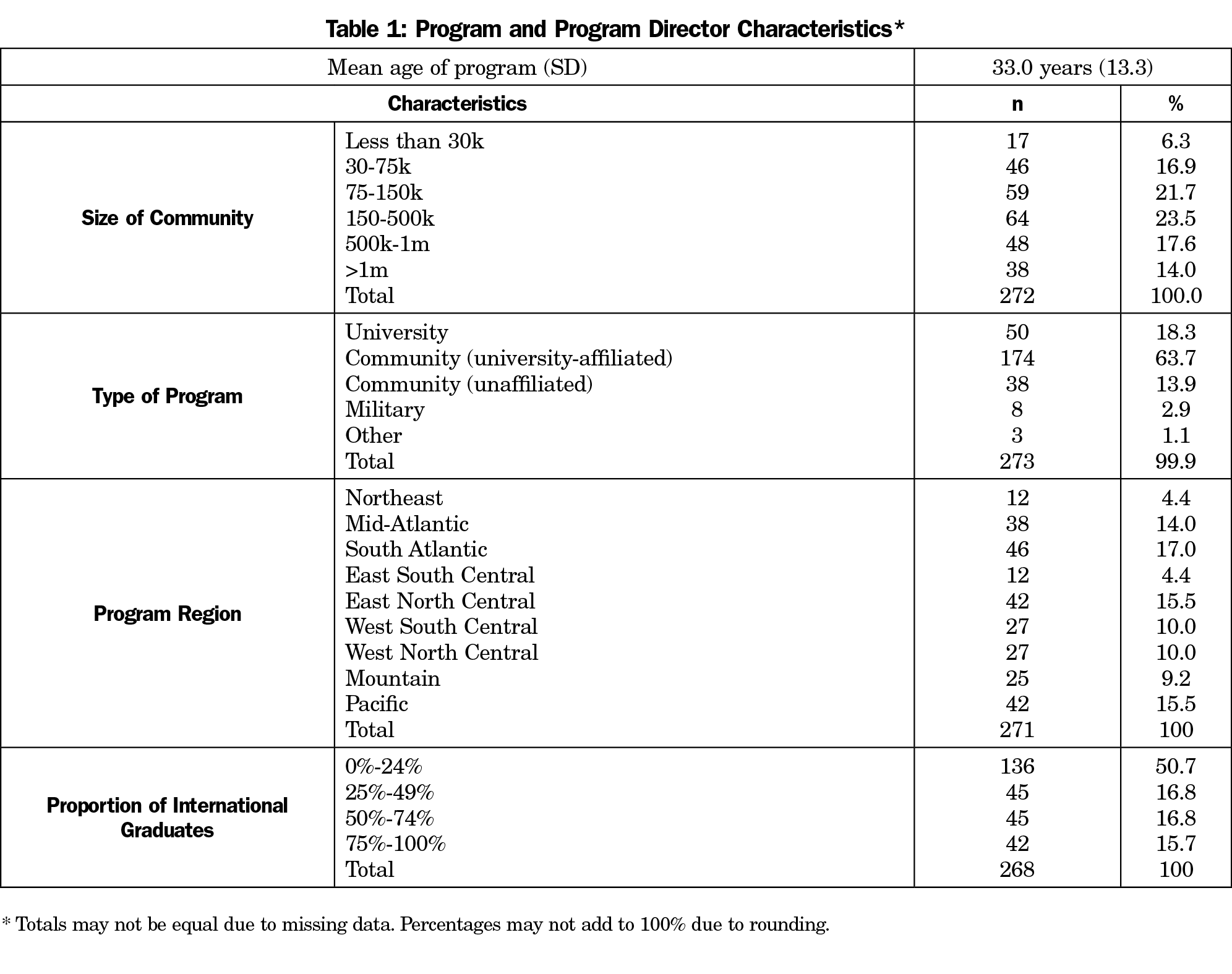

We received 274 responses out of a sampled population of 452 (60.6% response rate). The respondents were mostly male (64%) and had a median of 4 years of experience as program director (interquartile range 1.5 to 7.5 years). The mean age of the respondents’ residency programs was 33.1 years (SD 13.3 years); further program characteristics are shown in Table 1. The survey showed a good level of internal consistency (Cronbach α of 0.85 [95% CI 0.81-0.89]).

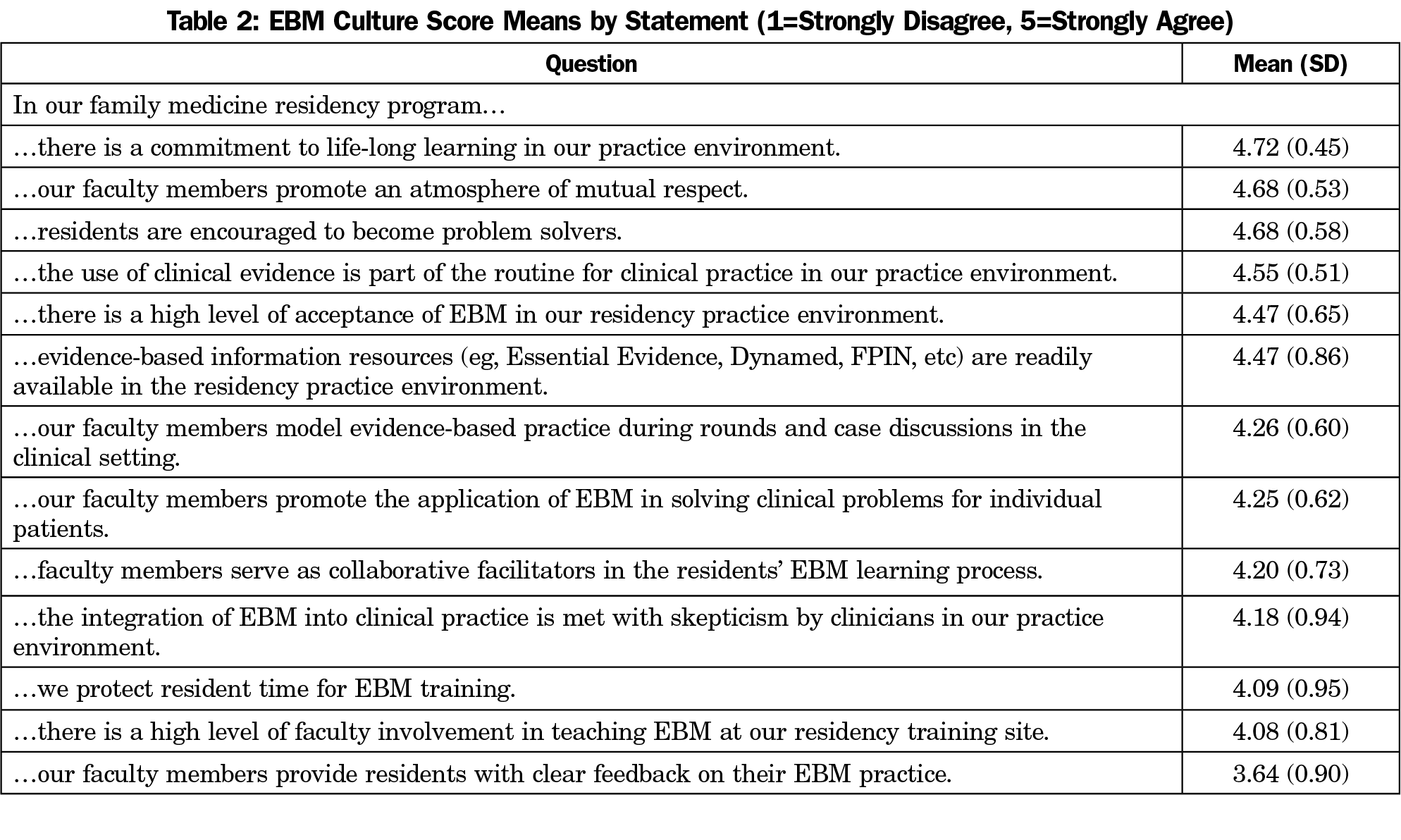

The mean score of each ECS item is shown in Table 2. The most strongly endorsed items included the practice environment’s commitment to lifelong learning, the program’s cultivation of an atmosphere of mutual respect, and the encouragement of residents as problem solvers. The least strongly endorsed items included faculty feedback to residents about EBM skills, faculty involvement in teaching EBM, and protection of resident time for EBM training. The mean overall ECS score was 56.3 (SD 5.64) out of a maximum score of 65, and most program directors gave their programs an overall score greater than 50.

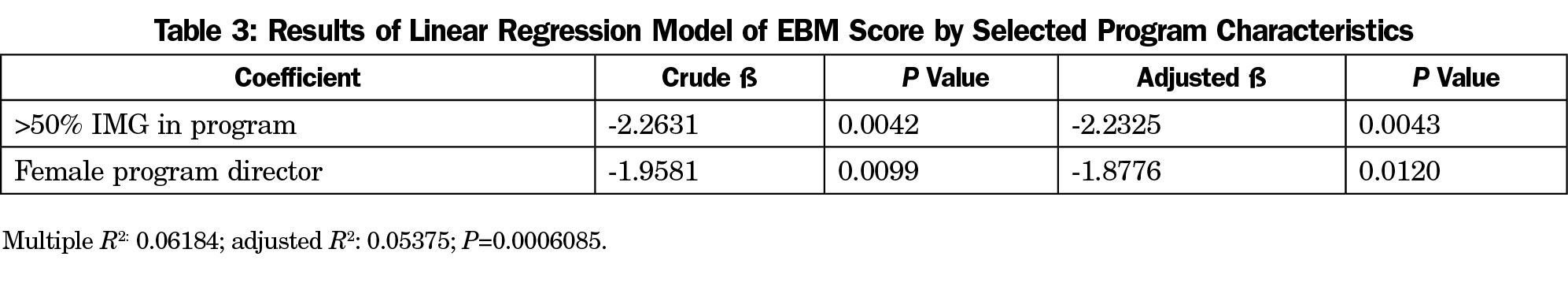

We explored the association of overall ECS score with each of the demographic items as a priori testing for our linear regression analysis. Only the gender of the program director (t=2.47, P=0.01) and the proportion of international medical graduates (IMGs) in the program (ANOVA, F=2.43, P=0.048) were significantly associated with the overall ECS score. Further a priori testing revealed a bifurcation of the proportion of international graduates variable at 50%, and we created a corresponding dichotomous variable (proportion of IMGs over 50% or not) for use in the analysis. We performed a linear regression analysis of the overall ECS score using program director gender and proportion of IMGs over 50% (Table 3). Female gender of the program director predicted a 1.88-point decrease (95% CI 0.41 to 3.34 points) in the overall ECS score. Despite some correlation seen in a priori testing between program director gender and years of experience (P=0.003), adding years as program director did not contribute significantly to the model. Proportion of IMGs over 50% in the program predicted a 2.23-point decrease (95% CI 0.71 to 3.75) in the ECS score.
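The structure of the regression described above, an overall ECS score modeled on two yes/no predictors, can be sketched as follows. This is an illustrative Python stand-in rather than the authors' R code: it assumes ordinary least squares with an intercept, codes "over 50%" as strictly greater than 0.5, and does not compute the confidence intervals reported in the paper. The helper names `design_row` and `fit_ols` and the program data are hypothetical.

```python
def design_row(female_pd, img_proportion):
    """Intercept, female-PD indicator, and indicator for IMG proportion over 50%."""
    return [1.0, 1.0 if female_pd else 0.0, 1.0 if img_proportion > 0.5 else 0.0]


def fit_ols(X, y):
    """Ordinary least squares via the normal equations (X'X) b = X'y."""
    p = len(X[0])
    # Build the augmented matrix [X'X | X'y].
    A = [
        [sum(row[i] * row[j] for row in X) for j in range(p)]
        + [sum(row[i] * yi for row, yi in zip(X, y))]
        for i in range(p)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        if abs(A[col][col]) < 1e-12:
            raise ValueError("design matrix is rank deficient")
        for r in range(p):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    # The left block is now diagonal; divide through to get the coefficients.
    return [A[i][p] / A[i][i] for i in range(p)]


# Hypothetical programs: (PD is female, proportion of IMGs, overall ECS score).
programs = [
    (False, 0.10, 58), (False, 0.20, 57), (True, 0.15, 56), (True, 0.30, 55),
    (False, 0.70, 55), (False, 0.60, 56), (True, 0.80, 53), (True, 0.55, 54),
]
X = [design_row(f, p) for f, p, _ in programs]
y = [score for _, _, score in programs]
intercept, b_female, b_img = fit_ols(X, y)
print(f"intercept={intercept:.2f}, female PD={b_female:.2f}, IMG>50%={b_img:.2f}")
```

With this coding, each coefficient is the expected difference in ECS score for a program with that characteristic compared with one without it, holding the other predictor fixed, so a negative coefficient corresponds to the kind of point decrease reported in Table 3.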

Overall, this survey provides thought-provoking data about program directors’ self-assessment of their programs’ learning environments for teaching EBM. Most program directors rated their programs high on the EBM scale, which may result from both a global expectation (from the ACGME and others) that residencies teach EBM well and a natural tendency toward positive self-assessment. Examining the individual items, it seems that the involvement of faculty in EBM teaching and their delivery of feedback to the residents about their EBM skills present the most challenges. This could be due to the time available to deliver this feedback, the faculty’s self-efficacy in EBM skills, the faculty’s self-efficacy in teaching and assessing EBM skills, or the perceived effectiveness of this feedback. Program directors generally rated the atmosphere and environmental statements better than the specifics of EBM teaching or the EBM-related teaching infrastructure.

The difference in the prediction of overall EBM score by program director gender could be explained by a documented tendency of women to self-evaluate more harshly than men concerning managerial and performance-related domains.25,26 There is no obvious mechanism by which this prediction would represent a true difference. For the lower EBM scores associated with a higher proportion of IMGs, some research has suggested that a lack of EBM education in medical school may underlie the relationship.27 In addition, smaller and less-established residency programs frequently have a high proportion of IMGs, so we postulate that there may be less overall curricular infrastructure in these programs. There may also be greater curricular attention to acculturation and communication issues in these residencies, thereby displacing other curricular elements. This finding deserves more study examining both factual curriculum components and perceptions or attitudes toward teaching of EBM-integrated knowledge.

This study is limited by the adaptation of a previously validated survey for use with a different respondent population. In order to incorporate the ECS scale within the omnibus CERA survey, the original scale developed and validated by Mi was modified by the elimination of a number of items. As expected, the original factor structure identified by Mi was not preserved in the modified instrument. However, all remaining items were closely associated with one another, and the most efficient analytic step was to utilize the overall ECS score in the regression analysis. It is possible that use of the original, complete ECS scale as validated by Mi would have yielded different results. However, we feel that the careful selection of the applicable factors from the original Mi survey and the thematically consistent results we obtained enable useful conclusions to be drawn.

Another limitation is the highly subjective self-assessment of residency program characteristics, which depends on the respondents’ ability to meaningfully compare their program to a larger and undefined standard. We also attempted to collect data about the number of residency slots per year in each program, but the data were clearly unreliable: many respondents appeared to report the total number of slots in the residency program, and we did not have an acceptable method for distinguishing the errors from actual data.

The strengths of this study include the overall response rate and the adaptation of a validated survey. Because the respondents were broadly representative in geography, community size, and type of program (see Table 1), and because the response rate was reasonably high (60.6%), these findings are likely generalizable to all US family medicine residencies.

The findings identify important EBM contextual factors that were confirmed in another recent study by Bergh, et al: knowledge and learning materials; learner support; general relationships and support; institutional focus on EBM; education, training, and supervision; EBM application opportunities; and affirmation of EBM environment.28 Our findings are also in accordance with those of several other studies indicating the importance of these environmental and contextual factors, which contribute to a culture that promotes or sustains the learning and adoption of EBM. Specifically, those studies noted the importance of faculty role modeling, time dedicated to the EBM curriculum, institutional support, collaboration with library and similar nonmedical faculty, and leadership commitment.29-31

The results of this study have implications for residency programs that are developing and implementing an EBM intervention. Effective learning outcomes and application of EBM knowledge and skills are associated with learner factors (eg, prior EBM training and experience) and learning support through faculty feedback, protected time, role modeling, or mentoring. Based on the survey results, we suggest that to improve the delivery of an EBM curriculum in residency, program directors should focus on authentic activity (eg, integrating and reinforcing EBM teaching in the clinical environment), whole-residency involvement and commitment, and a suitable general and EBM-specific infrastructure for learning and application of learning. Future research is warranted to investigate the impact of affective and environmental factors on EBM learning and practice, so that EBM training can be designed and implemented to optimize its outcomes, improve patient care, and promote residents’ critical thinking, problem-solving, and lifelong learning.

Acknowledgments

The authors thank Virginia Young, MLS, for assistance with the literature review search, and Dean Seehusen, MD, MPH, for assistance with the preparation of the CERA survey.

Presentation: Epling, JW, Heidelbaugh, J, Woolever, D, Castelli, G, Mi, M, Mader, EM, Morley, CP. What constitutes an EBM culture in training and education? 43rd Annual Meeting of the North American Primary Care Research Group; November 2015. Cancun, Mexico.

References

- Sackett DL, Rosenberg WM, Gray JA, Haynes RB, Richardson WS. Evidence based medicine: what it is and what it isn’t. BMJ. 1996;312(7023):71-72. https://doi.org/10.1136/bmj.312.7023.71

- Ahmadi S-F, Baradaran HR, Ahmadi E. Effectiveness of teaching evidence-based medicine to undergraduate medical students: a BEME systematic review. Med Teach. 2015;37(1):21-30. https://doi.org/10.3109/0142159X.2014.971724

- Ilic D, Forbes K. Undergraduate medical student perceptions and use of evidence-based medicine: a qualitative study. BMC Med Educ. 2010;10:58. https://doi.org/10.1186/1472-6920-10-58

- Hecht L, Buhse S, Meyer G. Effectiveness of training in evidence-based medicine skills for healthcare professionals: a systematic review. BMC Med Educ. 2016;16(1):103. https://doi.org/10.1186/s12909-016-0616-2

- O’Sullivan H, van Mook W, Fewtrell R, Wass V. Integrating professionalism into the curriculum: AMEE Guide No. 61. Med Teach. 2012;34(2):e64-e77. https://doi.org/10.3109/0142159X.2012.655610

- Edwards KS, Woolf PK, Hetzler T. Pediatric residents as learners and teachers of evidence-based medicine. Acad Med. 2002;77(7):748. https://doi.org/10.1097/00001888-200207000-00037

- Korenstein D, Dunn A, McGinn T. Mixing it up: integrating evidence-based medicine and patient care. Acad Med. 2002;77(7):741-742. https://doi.org/10.1097/00001888-200207000-00028

- Holloway R, Nesbit K, Bordley D, Noyes K. Teaching and evaluating first and second year medical students’ practice of evidence-based medicine. Med Educ. 2004;38(8):868-878. https://doi.org/10.1111/j.1365-2929.2004.01817.x

- van Dijk N, Hooft L, Wieringa-de Waard M. What are the barriers to residents’ practicing evidence-based medicine? A systematic review. Acad Med. 2010;85(7):1163-1170. https://doi.org/10.1097/ACM.0b013e3181d4152f

- O’Regan K, Marsden P, Sayers G, et al. Videoconferencing of a national program for residents on evidence-based practice: early performance evaluation. J Am Coll Radiol. 2010;7(2):138-145. https://doi.org/10.1016/j.jacr.2009.09.003

- Johnston JM, Schooling CM, Leung GM. A randomised-controlled trial of two educational modes for undergraduate evidence-based medicine learning in Asia. BMC Med Educ. 2009;9(1):63. https://doi.org/10.1186/1472-6920-9-63

- Keim SM, Howse D, Bracke P, Mendoza K. Promoting evidence based medicine in preclinical medical students via a federated literature search tool. Med Teach. 2008;30(9-10):880-884. https://doi.org/10.1080/01421590802258912

- Laird S, George J, Sanford SM, Coon S. Development, implementation, and outcomes of an initiative to integrate evidence-based medicine into an osteopathic curriculum. J Am Osteopath Assoc. 2010;110(10):593-601.

- Carpenter CR, Kane BG, Carter M, Lucas R, Wilbur LG, Graffeo CS. Incorporating evidence-based medicine into resident education: a CORD survey of faculty and resident expectations. Acad Emerg Med. 2010;17(suppl 2):S54-S61. https://doi.org/10.1111/j.1553-2712.2010.00889.x

- Oude Rengerink K, Thangaratinam S, Barnfield G, et al. How can we teach EBM in clinical practice? An analysis of barriers to implementation of on-the-job EBM teaching and learning. Med Teach. 2011;33(3):e125-e130. https://doi.org/10.3109/0142159X.2011.542520

- Rohwer A, Young T, van Schalkwyk S. Effective or just practical? An evaluation of an online postgraduate module on evidence-based medicine (EBM). BMC Med Educ. 2013;13(1):77. https://doi.org/10.1186/1472-6920-13-77

- Cavanaugh SK, Calabretta N. Meeting the challenge of evidence-based medicine in the family medicine clerkship: closing the loop from academics to office. Med Ref Serv Q. 2013;32(2):172-178. https://doi.org/10.1080/02763869.2013.776895

- Alahdab F, Firwana B, Hasan R, et al. Undergraduate medical students’ perceptions, attitudes, and competencies in evidence-based medicine (EBM), and their understanding of EBM reality in Syria. BMC Res Notes. 2012;5(1):431. https://doi.org/10.1186/1756-0500-5-431

- Mi M. Factors that influence effective evidence-based medicine instruction. Med Ref Serv Q. 2013;32(4):424-433. https://doi.org/10.1080/02763869.2013.837733

- Mi M, Moseley JL, Green ML. An instrument to characterize the environment for residents’ evidence-based medicine learning and practice. Fam Med. 2012;44(2):98-104.

- Mainous AG 3rd, Seehusen D, Shokar N. CAFM Educational Research Alliance (CERA) 2011 Residency Director survey: background, methods, and respondent characteristics. Fam Med. 2012;44(10):691-693.

- SurveyMonkey (software). https://www.surveymonkey.com. San Mateo, CA: SurveyMonkey, Inc; 2018.

- R: A language and environment for statistical computing. Version 3.2.2. (software). https://www.r-project.org/. R Core Team; 2015.

- Revelle W. psych: Procedures for Personality and Psychological Research. Version 1.5.8 (software). https://CRAN.R-project.org/package=psych. Evanston, IL: Northwestern University; 2015.

- Haynes MC, Heilman ME. It had to be you (not me)!: women’s attributional rationalization of their contribution to successful joint work outcomes. Pers Soc Psychol Bull. 2013;39(7):956-969. https://doi.org/10.1177/0146167213486358

- Fletcher C. The implications of research on gender differences in self-assessment and 360 degree appraisal. Hum Resour Manage J. 2016;9(August):39-46. https://doi.org/10.1111/j.1748-8583.1999.tb00187.x

- Allan GM, Manca D, Szafran O, Korownyk C. EBM a challenge for international medical graduates. Fam Med. 2007;39(3):160.

- Bergh A-M, Grimbeek J, May W, et al. Measurement of perceptions of educational environment in evidence-based medicine. Evid Based Med. 2014;19(4):123-131. https://doi.org/10.1136/eb-2014-101726

- Young T, Rohwer A, van Schalkwyk S, Volmink J, Clarke M. Patience, persistence and pragmatism: experiences and lessons learnt from the implementation of clinically integrated teaching and learning of evidence-based health care - a qualitative study. PLoS One. 2015;10(6):e0131121. https://doi.org/10.1371/journal.pone.0131121

- Blanco MA, Capello CF, Dorsch JL, Perry G, Zanetti ML. A survey study of evidence-based medicine training in US and Canadian medical schools. J Med Libr Assoc. 2014;102(3):160-168. https://doi.org/10.3163/1536-5050.102.3.005

- Kenefick CM, Boykan R, Chitkara M. Partnering with residents for evidence-based practice. Med Ref Serv Q. 2013;32(4):385-395. https://doi.org/10.1080/02763869.2013.837669
