Background and Objectives: Clinical teachers (or preceptors) have expressed uncertainty about what is expected of medical students and how to assess them. The Association of American Medical Colleges (AAMC) created a list of core skills that graduating medical students should be able to perform. Using this framework, we designed this innovation to provide medical students with specific, progressive clinical skills training that could be observed.

Methods: We used the AAMC skills to develop observable events, called Observed Practice Activities (OPAs), that students could accomplish with their outpatient preceptors. Preceptors and students were trained to use the OPA cards and all students turned in the cards at the end of the rotation.

Results: Seventy-nine of 115 preceptors and 80 of 149 students completed evaluations on the OPA cards. Both students (60%) and preceptors (70%) indicated the OPA cards were helpful for knowing expectations for a third-year medical student, although preceptors found the cards to be of greater value than the students did.

Conclusions: The OPA cards enable outpatient preceptors to document student progress toward graduated skill acquisition. In addition, the OPA cards provide preceptors and students with specific tasks, expectations, and a template for directly observed, competency-based feedback. The majority of preceptors found the OPA cards easy to use and reported that the cards did not disrupt their clinical work. Both students and preceptors found the cards helpful for understanding expectations of a third-year medical student in our course. The OPA cards could be adapted by other schools to evaluate progressive skill development throughout the year.

Medical schools rely on clinician educators to teach.1,2 Clinician educators, henceforth referred to as preceptors, consist of university-paid and community-based clinicians. Preceptors face tension between the competing demands of patient care and teaching, whether they are paid by the university or are unpaid, private clinicians.3

An integral responsibility of clinician educators is completing evaluations. However, previous studies indicated preceptors were unclear about medical student expectations, making it challenging to complete evaluation tools and follow grading criteria.4,5 Students have also expressed concerns over the subjectivity of evaluations, which could impact their final grade.6

Therefore, we developed an instrument students use to meet objectives, receive feedback, and address accreditation comparability elements.7 Reviewing competency-based frameworks8 led us to a workplace-based evaluation, the Observed Practice Activities (OPA) checklist,9 which we adapted to align with our required clinical skills.

Our goal with the OPA cards was to improve preceptor and student awareness of expectations during the Community Based Longitudinal Care (CBLC) course. This study reports perceptions about the OPA cards from preceptors and students.

The study took place at a public medical school in the Southeastern United States. CBLC is a required 16-week course, integrating outpatient family medicine, internal medicine and pediatrics clinical experiences. At the branch campus, CBLC integrated these disciplines over 24 weeks and included outpatient gynecology and psychiatry.

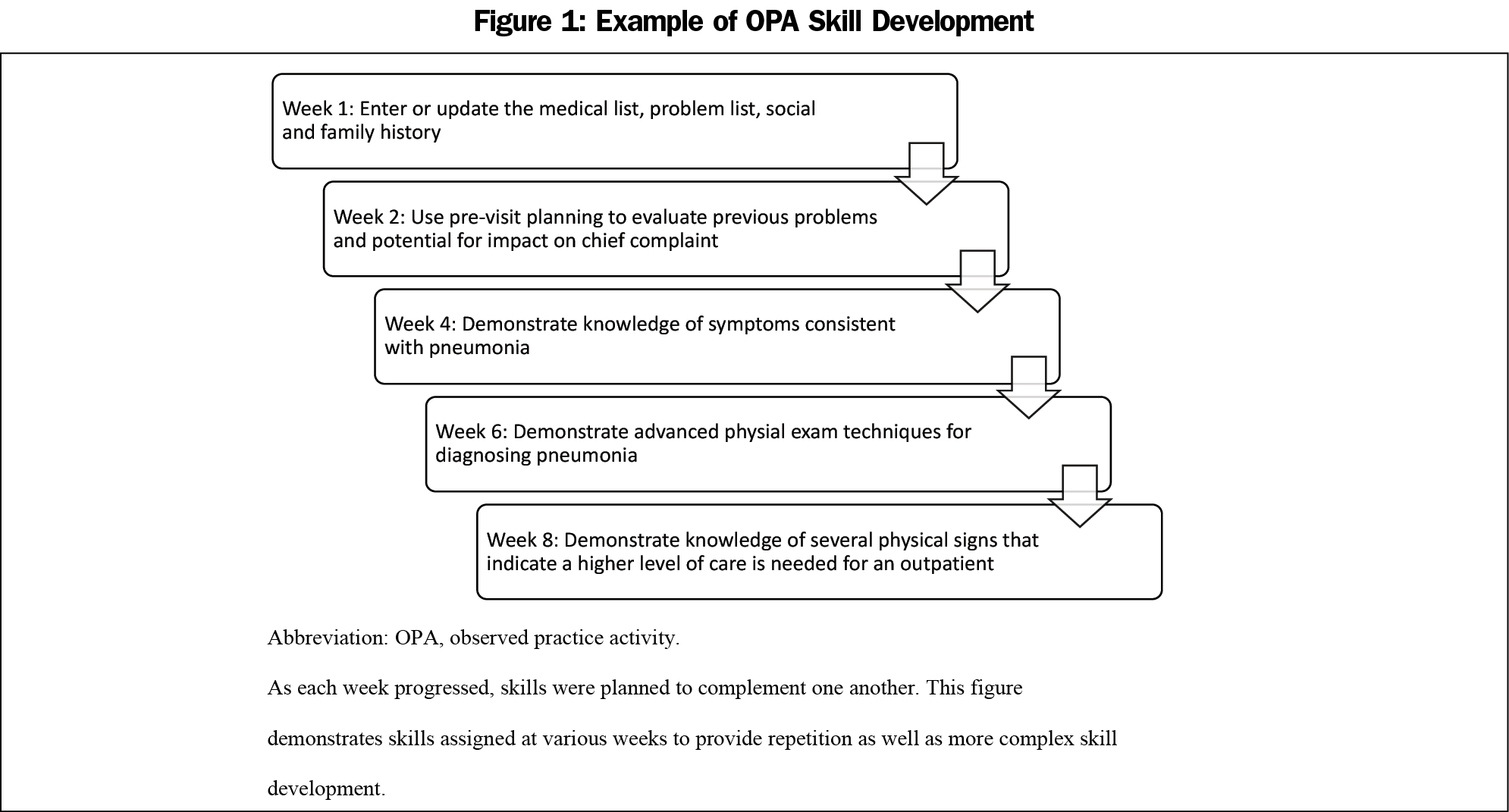

Using a consensus-building technique,10 a list of primary care clinical skills emerged from our objectives and published skills.8 The initial list compiled by course leaders included over 120 items. We consulted community-based preceptors to refine the list, resulting in a total of 113 items (see Appendix A, at: https://journals.stfm.org/media/4225/beck-dallaghan-appendix-a.pdf). We organized items by week, requiring students to complete a card each week for 15 weeks. We organized the OPA cards so skills increased in complexity, corresponding to didactic sessions (Figure 1).11 Preceptors had to observe and initial these skills, allowing students to receive feedback at the time of the activity.

We trained preceptors and students to use the OPA cards12 in faculty development meetings and at course orientation. Students received full credit for completed cards, which contributed to their final grade.

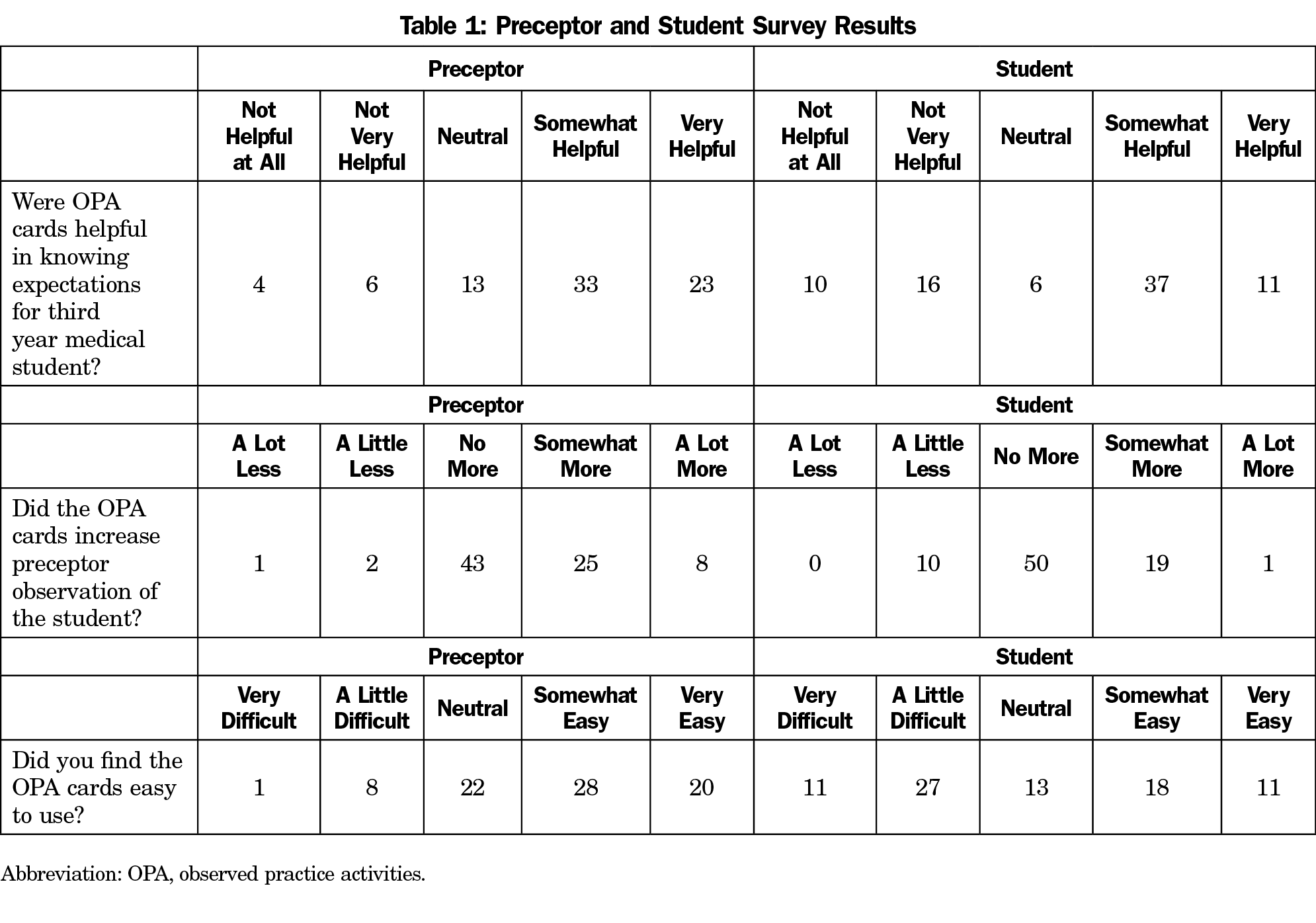

Anonymous preceptor and student evaluations asked questions related to usability, direct observation of students, and understanding expectations. Scales ranged from 1 (lowest rating) to 5 (highest rating). See Table 1 for different scale anchors. We analyzed numeric responses using independent samples Mann-Whitney U tests with IBM SPSS v 25 (Armonk, NY).
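The group comparison described above can be illustrated with a minimal sketch. It computes a two-sided Mann-Whitney U test in plain Python using average ranks for ties and a tie-corrected normal approximation without continuity correction. This is not the SPSS procedure used in the study; the approximation choices, the function names, and the rating values below are all assumptions made for illustration, and the ratings are hypothetical rather than study data.

```python
import math
from collections import Counter


def average_ranks(values):
    """Return 1-based ranks, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the full run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # positions i..j are 0-based, ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Return (U, two-sided p) using a tie-corrected normal approximation."""
    n1, n2 = len(x), len(y)
    combined = list(x) + list(y)
    ranks = average_ranks(combined)
    rank_sum_x = sum(ranks[:n1])
    u1 = rank_sum_x - n1 * (n1 + 1) / 2
    u2 = n1 * n2 - u1
    u = min(u1, u2)
    n = n1 + n2
    # Tie correction: sum of (t^3 - t) over each group of tied values.
    tie_term = sum(t ** 3 - t for t in Counter(combined).values())
    variance = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if variance == 0:
        return u, 1.0  # all observations identical, no evidence of difference
    z = (u - n1 * n2 / 2) / math.sqrt(variance)
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return u, p


# Hypothetical 1-5 ratings, NOT data from the study.
preceptor_ratings = [5, 4, 4, 5, 3, 4, 5, 4]
student_ratings = [3, 4, 2, 3, 4, 3, 5, 2]
u_stat, p_value = mann_whitney_u(preceptor_ratings, student_ratings)
print(f"U = {u_stat:.2f}, p = {p_value:.3f}")
```

A rank-based test suits these data because the 1 to 5 ratings are ordinal and heavily tied, so comparing ranks avoids assuming equal spacing between scale points.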

Thematic analysis identified patterns within the narrative data.13 One investigator (G.L.B.D.) coded comments. The first author (K.B.F.) reviewed the coding scheme. We reviewed codes to identify common themes from preceptors and medical students.

This study was reviewed and approved as secondary data by our office of human subjects review.

All 149 medical students completed their OPA cards. Approximately 20 had problems completing the card, which were addressed at midcourse feedback sessions. Alternative experiences were scheduled to ensure completion. Seventy-nine of 115 preceptors (68.7%) completed evaluations; 80 of 149 students (53.7%) completed evaluations (Table 1).
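The response rates reported above follow directly from the counts. As a quick check, a short sketch (the helper name `response_rate` is hypothetical) reproduces the arithmetic:

```python
def response_rate(completed, eligible):
    """Return the response rate as a percentage rounded to one decimal place."""
    return round(completed / eligible * 100, 1)


# Counts taken from the text: 79 of 115 preceptors, 80 of 149 students.
preceptor_rate = response_rate(79, 115)
student_rate = response_rate(80, 149)
print(preceptor_rate, student_rate)
```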

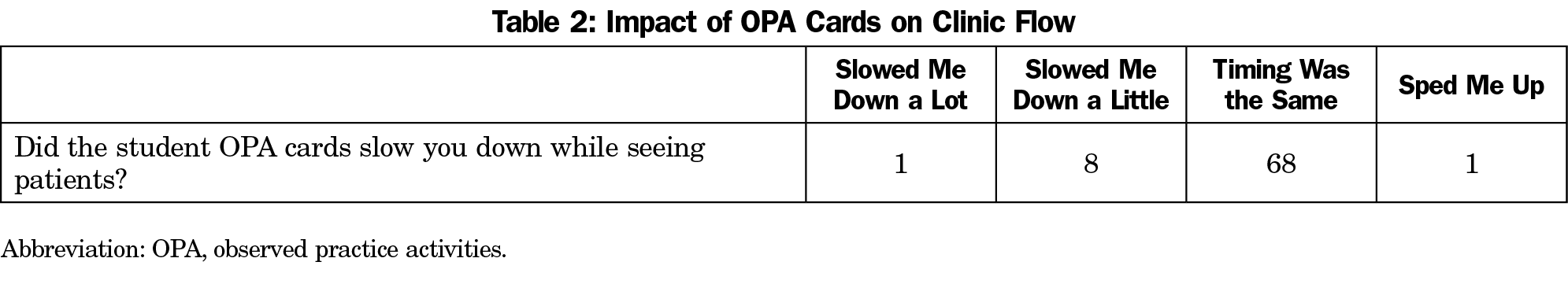

Although students (60%) and preceptors (70%) indicated the OPA cards clarified expectations, preceptors found the cards of greater value (U=2,435.00, P=.008). Most preceptors (88%) found the cards easy to use, compared with 58% of students (U=1,969.50, P=.001). Preceptors felt more strongly than students that using OPA cards encouraged observation (U=2,431.50, P=.004). In addition, 90% of the preceptors indicated OPA cards did not slow patient flow in clinic (Table 2).

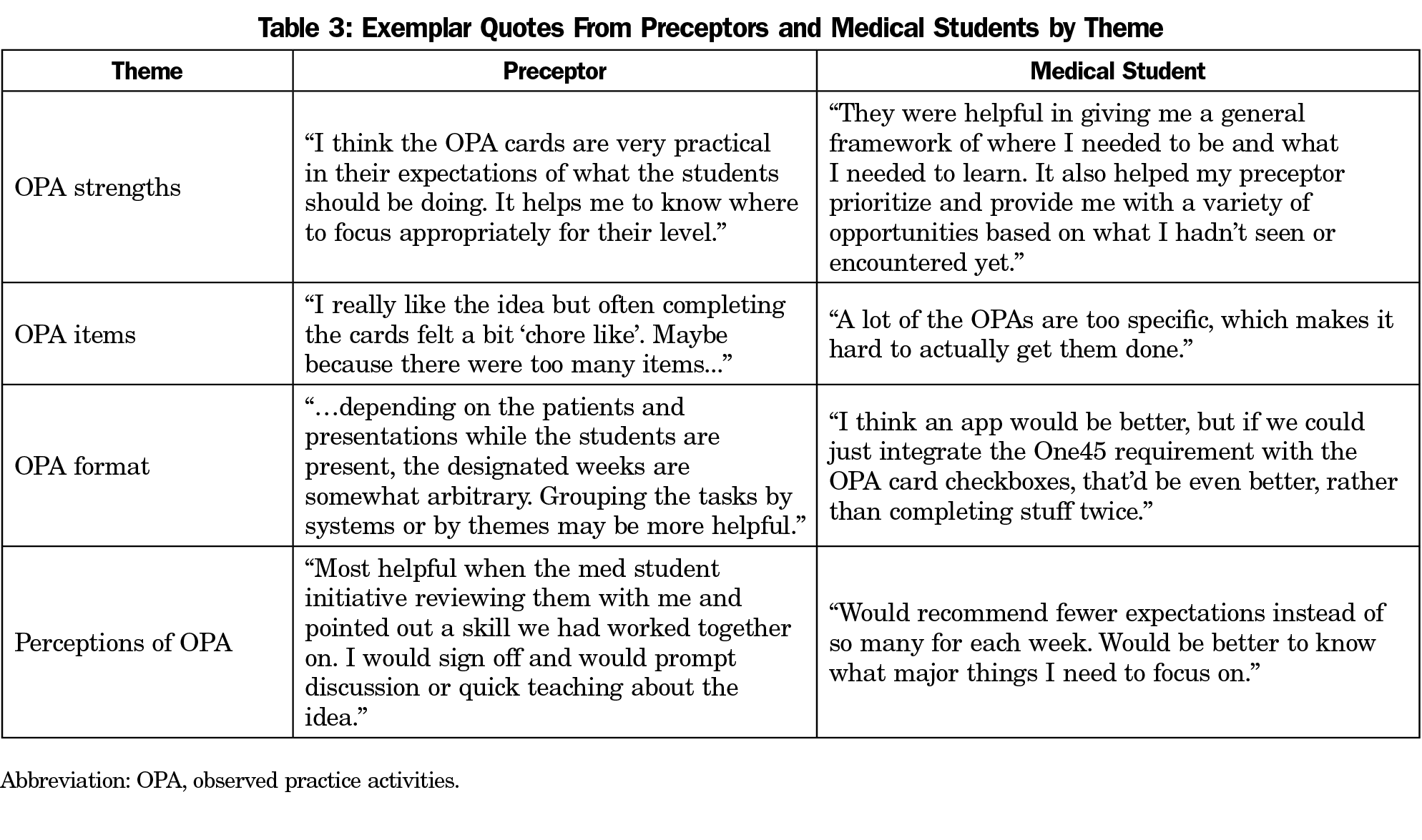

Analysis of narrative comments (38 students, 22 preceptors) resulted in 40 unique codes coalescing into four themes: strengths of OPA, OPA items, OPA format, and perceptions of OPA. Table 3 lists themes and exemplar quotes.

For strengths of OPA cards, preceptors emphasized the benefits of being reminded about expectations. Students found the cards enhanced their experiences, specifically noting:

…they were also helpful for the specialty days as these days were more limited and it helped bring up conversations about the important topics in that field.

Although the OPA cards were helpful, the specificity and volume of the items presented challenges. Respondents described the OPA card format as awkward and suggested incorporating the OPA into a mobile platform.

Comments revealed divergent perceptions about OPA cards. Preceptors and students felt this was one more task to complete. Preceptors noted that when students were proactive, it reminded them to help students meet requirements.

In this study, we explored preceptor and student perceptions of a clinical skills observation card. Similar to other studies, preceptors did not feel the OPA cards burdened their clinical schedules.2,14,15 A key finding was that students and preceptors felt better informed about the expectations. Preceptors felt more confident teaching because they had a specific list from which to teach.4 Students appreciated knowing what was expected of them in clinic11 and the list was particularly helpful in clinical settings where preceptors rarely teach students.

Preceptors and students indicated that some items on the OPA cards were either not relevant or too specific. For this reason, a small number of students struggled to complete a task, at which point we scheduled alternative experiences.

The weekly format was felt to be cumbersome, particularly since there is no guarantee tasks on a weekly card will present in clinic that week. Students expressed concerns that items seemed repetitive; however, competency-based medical education is intended to improve the quantity and quality of feedback over time and in different contexts.16 Even though a consensus process was employed to generate the OPA cards, after implementation it became evident that regular review of the items is necessary, and adjustments need to be made. Future work with the OPA cards will include organizing items better and clarifying items perceived to lack relevance.

This study was performed at a single institution where expectations of students’ clinical skills may differ from those at other medical schools. However, identifying core skills using a consensus process could be replicated by other schools on longitudinal or block clerkships.

Based on our evaluation, preceptors found the OPA cards easy to use and reported that the cards did not slow them down during their clinical work. Students and preceptors found the cards helpful for understanding expectations. Logistical and item content issues were noted and continue to be reviewed annually. Other schools can adapt our OPA cards by coming to consensus on core clinical skill requirements.

References

- McCurdy FA, Beck GL, Kollath JP, Harper JL. Pediatric clerkship experience and performance in the Nebraska Education Consortium: a community vs university comparison. Arch Pediatr Adolesc Med. 1999;153(9):989-994. doi:10.1001/archpedi.153.9.989

- Christner JG, Dallaghan GB, Briscoe G, et al. The community preceptor crisis: recruiting and retaining community-based faculty to teach medical students-a shared perspective from the Alliance for Clinical Education. Teach Learn Med. 2016;28(3):329-336. doi:10.1080/10401334.2016.1152899

- ten Cate O, Scheele F. Competency-based postgraduate training: can we bridge the gap between theory and clinical practice? Acad Med. 2007;82(6):542-547. doi:10.1097/ACM.0b013e31805559c7

- Beck Dallaghan GL, Alerte AM, Ryan MS, et al. Enlisting community-based preceptors: a multi-center qualitative action study of U.S. pediatricians. Acad Med. 2017;92(8):1168-1174. doi:10.1097/ACM.0000000000001667

- Paul CR, Vercio C, Tenney Soeiro R, et al. The decline in community preceptor teaching activity: from the voices of pediatricians who have stopped teaching medical students. Acad Med. 2020;95(2):301-309. doi:10.1097/ACM.0000000000002947

- Mazotti L, O’Brien B, Tong L, Hauer KE. Perceptions of evaluation in longitudinal versus traditional clerkships. Med Educ. 2011;45(5):464-470. doi:10.1111/j.1365-2923.2010.03904.x

- Liaison Committee on Medical Education. LCME Data Collection Instrument, for Full Accreditation Surveys in AY 2018-19. https://static.vtc.vt.edu/media/documents/2018-19_DCI-Full_2017-06-13_1.pdf. Accessed April 14, 2021.

- Association of American Medical Colleges. Core entrustable activities for entering residency. https://members.aamc.org/eweb/upload/Core%20EPA%20Curriculum%20Dev%20Guide.pdf. Accessed November 8, 2018.

- Warm EJ, Mathis BR, Held JD, et al. Entrustment and mapping of observable practice activities for resident assessment. J Gen Intern Med. 2014;29(8):1177-1182. doi:10.1007/s11606-014-2801-5

- Ogden SR, Culp WC Jr, Villamaria FJ, Ball TR. Developing a checklist: consensus via a modified Delphi technique. J Cardiothorac Vasc Anesth. 2016;30(4):855-858. doi:10.1053/j.jvca.2016.02.022

- Hicks PJ, Margolis MJ, Carraccio CL, et al; PMAC Module 1 Study Group. A novel workplace-based assessment for competency-based decisions and learner feedback. Med Teach. 2018;40(11):1143-1150. doi:10.1080/0142159X.2018.1461204

- Nousiainen MT, Caverzagie KJ, Ferguson PC, Frank JR; ICBME Collaborators. Implementing competency-based medical education: what changes in curricular structure and processes are needed? Med Teach. 2017;39(6):594-598. doi:10.1080/0142159X.2017.1315077

- Clarke V, Braun V. Thematic analysis. J Posit Psychol. 2017;12(3):297-298. doi:10.1080/17439760.2016.1262613

- Bodenheimer T, Sinsky C. From triple to quadruple aim: care of the patient requires care of the provider. Ann Fam Med. 2014;12(6):573-576. doi:10.1370/afm.1713

- Caverzagie KJ, Nousiainen MT, Ferguson PC, et al; ICBME Collaborators. Overarching challenges to the implementation of competency-based medical education. Med Teach. 2017;39(6):588-593. doi:10.1080/0142159X.2017.1315075

- Harris P, Bhanji F, Topps M, et al; ICBME Collaborators. Evolving concepts of assessment in a competency-based world. Med Teach. 2017;39(6):603-608. doi:10.1080/0142159X.2017.1315071
